Resources, Conservation and Recycling (IF 13.2), Pub Date: 2022-09-20, DOI: 10.1016/j.resconrec.2022.106664. Da Huo, Yuksel Asli Sari, Ryan Kealey, Qian Zhang


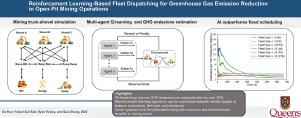

In typical mining operations, more than half of direct greenhouse gas (GHG) emissions come from haulage fuel consumption. Smarter truck fleet dispatching is a feasible and manageable way to reduce these direct emissions using existing equipment. Conventional scheduling-based and human-led dispatching solutions often operate at lower efficiency, wasting resources and increasing emissions. In this study, a simulated environment is developed to test smarter real-time dispatching systems, and Q-learning, a model-free reinforcement learning algorithm, is used to improve fleet productivity, decrease waiting time, and consequently reduce GHG emissions. The proposed algorithm trains the fleet to make better dispatching decisions based on payload, traffic, queueing, and maintenance conditions. Results show that, compared with fixed scheduling, this solution can reduce GHG emissions from haulage fuel consumption by over 30% while achieving the same production levels. The proposed solution also shows advantages in handling operational randomness and in balancing fleet size, productivity, and emissions.
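The abstract describes Q-learning as a model-free method that learns dispatch decisions from simulated experience rather than from a fixed schedule. The sketch below illustrates that general technique on a hypothetical toy problem; it is not the authors' simulator, state design, or reward. Assumptions, all illustrative: three shovels with made-up travel times (`TRAVEL_MIN`), a fixed per-truck loading time (`LOAD_MIN`), a state consisting only of capped queue lengths (the paper also considers payload, traffic, and maintenance), and a reward equal to minus the travel-plus-waiting minutes as a rough stand-in for fuel burned. The names `step`, `q_update`, `greedy_action`, and `train` are hypothetical.

```python
import random
from collections import defaultdict

# Hypothetical toy setting, NOT the paper's simulator: each decision sends
# one empty truck from the dump to one of N_SHOVELS loading points.
N_SHOVELS = 3
MAX_QUEUE = 3                  # queue lengths are capped to keep the table small
TRAVEL_MIN = [4.0, 6.0, 8.0]   # assumed travel time to each shovel (minutes)
LOAD_MIN = 3.0                 # assumed loading time per queued truck (minutes)


def step(state, action, rng):
    """Dispatch to shovel `action`; return (reward, next_state).

    The reward is minus (travel + queue wait) minutes, a crude proxy for
    fuel burned while hauling or idling, and hence for GHG emissions.
    """
    wait = state[action] * LOAD_MIN
    reward = -(TRAVEL_MIN[action] + wait)
    # Other trucks arrive or leave at random; the dispatched truck joins a queue.
    queues = [max(0, min(MAX_QUEUE, n + rng.choice((-1, 0, 1)))) for n in state]
    queues[action] = min(MAX_QUEUE, queues[action] + 1)
    return reward, tuple(queues)


def q_update(q_sa, reward, next_max, alpha, gamma):
    """One tabular Q-learning update toward the TD target r + gamma * max_a' Q(s', a')."""
    return q_sa + alpha * (reward + gamma * next_max - q_sa)


def greedy_action(q, state):
    """Index of the shovel with the highest learned Q-value in `state`."""
    values = q[state]
    return max(range(N_SHOVELS), key=lambda a: values[a])


def train(episodes=2000, steps=50, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = defaultdict(lambda: [0.0] * N_SHOVELS)  # Q-table: state -> value per shovel
    for _ in range(episodes):
        state = tuple(rng.randint(0, MAX_QUEUE) for _ in range(N_SHOVELS))
        for _ in range(steps):
            # Epsilon-greedy exploration over dispatch targets
            if rng.random() < epsilon:
                action = rng.randrange(N_SHOVELS)
            else:
                action = greedy_action(q, state)
            reward, nxt = step(state, action, rng)
            # Off-policy update: bootstrap from the best action in the next state
            q[state][action] = q_update(q[state][action], reward, max(q[nxt]), alpha, gamma)
            state = nxt
    return q


if __name__ == "__main__":
    q_table = train()
    for s in [(0, 0, 0), (3, 0, 0), (3, 3, 0)]:
        print(s, "-> send truck to shovel", greedy_action(q_table, s))
```

Because every reward in this toy is negative, initializing the Q-table at zero is optimistic: an untried shovel keeps a higher value than tried ones, so the greedy policy samples each option in a state before settling, and epsilon-greedy adds further exploration. Extending the state with payload, traffic, and maintenance signals, as the abstract describes, would enlarge the table considerably.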

Title: Reinforcement learning-based fleet dispatching for greenhouse gas emission reduction in open-pit mining operations

