Engineering (IF 12.8), Pub Date: 2022-09-07, DOI: 10.1016/j.eng.2022.04.024. Haifeng Wang, Jiwei Li, Hua Wu, Eduard Hovy, Yu Sun

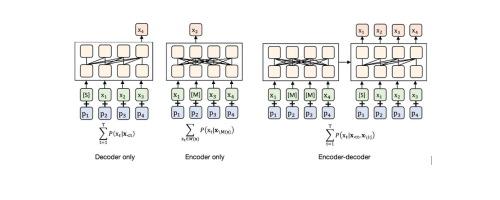

Pre-trained language models have achieved striking success in natural language processing (NLP), leading to a paradigm shift from supervised learning to pre-training followed by fine-tuning. The NLP community has witnessed a surge of research interest in improving pre-trained models. This article presents a comprehensive review of representative work and recent progress in the NLP field and introduces a taxonomy of pre-trained models. We first give a brief introduction to pre-trained models, followed by characteristic methods and frameworks. We then introduce and analyze the impact and challenges of pre-trained models and their downstream applications. Finally, we briefly conclude and address future research directions in this field.

Title: Pre-Trained Language Models and Their Applications

