Neurocomputing (IF 6), Pub Date: 2021-09-08, DOI: 10.1016/j.neucom.2021.09.003
Xianggen Liu (1, 2, 3), Pengyong Li (2, 3, 4), Fandong Meng (5), Hao Zhou (6), Huasong Zhong (7), Jie Zhou (5), Lili Mou (8), Sen Song (2, 3)


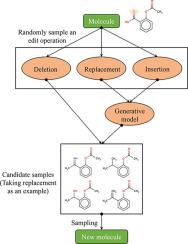

Optimization of discrete structures aims to generate a new structure with a better property given an existing one, which is a fundamental problem in machine learning. Unlike continuous optimization, real-world applications of discrete optimization (e.g., text generation) are very challenging because discrete structures carry complex, long-range constraints involving both syntax and semantics. In this work, we present SAGS, a novel Simulated Annealing framework for Graph and Sequence optimization. The key idea is to integrate powerful neural networks into metaheuristics (e.g., simulated annealing, SA) to restrict the search space in discrete optimization. We start by defining a sophisticated objective function that involves the property of interest and pre-defined constraints (e.g., grammar validity). SAGS searches the discrete space towards this objective by performing a sequence of local edits, where deep generative neural networks propose the content of each edit and thus control the quality of editing. We evaluate SAGS on paraphrase generation (sequence optimization) and molecule generation (graph optimization). Extensive results show that our approach achieves state-of-the-art performance compared with existing paraphrase generation methods in terms of both automatic and human evaluations. Furthermore, SAGS significantly outperforms all previous methods in molecule generation.
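The search loop described above (local edits scored by an objective that combines a property with constraints, accepted or rejected by simulated annealing) can be sketched as follows. This is a minimal illustration under stated assumptions, not the SAGS implementation: the objective (a property score minus a weighted constraint penalty), the geometric cooling schedule, and all names (`propose_edit`, `simulated_annealing`, `toy_objective`) are hypothetical. A uniform random token also stands in for the deep generative network that proposes edit content in SAGS.

```python
import math
import random
from typing import Callable, List, Sequence, Tuple

Seq = List[str]


def propose_edit(x: Seq, vocab: Sequence[str], rng: random.Random) -> Seq:
    """Return a copy of non-empty x with one local edit (replace, insert, or delete).

    Hypothetical stand-in: SAGS uses a generative network to propose the edit
    content; here the new token is drawn uniformly from `vocab`.
    """
    y = list(x)
    op = rng.choice(["replace", "insert", "delete"])
    if op == "delete" and len(y) > 1:
        del y[rng.randrange(len(y))]
    elif op == "insert":
        y.insert(rng.randrange(len(y) + 1), rng.choice(vocab))
    else:
        # "replace" (also the fallback when a delete would empty the sequence)
        y[rng.randrange(len(y))] = rng.choice(vocab)
    return y


def simulated_annealing(
    x0: Seq,
    objective: Callable[[Seq], float],
    vocab: Sequence[str],
    steps: int = 2000,
    t0: float = 1.0,
    decay: float = 0.995,
    t_min: float = 1e-3,
    seed: int = 0,
) -> Tuple[Seq, float]:
    """Maximize `objective` over sequences by a chain of local edits."""
    if not x0:
        raise ValueError("x0 must be non-empty")
    rng = random.Random(seed)
    x, fx = list(x0), objective(x0)
    best, f_best = list(x), fx
    t = t0
    for _ in range(steps):
        y = propose_edit(x, vocab, rng)
        fy = objective(y)
        # Metropolis rule: always accept improvements; accept a worse
        # candidate with probability exp((fy - fx) / t), which shrinks as t cools.
        if fy >= fx or rng.random() < math.exp((fy - fx) / t):
            x, fx = y, fy
            if fx > f_best:
                best, f_best = list(x), fx
        t = max(t * decay, t_min)  # geometric cooling, floored at t_min
    return best, f_best


def toy_objective(x: Seq, max_len: int = 6, penalty: float = 2.0) -> float:
    """Hypothetical objective: property score minus a constraint penalty.

    property:   number of "good" tokens (stands in for a learned property model)
    constraint: tokens beyond max_len (stands in for e.g. grammar validity)
    """
    prop = sum(tok == "good" for tok in x)
    violation = max(0, len(x) - max_len)
    return prop - penalty * violation


best, score = simulated_annealing(["bad", "bad", "bad"], toy_objective, vocab=["good", "bad", "ok"])
print(best, score)
```

Accepting some worse candidates early, while the temperature is high, lets the search escape local optima. As the temperature decays, the loop behaves more and more like greedy hill climbing. In this toy setting the best achievable score is 6 (six "good" tokens within the length limit).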


Title: Simulated Annealing for Optimization of Graphs and Sequences


京公网安备 11010802027423号
