Computer Speech & Language (IF 4.3), Pub Date: 2021-07-28, DOI: 10.1016/j.csl.2021.101268
Yingying Liu (1, 2), Peipei Li (1, 2), Xuegang Hu (1, 2, 3)

|

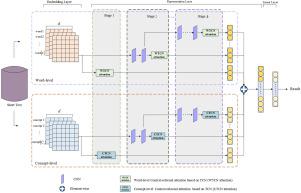

Short text classification is a challenging task in natural language processing. Existing traditional methods that use external knowledge to handle the sparsity and ambiguity of short texts have achieved good results, but their accuracy still needs improvement because they ignore context-relevant features. Deep learning methods based on RNNs or CNNs have therefore become increasingly popular for short text classification. However, RNN-based methods parallelize poorly, which lowers their efficiency, while CNN-based methods ignore word order and the relationships between words, which lowers their effectiveness. Motivated by this, we propose CRFA, a novel short text classification approach that combines Context-Relevant Features with a multi-stage Attention model based on a Temporal Convolutional Network (TCN) and CNN. First, we use Probase as external knowledge to enrich the semantic representation, addressing the data sparsity and ambiguity of short texts. Second, we design a multi-stage attention model based on TCN and CNN: TCN is introduced to improve the parallelism, and hence the efficiency, of the model, while at each stage discriminative features are obtained by fusing attention with CNNs at different levels to improve accuracy. Specifically, TCN captures context-relevant features at the word and concept levels; meanwhile, to measure the importance of features, Word-level TCN (WTCN) based attention, Concept-level TCN (CTCN) based attention, and different-level CNNs are used at each stage to focus on the more important features. Finally, experimental studies demonstrate the effectiveness and efficiency of our approach for short text classification compared with several well-known CNN- and RNN-based short text classification approaches.
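The abstract attributes CRFA's efficiency gain over RNNs to TCN. As a hedged illustration only (the abstract does not give the authors' layer configuration), the pure-Python sketch below shows the generic TCN building block: a single-channel dilated causal convolution, plus a small stack whose dilation doubles per layer. The function names, the toy kernel, and the ReLU-plus-residual update in `tcn_stack` are assumptions chosen for readability, not details from the paper.

```python
from typing import List, Sequence


def dilated_causal_conv1d(
    x: Sequence[float], weights: Sequence[float], dilation: int
) -> List[float]:
    """One channel of a dilated causal convolution (generic TCN building block).

    Output position t sees only x[t], x[t - d], x[t - 2d], ... and never the
    future; positions before the start of the sequence act as zero padding.
    """
    k = len(weights)
    out = []
    for t in range(len(x)):
        # Each out[t] depends only on the inputs, not on other outputs of this
        # layer, so all positions could be computed in parallel (no recurrence).
        acc = 0.0
        for i, w in enumerate(weights):
            # weights[-1] multiplies the current step, weights[0] the oldest tap.
            src = t - (k - 1 - i) * dilation
            if src >= 0:
                acc += w * x[src]
        out.append(acc)
    return out


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Number of input steps a single output of a stacked TCN can see."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


def tcn_stack(x: Sequence[float], weights: Sequence[float], num_layers: int) -> List[float]:
    """Hypothetical toy stack: dilation 1, 2, 4, ...; ReLU plus a residual add."""
    h = list(x)
    for layer in range(num_layers):
        conv = dilated_causal_conv1d(h, weights, dilation=2 ** layer)
        h = [max(0.0, c) + r for c, r in zip(conv, h)]
    return h


if __name__ == "__main__":
    impulse = [1.0] + [0.0] * 9
    print(tcn_stack(impulse, [1.0, 1.0], num_layers=3))
    print(receptive_field(2, [1, 2, 4]))
```

Because each output of a layer depends only on that layer's inputs, never on its own earlier outputs, all positions can be computed at once, whereas an RNN must step through its hidden states in order. Doubling the dilation makes the receptive field grow exponentially with depth: with kernel size 2 and dilations 1, 2, 4, one output sees 8 input steps, which is why the impulse above reaches positions 0 to 7 and no further.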

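The abstract does not specify how the WTCN- and CTCN-based attention scores are computed. The sketch below therefore uses plain dot-product attention pooling, a common generic choice and an assumption here: each position's TCN feature vector is scored against a query vector, the scores are normalized with a softmax, and the weighted sum is taken. `attention_pool`, `fuse`, and the hand-set query vectors are hypothetical; in a trained model the queries would be learned parameters, and the concatenation in `fuse` is only one possible way to combine the word- and concept-level views.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(features: Sequence[Vector], query: Vector) -> Tuple[Vector, List[float]]:
    """Generic dot-product attention pooling over a sequence of feature vectors.

    Returns the attention-weighted sum of the features and the weights themselves.
    """
    scores = [sum(q * f for q, f in zip(query, feat)) for feat in features]
    weights = softmax(scores)
    dim = len(features[0])
    pooled = [sum(w * feat[j] for w, feat in zip(weights, features)) for j in range(dim)]
    return pooled, weights


def fuse(
    word_feats: Sequence[Vector],
    concept_feats: Sequence[Vector],
    word_query: Vector,
    concept_query: Vector,
) -> Vector:
    """Hypothetical fusion: pool each level separately, then concatenate."""
    word_vec, _ = attention_pool(word_feats, word_query)
    concept_vec, _ = attention_pool(concept_feats, concept_query)
    return word_vec + concept_vec  # list concatenation, not element-wise addition


if __name__ == "__main__":
    words = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # toy word-level TCN outputs
    concepts = [[0.2, 0.8], [0.9, 0.1]]           # toy concept-level TCN outputs
    print(fuse(words, concepts, [2.0, 0.0], [0.0, 2.0]))
```

With an all-zero query every position receives equal weight, so the pooled vector is simply the mean; a larger score for one position shifts the weighted sum toward that position's features.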
Title: Combining context-relevant features with multi-stage attention network for short text classification

京公网安备 11010802027423号
