Computers & Security (IF 5.6), Pub Date: 2021-07-17, DOI: 10.1016/j.cose.2021.102402

Nguyen Truong¹, Kai Sun¹, Siyao Wang¹, Florian Guitton¹, YiKe Guo¹,²


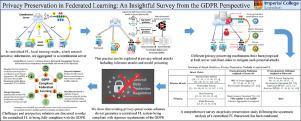

In recent years, with the rapid growth of Machine Learning (ML)-based applications and services, ensuring data privacy and security has become a critical obligation. ML-based service providers face not only difficulties in collecting and managing data across heterogeneous sources but also the challenge of complying with rigorous data protection regulations such as the EU/UK General Data Protection Regulation (GDPR). Furthermore, conventional centralised ML approaches have long carried privacy risks of personal data leakage, misuse, and abuse. Federated learning (FL) has emerged as a promising solution that enables distributed collaborative learning without disclosing the original training data. Unfortunately, keeping data and computation on-device, as FL does, is not sufficient to guarantee privacy, because the model parameters exchanged among participants carry sensitive information that can be exploited in privacy attacks. Consequently, FL-based systems are not inherently compliant with the GDPR. This article surveys state-of-the-art privacy-preservation techniques in FL in relation to GDPR requirements. It also examines the existing challenges and discusses prospective approaches, following the GDPR regulatory guidelines, that FL-based systems should implement to fully comply with the GDPR.
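The claim that exchanged model parameters can leak training data can be made concrete with a toy case. The sketch below is a minimal illustration under stated assumptions, not a technique taken from the survey: it assumes a linear model with a bias term, squared loss, a client that takes one SGD step on a single private example, and a server that knows the initial parameters and the learning rate. `local_update` and `reconstruct_input` are hypothetical helper names.

```python
from typing import List, Tuple


def local_update(w: List[float], b: float, x: List[float], y: float,
                 lr: float) -> Tuple[List[float], float]:
    """Client side (hypothetical helper): one SGD step on a single private
    example (x, y) for the linear model p = w.x + b with loss 0.5*(p - y)**2.
    Only the updated parameters are sent to the server, never x or y."""
    p = sum(wi * xi for wi, xi in zip(w, x)) + b
    err = p - y  # dL/dp
    new_w = [wi - lr * err * xi for wi, xi in zip(w, x)]  # dL/dw_i = err * x_i
    new_b = b - lr * err  # dL/db = err
    return new_w, new_b


def reconstruct_input(w: List[float], b: float, new_w: List[float],
                      new_b: float, lr: float) -> List[float]:
    """Server side (hypothetical helper): recover the private input from the
    parameter change alone, using x_i = (dL/dw_i) / (dL/db)."""
    grad_b = (b - new_b) / lr
    if grad_b == 0:
        raise ValueError("zero prediction error: the update reveals nothing")
    return [(wi - nwi) / lr / grad_b for wi, nwi in zip(w, new_w)]


if __name__ == "__main__":
    w0, b0, lr = [0.1, -0.2, 0.3], 0.0, 0.1
    x_private, y_private = [4.0, 2.0, 7.0], 1.0
    w1, b1 = local_update(w0, b0, x_private, y_private, lr)
    recovered = reconstruct_input(w0, b0, w1, b1, lr)
    print([round(v, 6) for v in recovered])  # [4.0, 2.0, 7.0]
```

Because each weight gradient is the prediction error times the corresponding input feature, and the bias gradient is the error itself, their ratio returns the raw input even though only parameters left the device. Averaging over many examples or clients generally makes such recovery harder, but it illustrates why on-device training alone does not guarantee privacy.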

Title: Privacy preservation in federated learning: an insightful survey from the GDPR perspective
