International Journal of Human-Computer Studies (IF 5.4), Pub Date: 2021-06-18, DOI: 10.1016/j.ijhcs.2021.102684. Tjeerd A.J. Schoonderwoerd, Wiard Jorritsma, Mark A. Neerincx, Karel van den Bosch


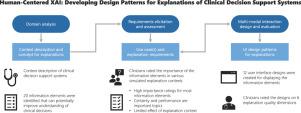

Much of the research on eXplainable Artificial Intelligence (XAI) has centered on making machine learning models transparent. More recently, human-centered approaches to XAI have received increasing attention. Yet there is a lack of practical methods and examples for integrating human factors into the development of AI-generated explanations that humans demonstrably take up to improve their performance. This paper presents a case study applying a human-centered design approach to AI-generated explanations. The approach, DoReMi, consists of three components: Domain analysis to define the concept and context of explanations; Requirements elicitation and assessment to derive the use cases and explanation requirements; and the consequent Multi-modal interaction design and evaluation to create a library of design patterns for explanations. In the case study, we adopt the DoReMi approach to design explanations for a Clinical Decision Support System (CDSS) for child health. In the requirements elicitation and assessment, a user study with experienced paediatricians uncovered what explanations the CDSS should provide. In the interaction design and evaluation, a second user study tested the resulting interaction design patterns. The case study yielded a first set of user requirements and design patterns for an explainable decision support system in medical diagnosis, and shows how to involve expert end users in the development process and how to develop more or less generic solutions for general design problems in XAI.

Title: Human-centered XAI: Developing design patterns for explanations of clinical decision support systems
