Knowledge-Based Systems (IF 8.8), Pub Date: 2021-06-11, DOI: 10.1016/j.knosys.2021.107216
Xu Liu, Yingguang Li, Qinglu Meng, Gengxiang Chen


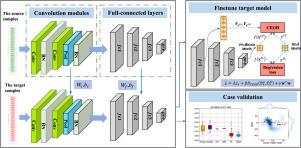

Deep transfer learning (DTL) has received increasing attention in smart manufacturing, but most current studies address marginal distribution shift in classification. We observe a new regression scenario in machine health monitoring systems (MHMS) in which the conditional distribution differs across domains, and we propose a general theoretical approach intended for broader applications. In this paper, we propose a DTL framework, CDAR (conditional distribution deep adaptation in regression). Because only a few labeled target samples are available, CDAR does not consider the prediction accuracy of individual samples alone; it also aims to preserve the global properties of the conditional distribution dominated by the target data. To this end, CDAR uses a hybrid loss function that combines the mean square error (MSE) with the conditional embedding operator discrepancy (CEOD), and the target model is fine-tuned by minimizing this loss through back-propagation. The performance of CDAR is compared with two classical marginal distribution adaptation algorithms, TCA and DAN, and with a DTL-specific method, FA. Experiments on two real-world datasets verify the effectiveness of our method.
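The hybrid loss described above can be illustrated with a minimal NumPy sketch. The abstract gives no formulas, so everything here is an assumption rather than the authors' implementation: the empirical conditional embedding operator is taken in its usual kernel form, C = Ψ (K + λ n I)⁻¹ Φᵀ, the discrepancy is the squared Hilbert-Schmidt norm of the difference between the source and target operators, and the Gaussian kernel, the regularizer `lam`, the bandwidth `gamma`, the weight `mu`, and the way target predictions enter the discrepancy are all illustrative choices.

```python
import numpy as np

def rbf_kernel(a, b, gamma=1.0):
    """Gaussian kernel matrix with entries exp(-gamma * ||a_i - b_j||^2)."""
    sq = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2.0 * a @ b.T
    return np.exp(-gamma * np.maximum(sq, 0.0))

def ceod(xs, ys, xt, yt, lam=1e-2, gamma=1.0):
    """Squared Hilbert-Schmidt distance between two empirical conditional
    embedding operators (assumed form, not taken from the paper).

    xs, xt: inputs or features, shape (n, d); ys, yt: outputs, shape (n, 1).
    """
    ns, nt = len(xs), len(xt)
    # Regularized inverse Gram matrices W = (K + lam * n * I)^{-1}
    ws = np.linalg.inv(rbf_kernel(xs, xs, gamma) + lam * ns * np.eye(ns))
    wt = np.linalg.inv(rbf_kernel(xt, xt, gamma) + lam * nt * np.eye(nt))
    # Gram matrices on the inputs (K) and on the outputs (L)
    k_ss, k_tt, k_ts = rbf_kernel(xs, xs, gamma), rbf_kernel(xt, xt, gamma), rbf_kernel(xt, xs, gamma)
    l_ss, l_tt, l_st = rbf_kernel(ys, ys, gamma), rbf_kernel(yt, yt, gamma), rbf_kernel(ys, yt, gamma)
    # ||C_s - C_t||^2 = <C_s, C_s> + <C_t, C_t> - 2 <C_s, C_t>, each as a trace
    term_ss = np.trace(ws @ l_ss @ ws @ k_ss)
    term_tt = np.trace(wt @ l_tt @ wt @ k_tt)
    term_st = np.trace(ws @ l_st @ wt @ k_ts)
    return float(term_ss + term_tt - 2.0 * term_st)

def hybrid_loss(y_pred_t, y_t, x_s, y_s, x_t, mu=1.0):
    """MSE on the labeled target samples plus mu times the discrepancy.

    Assumption: the target model's predictions define the target conditional.
    """
    mse = float(np.mean((y_pred_t - y_t) ** 2))
    return mse + mu * ceod(x_s, y_s, x_t, y_pred_t)
```

In an actual fine-tuning loop the same expression would be written in an automatic-differentiation framework so that it can be minimized by back-propagation; NumPy is used here only to keep the arithmetic visible.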

Title (translated from the Chinese): Deep transfer learning for conditional shift in regression

