Information Fusion (IF 18.6), Pub Date: 2021-05-25, DOI: 10.1016/j.inffus.2021.05.009. Giulia Vilone, Luca Longo


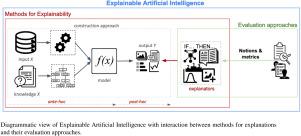

Explainable Artificial Intelligence (XAI) has experienced significant growth over the last few years. This is due to the widespread application of machine learning, particularly deep learning, which has led to the development of highly accurate models that lack explainability and interpretability. A plethora of methods to tackle this problem have been proposed, developed and tested, coupled with several studies attempting to define the concept of explainability and its evaluation. This systematic review contributes to the body of knowledge by clustering all the scientific studies via a hierarchical system that classifies theories and notions related to the concept of explainability and the evaluation approaches for XAI methods. The structure of this hierarchy builds on an exhaustive analysis of existing taxonomies and peer-reviewed scientific material. Findings suggest that scholars have identified numerous notions and requirements that an explanation should meet in order to be easily understandable by end-users and to provide actionable information that can inform decision making. They have also suggested various approaches to assess to what degree machine-generated explanations meet these demands. Overall, these approaches can be clustered into human-centred evaluations and evaluations with more objective metrics. However, despite the vast body of knowledge developed around the concept of explainability, there is no general consensus among scholars on how an explanation should be defined, or on how its validity and reliability should be assessed. Finally, this review concludes by critically discussing these gaps and limitations, and it defines future research directions with explainability as the starting component of any artificially intelligent system.

Title: Notions of explainability and evaluation approaches for explainable artificial intelligence
