Artificial Intelligence (IF 14.4), Pub Date: 2021-05-12, DOI: 10.1016/j.artint.2021.103525. Richard Dazeley, Peter Vamplew, Cameron Foale, Charlotte Young, Sunil Aryal, Francisco Cruz

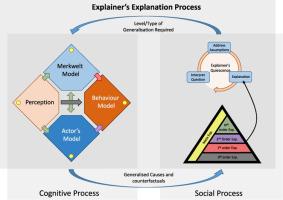

Over the last few years there has been rapid research growth into eXplainable Artificial Intelligence (XAI) and the closely aligned Interpretable Machine Learning (IML). Drivers for this growth include recent legislative changes and increased investments by industry and governments, along with increased concern from the general public. People are affected by autonomous decisions every day and the public need to understand the decision-making process to accept the outcomes. However, the vast majority of the applications of XAI/IML are focused on providing low-level ‘narrow’ explanations of how an individual decision was reached based on a particular datum. While important, these explanations rarely provide insights into an agent's: beliefs and motivations; hypotheses of other (human, animal or AI) agents' intentions; interpretation of external cultural expectations; or, processes used to generate its own explanation. Yet all of these factors, we propose, are essential to providing the explanatory depth that people require to accept and trust the AI's decision-making. This paper aims to define levels of explanation and describe how they can be integrated to create a human-aligned conversational explanation system. In so doing, this paper will survey current approaches and discuss the integration of different technologies to achieve these levels with Broad eXplainable Artificial Intelligence (Broad-XAI), and thereby move towards high-level ‘strong’ explanations.

Title: Levels of explainable artificial intelligence for human-aligned conversational explanation
