

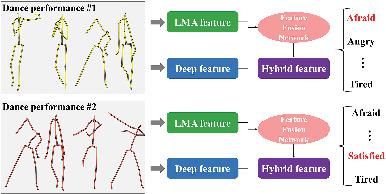

EmoDescriptor: A hybrid feature for emotional classification in dance movements

Computer Animation and Virtual Worlds (IF 1.1), Pub Date: 2021-04-10, DOI: 10.1002/cav.1996

Authors: Junxuan Bai (1, 2), Rong Dai (1), Ju Dai (2), Junjun Pan (1, 2)



Similar to language and music, dance performances provide an effective way to express human emotions. With the growing abundance of motion capture data, content-based motion retrieval and classification have been extensively investigated. Although researchers have attempted to interpret body language in terms of human emotions, progress has been limited by the scarcity of 3D motion databases annotated with emotion labels. This article proposes a hybrid feature for emotional classification in dance performances. The hybrid feature is composed of an explicit feature and a deep feature. The explicit feature is calculated based on Laban movement analysis, which considers the body, effort, shape, and space properties of a movement. The deep feature is obtained from the latent representation of a 1D convolutional autoencoder. Finally, we present an elaborate feature fusion network that combines the two into a hybrid feature that is almost linearly separable. Extensive experiments demonstrate that our hybrid feature outperforms either separate feature for emotional classification in dance performances.
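The abstract does not give the formulas behind the explicit feature. As a rough illustration only, the sketch below computes one hypothetical scalar per Laban component (body, effort, shape, space) from a clip of 3D joint positions. The joint layout, the function names `laban_style_features` and `hybrid_feature`, and every formula are assumptions, not the authors' definitions. The sketch also joins the explicit and deep vectors by plain concatenation. That is a simplification: the article learns the combination with a feature fusion network instead.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Frame = List[Vec3]  # one 3D position per joint (hypothetical layout)


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _norm(v: Sequence[float]) -> float:
    return math.sqrt(sum(c * c for c in v))


def laban_style_features(frames: Sequence[Frame], fps: float,
                         root: int = 0,
                         hands: Tuple[int, int] = (1, 2)) -> List[float]:
    """Return [body, effort, shape, space] for one clip.

    Hypothetical, simplified descriptors inspired by Laban movement
    analysis; they are NOT the formulas used in the article.
    """
    if len(frames) < 3:
        raise ValueError("need at least 3 frames")
    dt = 1.0 / fps

    # Body: mean hand-to-root distance (how extended the arms are).
    body = sum(_norm(_sub(f[h], f[root])) for f in frames for h in hands)
    body /= len(frames) * len(hands)

    # Effort: mean joint acceleration magnitude from second differences.
    accs = []
    for t in range(1, len(frames) - 1):
        for j in range(len(frames[t])):
            p0, p1, p2 = frames[t - 1][j], frames[t][j], frames[t + 1][j]
            acc = [(p2[k] - 2.0 * p1[k] + p0[k]) / (dt * dt) for k in range(3)]
            accs.append(_norm(acc))
    effort = sum(accs) / len(accs)

    # Shape: mean axis-aligned bounding-box volume of the pose.
    vols = []
    for f in frames:
        xs, ys, zs = zip(*f)
        vols.append((max(xs) - min(xs)) * (max(ys) - min(ys)) * (max(zs) - min(zs)))
    shape = sum(vols) / len(vols)

    # Space: directness of the root path, i.e. net displacement divided
    # by path length (1.0 means a straight line).
    path = sum(_norm(_sub(frames[t][root], frames[t - 1][root]))
               for t in range(1, len(frames)))
    net = _norm(_sub(frames[-1][root], frames[0][root]))
    space = net / path if path > 0 else 0.0

    return [body, effort, shape, space]


def hybrid_feature(explicit: Sequence[float], deep: Sequence[float]) -> List[float]:
    """Concatenate explicit and deep features (simplified stand-in for a fusion network)."""
    return list(explicit) + list(deep)
```

For example, a three-joint skeleton that translates at constant speed along a straight line yields an effort of 0.0 (no acceleration) and a directness of 1.0.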


Updated: 2021-04-10


