

Human posture tracking with flexible sensors for motion recognition

Computer Animation and Virtual Worlds (IF 1.1), Pub Date: 2021-04-06, DOI: 10.1002/cav.1993

Zhiyong Chen¹, Xiaowei Chen¹, Yong Ma², Shihui Guo¹, Yipeng Qin³, Minghong Liao¹



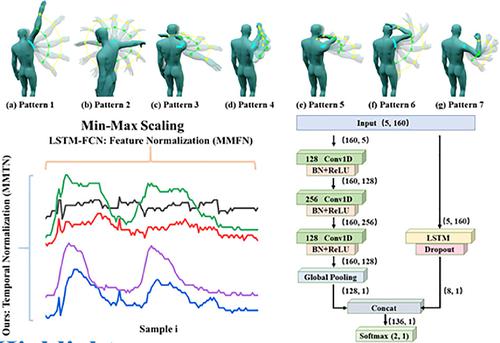

Integrating conventional clothing with flexible electronics is a promising route toward a next-generation computing platform. However, user authentication on this novel platform remains underexplored. This work uses flexible sensors to track human posture and uses the captured movement patterns to authenticate users. We capture human movement patterns with four stretch sensors around the shoulder and one at the elbow. We introduce a long short-term memory fully convolutional network (LSTM-FCN) that takes noisy, sparse sensor data directly as input and verifies whether it is consistent with the user's predefined movement patterns. The method can identify a user by matching movement patterns even in the presence of large intrapersonal variations. LSTM-FCN reaches an authentication accuracy of 98.0%, which is 10.7% and 6.5% higher than that of dynamic time warping (DTW) and dependent dynamic time warping (DTW-D), respectively.
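The abstract benchmarks LSTM-FCN against two dynamic time warping baselines. As a rough illustration of what such a template-matching baseline could look like for five-channel stretch-sensor recordings, the sketch below implements the common multivariate DTW variants: an independent form (each sensor channel warped separately, distances summed) and a dependent form (one warping path shared by all channels). Everything here is an assumption for illustration, not the paper's configuration: the function names `dtw_independent`, `dtw_dependent`, and `verify`, the squared-Euclidean local cost, the nearest-template acceptance rule, and the `threshold` parameter are all hypothetical. It does not implement LSTM-FCN.

```python
import math
from typing import Callable, Sequence

Frame = Sequence[float]   # one reading from all stretch sensors (5 channels assumed)
Series = Sequence[Frame]  # a recorded movement, shape (T, channels)


def _dtw(n: int, m: int, cost: Callable[[int, int], float]) -> float:
    """Classic O(n*m) dynamic-programming DTW for a given local cost function."""
    d = [[math.inf] * (m + 1) for _ in range(n + 1)]
    d[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            # Extend the cheapest of the three allowed predecessor alignments.
            d[i][j] = cost(i - 1, j - 1) + min(d[i - 1][j], d[i][j - 1], d[i - 1][j - 1])
    return d[n][m]


def dtw_dependent(x: Series, y: Series) -> float:
    """Dependent DTW: all channels share one warping path.

    Local cost is the squared Euclidean distance between whole frames (assumption).
    """
    return _dtw(len(x), len(y),
                lambda i, j: sum((p - q) ** 2 for p, q in zip(x[i], y[j])))


def dtw_independent(x: Series, y: Series) -> float:
    """Independent DTW: each channel is warped on its own; distances are summed."""
    total = 0.0
    for c in range(len(x[0])):
        total += _dtw(len(x), len(y), lambda i, j, c=c: (x[i][c] - y[j][c]) ** 2)
    return total


def verify(sample: Series, templates: Sequence[Series], threshold: float,
           distance: Callable[[Series, Series], float] = dtw_dependent) -> bool:
    """Hypothetical decision rule: accept if the nearest enrolled template is close enough."""
    return min(distance(sample, t) for t in templates) <= threshold


if __name__ == "__main__":
    enrolled = [[0.0] * 5, [1.0] * 5, [2.0] * 5]                # user's template
    slower = [[0.0] * 5, [0.0] * 5, [1.0] * 5, [2.0] * 5]       # same motion, time-stretched
    other = [[5.0] * 5] * 3                                      # a different motion
    print(verify(slower, [enrolled], threshold=1.0))  # prints True
    print(verify(other, [enrolled], threshold=1.0))   # prints False
```

Because DTW absorbs differences in timing, the time-stretched repetition aligns with the template at zero cost, which is why such baselines tolerate some intrapersonal variation in speed; the threshold itself would have to be tuned on enrollment data.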


Updated: 2021-04-06


Beijing Public Security Bureau Filing No. 11010802027423
