Journal of Biomedical Informatics (IF 4.5), Pub Date: 2020-12-10, DOI: 10.1016/j.jbi.2020.103655
Aniek F Markus, Jan A Kors, Peter R Rijnbeek

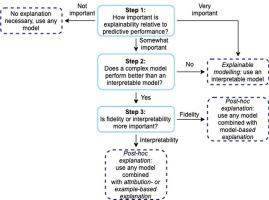

Artificial intelligence (AI) has huge potential to improve the health and well-being of people, but adoption in clinical practice is still limited. Lack of transparency has been identified as one of the main barriers to implementation, as clinicians need to be confident that the AI system can be trusted. Explainable AI has the potential to overcome this issue and can be a step towards trustworthy AI. In this paper we review the recent literature to provide guidance to researchers and practitioners on the design of explainable AI systems for the health care domain, and to contribute to the formalization of the field of explainable AI. We argue that the reason for demanding explainability determines what should be explained, as this determines the relative importance of the properties of explainability (i.e. interpretability and fidelity). Based on this, we propose a framework to guide the choice between classes of explainable AI methods (explainable modelling versus post-hoc explanation; model-based, attribution-based, or example-based explanations; global and local explanations). Furthermore, we find that quantitative evaluation metrics, which are important for objective, standardized evaluation, are still lacking for some properties (e.g. clarity) and types of explanations (e.g. example-based methods). We conclude that explainable modelling can contribute to trustworthy AI, but that the benefits of explainability still need to be proven in practice and that complementary measures might be needed to create trustworthy AI in health care (e.g. reporting data quality, performing extensive (external) validation, and regulation).

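The role of explainability in creating trustworthy artificial intelligence for health care: A comprehensive survey of the terminology, design choices, and evaluation strategies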

人工智能(AI)具有改善人们健康和福祉的巨大潜力,但在临床实践中的采用仍然有限。缺乏透明度被认为是实施的主要障碍之一,因为临床医生应该相信AI系统可以信任。可解释的AI可以克服这个问题,并且可以迈向可信赖的AI。在本文中,我们回顾了最近的文献,以为研究人员和从业人员提供有关医疗领域可解释AI系统设计的指南,并有助于可解释AI领域的形式化。我们认为要求可解释性的原因决定了应解释的内容,因为这决定了可解释性(即可解释性和保真度)的相对重要性。基于此,我们提出了一个框架来指导可解释的AI方法类别之间的选择(可解释的建模与事后解释;基于模型的解释,基于属性的解释或基于示例的解释;全局和局部解释)。此外,我们发现对于某些客观属性(例如,清晰度)和解释类型(例如,基于示例的方法),仍然缺乏对于客观标准化评估非常重要的定量评估指标。我们得出结论,可解释的建模可以促进可信赖的AI,但是可解释性的好处仍然需要在实践中得到证明,并且可能需要采取补充措施来在医疗保健中创建可信赖的AI(例如,报告数据质量,执行广泛的(外部)验证以及规)。


京公网安备 11010802027423号