Journal of Biomedical Informatics (IF 4.5), Pub Date: 2020-11-18, DOI: 10.1016/j.jbi.2020.103621. Stephen R Pfohl¹, Agata Foryciarz², Nigam H Shah¹


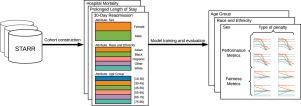

The use of machine learning to guide clinical decision making has the potential to worsen existing health disparities. Several recent works frame the problem as one of algorithmic fairness, a framework that has attracted considerable attention and criticism. However, the appropriateness of this framework is unclear for both ethical and technical reasons. The technical reasons include trade-offs between measures of fairness and model performance, and these trade-offs are not well understood for predictive models of clinical outcomes. To inform the ongoing debate, we conduct an empirical study to characterize the impact of penalizing group fairness violations on an array of measures of model performance and group fairness. We repeat the analysis across multiple observational healthcare databases, clinical outcomes, and sensitive attributes. We find that procedures that penalize differences between the distributions of predictions across groups induce nearly universal degradation of multiple performance metrics within groups. On examining the secondary impact of these procedures, we observe that their effect on measures of fairness in calibration and ranking is heterogeneous across experimental conditions. Beyond the reported trade-offs, we emphasize that analyses of algorithmic fairness in healthcare lack the contextual grounding and causal awareness necessary to reason about the mechanisms that lead to health disparities, as well as about the potential of algorithmic fairness methods to counteract those mechanisms. In light of these limitations, we encourage researchers building predictive models for clinical use to step outside the algorithmic fairness frame and engage critically with the broader sociotechnical context surrounding the use of machine learning in healthcare.
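The abstract does not spell out which penalties were used. As a rough illustration of what "penalizing differences between the distributions of predictions across groups" can look like, the NumPy sketch below adds a squared gap in mean predicted risk between two groups (a demographic-parity-style penalty) to the logistic log-loss. It then reports within-group AUC and the remaining gap. The penalty form, the weight `lam`, the synthetic cohort, and every function name here are hypothetical choices made for illustration. They are not the authors' procedure.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_penalized_logreg(X, y, group, lam=0.0, lr=0.5, n_iter=2000):
    """Full-batch gradient descent on
    mean log-loss + lam * (mean risk in group 1 - mean risk in group 0) ** 2.

    The squared gap in mean predicted risk is a hypothetical, illustrative
    stand-in for penalties on differences between prediction distributions.
    """
    n, d = X.shape
    w, b = np.zeros(d), 0.0
    g1 = group == 1
    # d(gap)/d(p_i): +1/n1 for group-1 rows, -1/n0 for group-0 rows
    dgap_dp = np.where(g1, 1.0 / g1.sum(), -1.0 / (~g1).sum())
    for _ in range(n_iter):
        p = sigmoid(X @ w + b)
        gap = p[g1].mean() - p[~g1].mean()
        # Gradient w.r.t. logits: log-loss term plus penalty term (chain rule via p(1-p))
        grad_z = (p - y) / n + lam * 2.0 * gap * dgap_dp * p * (1.0 - p)
        w -= lr * (X.T @ grad_z)
        b -= lr * grad_z.sum()
    return w, b


def auc(y, score):
    """Rank-based AUC (Mann-Whitney U); assumes no tied scores."""
    ranks = np.empty(len(score))
    ranks[np.argsort(score)] = np.arange(1, len(score) + 1)
    n_pos = y.sum()
    n_neg = len(y) - n_pos
    return (ranks[y == 1].sum() - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


def group_report(X, y, group, w, b):
    """Per-group AUC, mean predicted risk, and observed event rate."""
    p = sigmoid(X @ w + b)
    out = {}
    for g in (0, 1):
        m = group == g
        out[g] = {"auc": auc(y[m], p[m]), "mean_pred": p[m].mean(), "event_rate": y[m].mean()}
    out["gap"] = out[1]["mean_pred"] - out[0]["mean_pred"]
    return out


# Hypothetical synthetic cohort: the first feature is shifted by group
# membership, so the outcome rate differs across groups.
rng = np.random.default_rng(0)
n = 4000
group = rng.integers(0, 2, size=n)
X = np.column_stack([rng.normal(size=n) + 0.8 * group, rng.normal(size=n)])
y = (rng.random(n) < sigmoid(X @ np.array([1.0, 0.5]) - 0.5)).astype(int)

base = group_report(X, y, group, *fit_penalized_logreg(X, y, group, lam=0.0))
fair = group_report(X, y, group, *fit_penalized_logreg(X, y, group, lam=10.0))
for name, r in (("unpenalized", base), ("penalized", fair)):
    print(name, f"gap={r['gap']:.3f}", f"auc0={r[0]['auc']:.3f}", f"auc1={r[1]['auc']:.3f}")
```

Raising `lam` trades likelihood for a smaller gap in mean predicted risk. In this toy setup, the group-shifted feature is also predictive within each group. Shrinking its weight to close the gap can therefore lower within-group AUC, which is the kind of trade-off the study measures empirically on real clinical data.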

Title: An Empirical Characterization of Fair Machine Learning for Clinical Risk Prediction

