Computer Vision and Image Understanding (IF 4.5), Pub Date: 2020-09-23, DOI: 10.1016/j.cviu.2020.103111

Utku Ozbulak, Baptist Vandersmissen, Azarakhsh Jalalvand, Ivo Couckuyt, Arnout Van Messem, Wesley De Neve


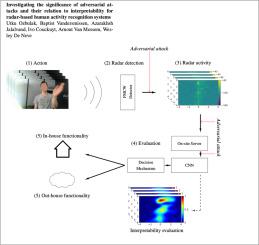

Given their substantial success in addressing a wide range of computer vision challenges, Convolutional Neural Networks (CNNs) are increasingly being used in smart home applications, many of which rely on the automatic recognition of human activities. In this context, low-power radar devices have recently gained popularity as recording sensors, since they mitigate a number of the privacy concerns that arise when conventional video cameras are used. Another concern often cited when designing smart home applications is their resilience against cyberattacks. For instance, it is well known that CNNs operating on images are vulnerable to adversarial examples: maliciously crafted data points that force machine learning models to produce wrong classifications at test time. In this paper, we investigate the vulnerability to adversarial attacks of radar-based CNNs that have been designed to recognize human gestures. Through experiments with four distinct threat models, we show that radar-based CNNs are susceptible to both white-box and black-box adversarial attacks. We also expose an extreme adversarial attack case in which the prediction of a radar-based CNN can be changed by perturbing only the padding of the input, without touching the frames in which the action itself occurs. Moreover, we observe that gradient-based attacks do not perturb the input randomly but concentrate the perturbation on important features of the input data. We highlight these features using Grad-CAM, a popular neural network interpretability method, thereby showing the connection between adversarial perturbation and prediction interpretability.
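The padding-only attack case can be illustrated with a minimal sketch. It assumes a toy linear softmax classifier as a stand-in for the radar-based CNNs, an input laid out as frames × features, and the single-step fast gradient sign method (FGSM) as the gradient-based attack; none of these choices are taken from the paper, and all function names (`input_gradient`, `padding_only_fgsm`, etc.) and the frame layout are hypothetical. The idea shown is only that the perturbation is derived from the loss gradient with respect to the input and then masked so that only padded frames change.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D logit vector."""
    e = np.exp(z - z.max())
    return e / e.sum()


def cross_entropy(W: np.ndarray, b: np.ndarray, x: np.ndarray, label: int) -> float:
    """Loss of a hypothetical toy linear classifier with logits W @ x.ravel() + b."""
    p = softmax(W @ x.ravel() + b)
    return float(-np.log(p[label]))


def input_gradient(W: np.ndarray, b: np.ndarray, x: np.ndarray, label: int) -> np.ndarray:
    """Gradient of the cross-entropy loss with respect to the input x."""
    p = softmax(W @ x.ravel() + b)
    p[label] -= 1.0  # dL/dz = softmax(z) - one_hot(label)
    return (W.T @ p).reshape(x.shape)


def padding_only_fgsm(W, b, x, label, padding_mask, epsilon):
    """One FGSM step whose perturbation is confined to the padding frames.

    padding_mask has shape (num_frames,) and is True for padding frames;
    frames containing the gesture itself are left untouched (assumed layout).
    """
    grad = input_gradient(W, b, x, label)
    delta = epsilon * np.sign(grad)
    delta[~padding_mask, :] = 0.0  # never perturb the action frames
    return x + delta


# Usage on random toy data (hypothetical sizes, not the paper's setup).
rng = np.random.default_rng(0)
num_frames, num_features, num_classes = 10, 8, 3
W = rng.normal(size=(num_classes, num_frames * num_features))
b = np.zeros(num_classes)
x = np.zeros((num_frames, num_features))          # zero padding everywhere...
x[3:7] = rng.normal(size=(4, num_features))       # ...except frames 3..6 (the "gesture")
padding_mask = np.ones(num_frames, dtype=bool)
padding_mask[3:7] = False

label = int(np.argmax(W @ x.ravel() + b))         # attack the model's own prediction
x_adv = padding_only_fgsm(W, b, x, label, padding_mask, epsilon=0.5)
print(cross_entropy(W, b, x, label), cross_entropy(W, b, x_adv, label))
```

Because the toy model's loss is convex in the input, this masked step cannot lower the loss, but a single step is not guaranteed to flip the prediction; attacks on real CNNs typically iterate such steps.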

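Investigating the significance of adversarial attacks and their relation to interpretability for radar-based human activity recognition systems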


