
Multi‐feature fusion of deep networks for mitosis segmentation in histological images

International Journal of Imaging Systems and Technology (IF 3.3), Pub Date: 2020-09-18, DOI: 10.1002/ima.22487

Yuan Zhang 1, Jin Chen 1, Xianzhu Pan 1



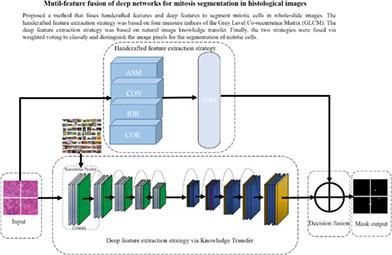

Mitotic cell detection in pathological images is significant for predicting the malignancy of tumors and for the intelligent segmentation of these cells. Reducing the human error introduced when pathologists read the images, while enabling fast detection through high computing power, remains a very challenging task. In this study, we proposed a method that fuses handcrafted features and deep features to segment mitotic cells in whole‐slide images. The handcrafted feature extraction strategy was based on four measure indices of the Gray Level Co‐occurrence Matrix (GLCM). The deep feature extraction strategy was based on knowledge transfer from natural images. Finally, the two strategies were fused to classify the image pixels for the segmentation of mitotic cells. We used the AMIDA13 dataset and pathological images collected by the Department of Pathology of Anhui No. 2 Provincial People's Hospital as the experimental dataset. We compared the Area Under the Curve (AUC) of the Receiver Operating Characteristic (ROC) obtained with the handcrafted feature model, the improved deep feature model with knowledge transfer, the classic U‐NET model, and the proposed multi‐feature fusion model. The results showed that the AUC of the proposed method improved on the classic U‐NET model by 0.07 on the test dataset and by 0.05 on the validation dataset; the method also achieved the best segmentation performance and detected most of the true‐positive cells, representing a breakthrough for clinical application. The experiments also indicated that the staining uniformity of pathological tissue affected model performance.
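To make the handcrafted-feature step concrete, the following is a minimal sketch of how a Gray Level Co‐occurrence Matrix and four texture measures can be computed for one image patch. It is an illustration under stated assumptions, not the authors' implementation: the abstract does not name the four indices, so the sketch assumes four commonly used ones (contrast, angular second moment, homogeneity, and correlation), and the function names `glcm` and `glcm_features`, the single horizontal offset, and the 4-level toy patch are all hypothetical choices.

```python
import numpy as np


def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalised co-occurrence matrix for one pixel offset.

    `image` must contain integer gray levels in the range [0, levels).
    """
    image = np.asarray(image, dtype=np.intp)
    h, w = image.shape
    # Reference pixels and their neighbours displaced by (dy, dx).
    ref = image[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    nbr = image[max(0, dy):h - max(0, -dy), max(0, dx):w - max(0, -dx)]
    counts = np.zeros((levels, levels), dtype=np.float64)
    # Accumulate one count per (reference level, neighbour level) pair.
    np.add.at(counts, (ref.ravel(), nbr.ravel()), 1.0)
    counts += counts.T  # count every pair in both directions
    return counts / counts.sum()


def glcm_features(p):
    """Four texture measures of a normalised GLCM (assumed choice of indices)."""
    i, j = np.indices(p.shape)
    contrast = np.sum(p * (i - j) ** 2)
    asm = np.sum(p ** 2)  # angular second moment
    homogeneity = np.sum(p / (1.0 + (i - j) ** 2))
    mu_i, mu_j = np.sum(i * p), np.sum(j * p)
    sd_i = np.sqrt(np.sum(p * (i - mu_i) ** 2))
    sd_j = np.sqrt(np.sum(p * (j - mu_j) ** 2))
    if sd_i * sd_j > 0:
        correlation = np.sum(p * (i - mu_i) * (j - mu_j)) / (sd_i * sd_j)
    else:
        correlation = 1.0  # convention for a perfectly uniform patch
    return {
        "contrast": float(contrast),
        "asm": float(asm),
        "homogeneity": float(homogeneity),
        "correlation": float(correlation),
    }


# Toy 4-level patch; real patches would be quantised gray-scale crops.
patch = np.array([[0, 0, 1, 1],
                  [0, 0, 1, 1],
                  [0, 2, 2, 2],
                  [2, 2, 3, 3]])
features = glcm_features(glcm(patch, levels=4))
print(features)
```

In a fusion setting such as the one described, a per-patch feature vector like this could be concatenated with deep features before pixel classification; how the paper actually combines them is not specified in the abstract.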


Updated: 2020-09-18

