Mapping of land cover with open‐source software and ultra‐high‐resolution imagery acquired with unmanned aerial vehicles

Remote Sensing in Ecology and Conservation (IF 5.5) · Pub Date: 2020-01-13 · DOI: 10.1002/rse2.144

Ned Horning¹, Erica Fleishman²,³, Peter J. Ersts¹, Frank A. Fogarty², Martha Wohlfeil Zillig²

Affiliation

|

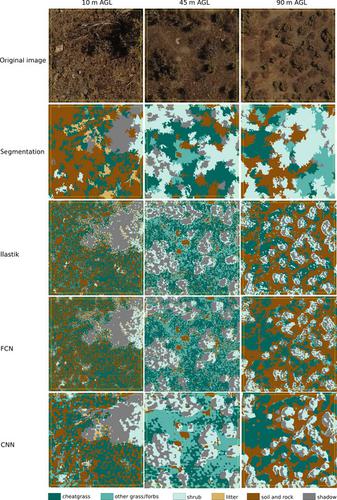

The use of unmanned aerial vehicles (UAVs) to map and monitor the environment has increased sharply in the last few years. Many individuals and organizations have purchased consumer‐grade UAVs, and commonly acquire aerial photographs to map land cover. The resulting ultra‐high‐resolution (sub‐decimeter‐resolution) imagery has high information content, but automating the extraction of this information to create accurate, wall‐to‐wall land‐cover maps is quite difficult. We introduce image‐processing workflows that are based on open‐source software and can be used to create land‐cover maps from ultra‐high‐resolution aerial imagery. We compared four machine‐learning workflows for classifying images. Two workflows were based on random forest algorithms. Of these, one used a pixel‐by‐pixel approach available in ilastik, and the other used image segments and was implemented with R and the Orfeo ToolBox. The other two workflows used fully connected neural networks and convolutional neural networks implemented with Nenetic. We applied the four workflows to aerial photographs acquired in the Great Basin (western USA) at flying heights of 10 m, 45 m and 90 m above ground level. Our focal cover type was cheatgrass (Bromus tectorum), a non‐native invasive grass that changes regional fire dynamics. The most accurate workflow for classifying ultra‐high‐resolution imagery depends on diverse factors that are influenced by image resolution and land‐cover characteristics, such as contrast, landscape patterns and the spectral texture of the land‐cover types being classified. For our application, the ilastik workflow yielded the highest overall accuracy (0.82–0.89) as assessed by pixel‐based accuracy.
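The accuracy figures above (0.82–0.89) are pixel-based overall accuracy. The abstract does not describe the authors' validation procedure, so the following is only a minimal sketch of how such a figure is commonly computed: tabulate validation pixels in a confusion matrix (reference class by mapped class) and divide the diagonal by the total. The class names and pixel labels are hypothetical and are not data from the paper.

```python
from typing import Hashable, List, Sequence


def confusion_matrix(
    reference: Sequence[Hashable],
    predicted: Sequence[Hashable],
    classes: Sequence[Hashable],
) -> List[List[int]]:
    """Count validation pixels by (reference class, mapped class).

    Row i is reference class classes[i]; column j is mapped class classes[j].
    """
    if len(reference) != len(predicted):
        raise ValueError("reference and predicted must label the same pixels")
    index = {label: k for k, label in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for ref, pred in zip(reference, predicted):
        matrix[index[ref]][index[pred]] += 1
    return matrix


def overall_accuracy(matrix: Sequence[Sequence[int]]) -> float:
    """Share of pixels on the diagonal, i.e. mapped class equals reference class."""
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix contains no pixels")
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


if __name__ == "__main__":
    # Hypothetical classes and labels for eight validation pixels (illustration only).
    classes = ["cheatgrass", "shrub", "bare"]
    reference = ["cheatgrass", "cheatgrass", "shrub", "bare",
                 "bare", "shrub", "cheatgrass", "bare"]
    predicted = ["cheatgrass", "shrub", "shrub", "bare",
                 "cheatgrass", "shrub", "cheatgrass", "bare"]
    m = confusion_matrix(reference, predicted, classes)
    print(m)                    # [[2, 1, 0], [0, 2, 0], [1, 0, 2]]
    print(overall_accuracy(m))  # 0.75
```

Per-class measures such as producer's accuracy (diagonal over row total) and user's accuracy (diagonal over column total) come from the same matrix; these are often worth reporting alongside overall accuracy when a focal class such as cheatgrass covers only part of the scene.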

Updated: 2020-01-13

Beijing Public Security Network Registration No. 11010802027423
