
Long short-term memory-based neural decoding of object categories evoked by natural images.

Human Brain Mapping (IF 4.8), Pub Date: 2020-07-10, DOI: 10.1002/hbm.25136. Wei Huang¹, Hongmei Yan¹, Chong Wang¹, Jiyi Li¹, Xiaoqing Yang¹, Liang Li¹, Zhentao Zuo², Jiang Zhang³, Huafu Chen¹

Human Brain Mapping ( IF 4.8 ) Pub Date : 2020-07-10 , DOI: 10.1002/hbm.25136 Wei Huang 1 , Hongmei Yan 1 , Chong Wang 1 , Jiyi Li 1 , Xiaoqing Yang 1 , Liang Li 1 , Zhentao Zuo 2 , Jiang Zhang 3 , Huafu Chen 1

Affiliation

|

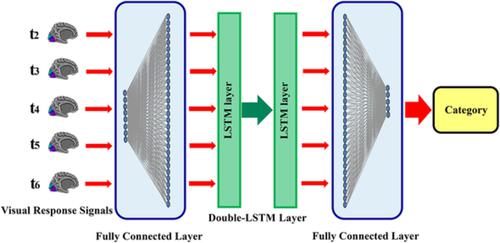

Visual perceptual decoding is an important and challenging topic in cognitive neuroscience, and the key to decoding is building a model that maps visual response signals to visual contents. Most previous studies decoded object categories from peak response signals. However, brain activity measured by functional magnetic resonance imaging (fMRI) is a time-dependent dynamic process, so the peak signal alone cannot fully represent the whole response, which may limit decoding performance. Here, we propose a decoding model based on a long short-term memory (LSTM) network that decodes five object categories from multitime response signals evoked by natural images. Averaged across the five subjects, the decoding accuracy using multitime (2–6 s) response signals is 0.540, significantly higher than that using peak signals (6 s; accuracy: 0.492; p < .05). We further examine the decoding performance for the five object categories in depth across different durations, decoding methods, and visual areas. The analysis of durations and decoding methods shows that the LSTM-based model, with its ability to model sequences, can fit the time dependence of the multitime visual response signals and thereby achieve higher decoding performance. The comparison across visual areas shows that the higher visual cortex (VC) contains more of the semantic category information needed for visual perceptual decoding than the lower VC.
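To make the contrast between peak and multitime decoding concrete, here is a minimal NumPy sketch of the general idea. It is not the authors' implementation: an untrained single-layer LSTM reads the voxel response pattern at each time point, and a softmax readout on the final hidden state gives probabilities for five categories. Everything specific here is a hypothetical assumption, including the class name `LSTMDecoder`, the ROI size of 200 voxels, the hidden size, the random weights, and one sample per second from 2 to 6 s. Training (for example, cross-entropy loss with backpropagation through time) is omitted.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class LSTMDecoder:
    """Hypothetical single-layer LSTM with a softmax readout (random, untrained weights)."""

    def __init__(self, n_voxels, n_hidden, n_classes=5, seed=0):
        rng = np.random.default_rng(seed)
        scale = 0.1
        self.n_hidden = n_hidden
        # Stacked weights for the input, forget, candidate and output gates.
        self.W = rng.normal(0.0, scale, (4 * n_hidden, n_voxels))
        self.U = rng.normal(0.0, scale, (4 * n_hidden, n_hidden))
        self.b = np.zeros(4 * n_hidden)
        # Linear readout from the last hidden state to category scores.
        self.V = rng.normal(0.0, scale, (n_classes, n_hidden))
        self.b_out = np.zeros(n_classes)

    def forward(self, x_seq):
        """x_seq: array of shape (T, n_voxels), one row per time point after onset."""
        H = self.n_hidden
        h = np.zeros(H)  # hidden state
        c = np.zeros(H)  # cell state carries information across time points
        for x_t in x_seq:
            z = self.W @ x_t + self.U @ h + self.b
            i = sigmoid(z[:H])            # input gate
            f = sigmoid(z[H:2 * H])       # forget gate
            g = np.tanh(z[2 * H:3 * H])   # candidate cell update
            o = sigmoid(z[3 * H:])        # output gate
            c = f * c + i * g
            h = o * np.tanh(c)
        logits = self.V @ h + self.b_out
        logits = logits - logits.max()    # numerical stability for softmax
        p = np.exp(logits)
        return p / p.sum()                # probabilities over the five categories


rng = np.random.default_rng(1)
n_voxels = 200                                # hypothetical ROI size
multitime = rng.normal(size=(5, n_voxels))    # time points 2-6 s (assumed 1 s spacing)
peak_only = multitime[-1:]                    # only the 6 s time point, shape (1, n_voxels)

model = LSTMDecoder(n_voxels, n_hidden=32)
p_multi = model.forward(multitime)
p_peak = model.forward(peak_only)
print("multitime prediction:", int(p_multi.argmax()))
print("peak-only prediction:", int(p_peak.argmax()))
```

In this sketch the peak-signal baseline corresponds to feeding a length-1 sequence (the 6 s pattern only), whereas the multitime decoder lets the recurrent cell state accumulate information across the whole 2–6 s window, which is the time dependence the abstract attributes to the LSTM model.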


Updated: 2020-07-10


Beijing Public Security Internet Filing No. 11010802027423
