
Explainable artificial intelligence and machine learning: A reality rooted perspective

Frank Emmert‐Streib, Olli Yli‐Harja, Matthias Dehmer

WIREs Data Mining and Knowledge Discovery (IF 7.8), Pub Date: 2020-06-22, DOI: 10.1002/widm.1368



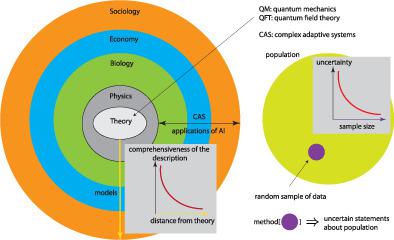

Owing to technological progress, big data are now generated in nearly all fields of science. However, the analysis of such data poses vast challenges. One of these challenges relates to the explainability of methods from artificial intelligence (AI) or machine learning. Currently, many such methods, most notably deep learning methods, are nontransparent with respect to their working mechanism and are for this reason called black box models. This has been recognized as a severe problem for a number of fields, including the health sciences and criminal justice, and arguments have been brought forward in favor of an explainable AI (XAI). In this paper, we do not assume the usual perspective of presenting XAI as it should be, but rather discuss what XAI can be. The difference is that we do not present wishful thinking but reality-grounded properties in relation to a scientific theory beyond physics.


Updated: 2020-06-22
