当前位置:

X-MOL 学术

›

Comput. Graph.

›

论文详情

Our official English website, www.x-mol.net, welcomes your feedback! (Note: you will need to create a separate account there.)

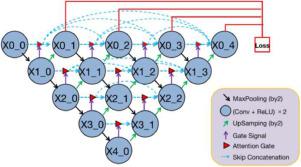

ANU-Net: Attention-based Nested U-Net to exploit full resolution features for medical image segmentation

Computers & Graphics ( IF 2.5 ) Pub Date : 2020-08-01 , DOI: 10.1016/j.cag.2020.05.003 Chen Li , Yusong Tan , Wei Chen , Xin Luo , Yulin He , Yuanming Gao , Fei Li


Abstract Organ cancers have a high mortality rate. To help doctors diagnose and treat organ lesions, an automatic medical image segmentation model is urgently needed, because manual segmentation is time-consuming and error-prone. However, automatically segmenting a target organ from medical images is challenging because organs have uneven and irregular shapes. In this paper, we propose an attention-based nested segmentation network named ANU-Net. The proposed network has a deeply supervised encoder-decoder architecture and redesigned dense skip connections. ANU-Net introduces an attention mechanism between nested convolutional blocks so that features extracted at different levels can be merged with task-related selection. In addition, we redesign a hybrid loss function that combines three kinds of losses to make full use of full-resolution feature information. We evaluated the proposed model on the MICCAI 2017 Liver Tumor Segmentation (LiTS) Challenge dataset and the ISBI 2019 Combined Healthy Abdominal Organ Segmentation (CHAOS) Challenge. ANU-Net achieved very competitive performance on four medical image segmentation tasks.
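The abstract mentions a hybrid loss built from three kinds of losses, applied under deep supervision, but does not name the three terms or their weights. As a rough illustration only, the sketch below assumes the terms are soft Dice, binary cross-entropy, and focal loss with equal weights, and assumes that deep supervision averages the loss over several side outputs. All function names, the choice of terms, and the parameters are hypothetical and are not taken from the paper.

```python
import math

def dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss over flattened probabilities and binary labels."""
    inter = sum(p * t for p, t in zip(pred, target))
    denom = sum(pred) + sum(target)
    return 1.0 - (2.0 * inter + eps) / (denom + eps)

def bce_loss(pred, target, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clamped away from 0 and 1."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)
        total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
    return total / len(pred)

def focal_loss(pred, target, gamma=2.0, eps=1e-7):
    """Mean focal loss; gamma down-weights pixels that are already well classified."""
    total = 0.0
    for p, t in zip(pred, target):
        p = min(max(p, eps), 1.0 - eps)
        pt = p if t == 1 else 1.0 - p  # probability assigned to the true class
        total -= (1.0 - pt) ** gamma * math.log(pt)
    return total / len(pred)

def hybrid_loss(pred, target, weights=(1.0, 1.0, 1.0)):
    """Weighted sum of the three assumed terms (weights are an assumption)."""
    w_dice, w_bce, w_focal = weights
    return (w_dice * dice_loss(pred, target)
            + w_bce * bce_loss(pred, target)
            + w_focal * focal_loss(pred, target))

def deep_supervised_loss(side_outputs, target):
    """Assumed form of deep supervision: average the hybrid loss over all side outputs."""
    return sum(hybrid_loss(out, target) for out in side_outputs) / len(side_outputs)

if __name__ == "__main__":
    target = [1, 0, 1, 0]
    side_outputs = [[0.9, 0.1, 0.8, 0.2], [0.7, 0.3, 0.6, 0.4]]
    print(deep_supervised_loss(side_outputs, target))
```

In a real training setup the same idea would be written with tensor operations in a deep learning framework; plain Python lists are used here only to keep the sketch self-contained.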


Updated: 2020-08-01
