Learning decision trees through Monte Carlo tree search: An empirical evaluation

WIREs Data Mining and Knowledge Discovery (IF 7.8), Pub Date: 2020-02-24, DOI: 10.1002/widm.1348

Cecília Nunes 1, Mathieu De Craene 2, Hélène Langet 1, Oscar Camara 1, Anders Jonsson 1

WIREs Data Mining and Knowledge Discovery ( IF 7.8 ) Pub Date : 2020-02-24 , DOI: 10.1002/widm.1348 Cecília Nunes 1 , Mathieu De Craene 2 , Hélène Langet 1 , Oscar Camara 1 , Anders Jonsson 1

Affiliation

|

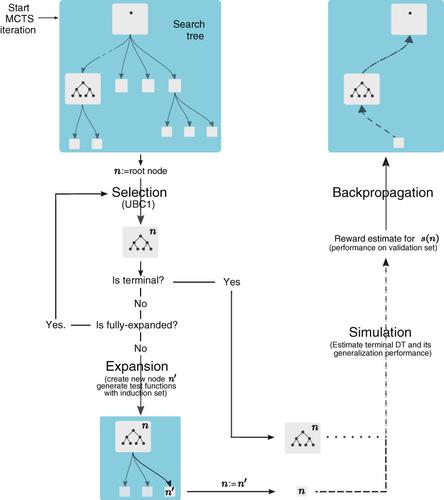

Decision trees (DTs) are a widely used prediction tool, owing to their interpretability. Standard learning methods follow a locally optimal approach that trades off prediction performance for computational efficiency. Such methods can, however, be far from optimal, and it may pay off to spend more computational resources to increase performance. Monte Carlo tree search (MCTS) is an approach to approximate optimal choices in exponentially large search spaces. We propose a DT learning approach based on the Upper Confidence Bound applied to trees (UCT) algorithm, including procedures to expand and explore the space of DTs. To mitigate the computational cost of our method, we employ search pruning strategies that discard some branches of the search tree. The experiments show that the proposed approach outperformed the C4.5 algorithm on 20 out of 31 datasets, with statistically significant improvements in the trade‐off between prediction performance and DT complexity. The approach improved on locally optimal search for datasets with more than 1,000 instances, and for smaller datasets likely arising from complex distributions.
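The abstract does not spell out the selection rule, so the following is only a minimal sketch of the generic UCT (UCB1) child-selection step, not the authors' implementation. The function names, the `(total_reward, visits)` representation of a child, and the exploration constant `c` are all assumptions made for illustration; in a DT-learning setting each child would presumably correspond to a candidate tree expansion and the reward to some validation score.

```python
import math


def uct_score(total_reward, visits, parent_visits, c=math.sqrt(2)):
    """Generic UCB1 score of one child (hypothetical helper, not from the paper)."""
    if visits == 0:
        # Unvisited children are always tried before revisiting others.
        return math.inf
    exploit = total_reward / visits                              # mean reward so far
    explore = c * math.sqrt(math.log(parent_visits) / visits)    # uncertainty bonus
    return exploit + explore


def select_child(children, c=math.sqrt(2)):
    """Return the index of the child with the highest UCT score.

    `children` is a list of (total_reward, visits) pairs; the parent's visit
    count is assumed to equal the sum of its children's visits.
    """
    parent_visits = sum(visits for _, visits in children)
    scores = [uct_score(r, v, parent_visits, c) for r, v in children]
    return max(range(len(scores)), key=scores.__getitem__)
```

With `c = 0` the rule is purely greedy on the mean reward, while a larger `c` favours children that have been visited less often, which is the exploration/exploitation balance UCT is designed to provide.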

Updated: 2020-02-24
