
Bias in data‐driven artificial intelligence systems—An introductory survey

WIREs Data Mining and Knowledge Discovery (IF 7.8), Pub Date: 2020-02-03, DOI: 10.1002/widm.1356. Authors (affiliation indices in parentheses): Eirini Ntoutsi (1); Pavlos Fafalios (2); Ujwal Gadiraju (1); Vasileios Iosifidis (1); Wolfgang Nejdl (1); Maria‐Esther Vidal (3); Salvatore Ruggieri (4); Franco Turini (4); Symeon Papadopoulos (5); Emmanouil Krasanakis (5); Ioannis Kompatsiaris (5); Katharina Kinder‐Kurlanda (6); Claudia Wagner (6); Fariba Karimi (6); Miriam Fernandez (7); Harith Alani (7); Bettina Berendt (8, 9); Tina Kruegel (10); Christian Heinze (10); Klaus Broelemann (11); Gjergji Kasneci (11); Thanassis Tiropanis (12); Steffen Staab (1, 12, 13)

WIREs Data Mining and Knowledge Discovery ( IF 7.8 ) Pub Date : 2020-02-03 , DOI: 10.1002/widm.1356 Eirini Ntoutsi 1 , Pavlos Fafalios 2 , Ujwal Gadiraju 1 , Vasileios Iosifidis 1 , Wolfgang Nejdl 1 , Maria‐Esther Vidal 3 , Salvatore Ruggieri 4 , Franco Turini 4 , Symeon Papadopoulos 5 , Emmanouil Krasanakis 5 , Ioannis Kompatsiaris 5 , Katharina Kinder‐Kurlanda 6 , Claudia Wagner 6 , Fariba Karimi 6 , Miriam Fernandez 7 , Harith Alani 7 , Bettina Berendt 8, 9 , Tina Kruegel 10 , Christian Heinze 10 , Klaus Broelemann 11 , Gjergji Kasneci 11 , Thanassis Tiropanis 12 , Steffen Staab 1, 12, 13

Affiliation

|

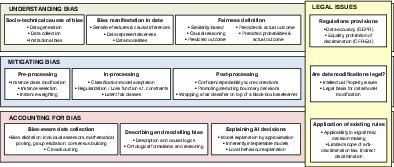

Artificial Intelligence (AI)‐based systems are widely employed nowadays to make decisions that have far‐reaching impact on individuals and society. Their decisions might affect everyone, everywhere, and at any time, raising concerns about potential human rights issues. Therefore, it is necessary to move beyond traditional AI algorithms optimized for predictive performance and to embed ethical and legal principles in their design, training, and deployment, so as to ensure social good while still benefiting from the huge potential of AI technology. The goal of this survey is to provide a broad multidisciplinary overview of the area of bias in AI systems, focusing on technical challenges and solutions, and to suggest new research directions toward approaches well grounded in a legal framework. In this survey, we focus on data‐driven AI, as a large part of AI today is powered by (big) data and powerful machine learning algorithms. Unless otherwise specified, we use the general term bias to describe problems related to the gathering or processing of data that might result in prejudiced decisions on the basis of demographic features such as race, sex, and so forth.

Updated: 2020-02-03

京公网安备 11010802027423号