Comparison of aggregate and individual participant data approaches to meta-analysis of randomised trials: An observational study.

PLOS Medicine (IF 15.8), Pub Date: 2020-01-31, DOI: 10.1371/journal.pmed.1003019
Jayne F Tierney, David J Fisher, Sarah Burdett, Lesley A Stewart, Mahesh K B Parmar

BACKGROUND

It remains unclear when standard systematic reviews and meta-analyses that rely on published aggregate data (AD) can provide robust clinical conclusions. We aimed to compare the results from a large cohort of systematic reviews and meta-analyses based on individual participant data (IPD) with meta-analyses of published AD, to establish when the latter are most likely to be reliable and when the IPD approach might be required.

METHODS AND FINDINGS

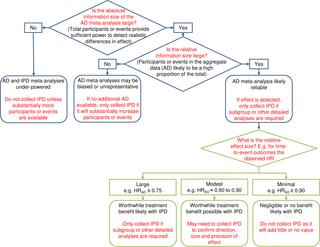

We used 18 cancer systematic reviews that included IPD meta-analyses: all of those completed and published by the Meta-analysis Group of the MRC Clinical Trials Unit from 1991 to 2010. We extracted or estimated hazard ratios (HRs) and standard errors (SEs) for survival from trial reports and compared these with their IPD equivalents at both the trial and meta-analysis level. We also extracted or estimated the number of events. We used paired t tests to assess whether HRs and SEs from published AD differed on average from those from IPD. We assessed agreement, and whether it was associated with trial or meta-analysis characteristics, using the approach of Bland and Altman. The 18 systematic reviews comprised 238 unique trials or trial comparisons, including 37,082 participants. An HR and SE could be generated for 127 trials, representing 53% of the trials and approximately 79% of eligible participants. On average, trial HRs derived from published AD were slightly more in favour of the research interventions than those from IPD (HR_AD to HR_IPD ratio = 0.95, p = 0.007), but the limits of agreement show that, for individual trials, the HRs could deviate substantially. These limits narrowed with an increasing number of participants (p < 0.001) or a greater number (p < 0.001) or proportion (p < 0.001) of events in the AD. On average, meta-analysis HRs from published AD tended slightly to favour the research interventions, whether based on fixed-effect (HR_AD to HR_IPD ratio = 0.97, p = 0.088) or random-effects (HR_AD to HR_IPD ratio = 0.96, p = 0.044) models, but the limits of agreement show that agreement for individual meta-analyses was much more variable. These limits tended to narrow with an increasing number (p = 0.077) or proportion (p = 0.11) of events in the AD.
However, even when the information size of the AD was large, individual meta-analysis HRs could still differ from their IPD equivalents by anywhere from a relative 10% in favour of the research intervention to 5% in favour of control. We used the results to construct a decision tree for assessing whether an AD meta-analysis includes sufficient information, and when estimates of effect are most likely to be reliable. A lack of power at the meta-analysis level may have prevented us from identifying additional factors associated with the reliability of AD meta-analyses, and we cannot be sure that our results are generalisable to all outcomes and effect measures.
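The trial-level comparison described above can be sketched in a few lines. This is a minimal illustration, assuming (as is conventional for hazard ratios) that differences are taken on the log scale, so that the mean difference back-transforms to an average HR_AD to HR_IPD ratio and the Bland-Altman limits of agreement (mean difference plus or minus 1.96 standard deviations) become ratio limits. The helper name `log_ratio_agreement` and the example HRs are hypothetical; they are not data or code from the study.

```python
import math
import statistics


def log_ratio_agreement(hr_ad, hr_ipd, z=1.96):
    """Compare paired trial HRs from published AD and from IPD (hypothetical helper).

    Differences are taken on the log scale, so exp(mean difference) is the
    average HR_AD / HR_IPD ratio and the Bland-Altman limits of agreement
    (mean +/- z * SD of the differences) back-transform to ratio limits.
    """
    if len(hr_ad) != len(hr_ipd) or len(hr_ad) < 2:
        raise ValueError("need at least two paired hazard ratios")
    diffs = [math.log(a) - math.log(b) for a, b in zip(hr_ad, hr_ipd)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD, n - 1 denominator
    # Paired t statistic on the log differences (n - 1 degrees of freedom);
    # undefined when every pair agrees exactly, so return NaN in that case.
    t_stat = mean_d / (sd_d / math.sqrt(n)) if sd_d > 0 else float("nan")
    return {
        "ratio": math.exp(mean_d),
        "lower_limit": math.exp(mean_d - z * sd_d),
        "upper_limit": math.exp(mean_d + z * sd_d),
        "t": t_stat,
        "df": n - 1,
    }


# Illustrative (made-up) HRs for five trials; not results from the study.
hr_ad = [0.80, 0.92, 1.05, 0.70, 0.88]
hr_ipd = [0.85, 0.95, 1.02, 0.78, 0.90]
print(log_ratio_agreement(hr_ad, hr_ipd))
```

A ratio below 1 means the AD estimates favour the research intervention more than the IPD estimates do. For a p-value, the t statistic can be referred to a t distribution with n - 1 degrees of freedom, for example by passing the two lists of log HRs to `scipy.stats.ttest_rel`.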

CONCLUSIONS

In this study we found that HRs from published AD were most likely to agree with those from IPD when the information size was large. Based on these findings, we provide guidance for determining systematically when standard AD meta-analysis will likely generate robust clinical conclusions, and when the IPD approach will add considerable value.
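To make the conclusion concrete, the sketch below computes the two things a reviewer would inspect in a standard AD meta-analysis: the pooled HR and the information size behind it. It assumes the inverse-variance fixed-effect model and the DerSimonian-Laird random-effects estimator, which are common choices but are not confirmed by this abstract as the exact methods used in the study. It does not reproduce the authors' decision tree, whose criteria are not given here, so no thresholds are applied; the helper names `pool_log_hr` and `information_size`, and all inputs, are hypothetical.

```python
import math


def pool_log_hr(log_hrs, ses):
    """Pool trial log HRs by inverse variance (hypothetical helper).

    Returns fixed-effect and DerSimonian-Laird random-effects estimates as
    (HR, SE of log HR) pairs, plus the between-trial variance tau^2.
    """
    if len(log_hrs) != len(ses) or not log_hrs:
        raise ValueError("need matching, non-empty inputs")
    k = len(log_hrs)
    w = [1.0 / se ** 2 for se in ses]
    sum_w = sum(w)
    fe = sum(wi * y for wi, y in zip(w, log_hrs)) / sum_w
    # Cochran's Q and the DerSimonian-Laird moment estimate of tau^2
    q = sum(wi * (y - fe) ** 2 for wi, y in zip(w, log_hrs))
    c = sum_w - sum(wi ** 2 for wi in w) / sum_w
    tau2 = max(0.0, (q - (k - 1)) / c) if k > 1 and c > 0 else 0.0
    w_re = [1.0 / (se ** 2 + tau2) for se in ses]
    re = sum(wi * y for wi, y in zip(w_re, log_hrs)) / sum(w_re)
    return {
        "fixed": (math.exp(fe), math.sqrt(1.0 / sum_w)),
        "random": (math.exp(re), math.sqrt(1.0 / sum(w_re))),
        "tau2": tau2,
    }


def information_size(events_ad, participants_ad, participants_eligible):
    """Absolute (events) and relative (share of eligible participants) information.

    Hypothetical helper; it reports the quantities but applies no thresholds.
    """
    if participants_eligible <= 0:
        raise ValueError("participants_eligible must be positive")
    return {
        "events": sum(events_ad),
        "participant_coverage": sum(participants_ad) / participants_eligible,
    }


# Illustrative (made-up) inputs for three trials; not data from the study.
log_hrs = [math.log(hr) for hr in (0.80, 0.92, 0.75)]
ses = [0.12, 0.09, 0.15]
print(pool_log_hr(log_hrs, ses))
print(information_size([210, 340, 150], [500, 800, 400], 2500))
```

Reporting the event count and coverage next to the pooled HR reflects the finding above that AD and IPD estimates agreed best when the information size was large; any cut-offs for "large" would have to come from the paper itself.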

Updated: 2020-02-03
