A distributional code for value in dopamine-based reinforcement learning

Nature (IF 64.8), published 2020-01-15, DOI: 10.1038/s41586-019-1924-6

Will Dabney (1), Zeb Kurth-Nelson (1, 2), Naoshige Uchida (3), Clara Kwon Starkweather (3), Demis Hassabis (1), Rémi Munos (1), Matthew Botvinick (1, 4)


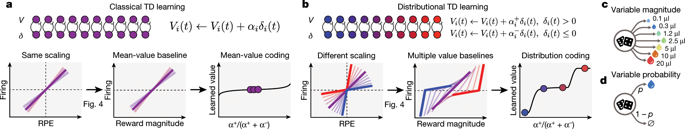

Since its introduction, the reward prediction error theory of dopamine has explained a wealth of empirical phenomena, providing a unifying framework for understanding the representation of reward and value in the brain [1,2,3]. According to the now-canonical theory, reward predictions are represented as a single scalar quantity, which supports learning about the expectation, or mean, of stochastic outcomes. Here we propose an account of dopamine-based reinforcement learning inspired by recent artificial intelligence research on distributional reinforcement learning [4,5,6]. We hypothesized that the brain represents possible future rewards not as a single mean, but instead as a probability distribution, effectively representing multiple future outcomes simultaneously and in parallel. This idea implies a set of empirical predictions, which we tested using single-unit recordings from the mouse ventral tegmental area (VTA). Our findings provide strong evidence for a neural realization of distributional reinforcement learning.
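The abstract does not spell out a learning rule, so the sketch below is illustrative only. It assumes one mechanism commonly used in distributional reinforcement learning: a population of value predictors, each scaling positive and negative reward prediction errors (RPEs) asymmetrically according to its own "optimism" level `tau`. For contrast, a classical scalar predictor uses one learning rate for all RPEs and converges toward the mean. The bimodal reward distribution, the `tau` values, `alpha`, and the function names `sample_reward` and `train` are hypothetical choices made for this example, not details taken from the paper.

```python
import random


def sample_reward(rng: random.Random) -> float:
    """Hypothetical bimodal reward: 1.0 or 9.0 with equal probability (mean 5.0)."""
    return 1.0 if rng.random() < 0.5 else 9.0


def train(taus, alpha=0.002, n_trials=50_000, seed=0):
    """Return (scalar_value, population_values) after n_trials of learning.

    scalar_value: one predictor updated with a single learning rate on every
    RPE, as in the classical scalar account; it converges toward the mean.
    population_values: one predictor per optimism level tau (an assumed
    mechanism). Positive RPEs are scaled by 2*alpha*tau and negative RPEs by
    2*alpha*(1 - tau), so each unit settles near the tau-expectile.
    """
    rng = random.Random(seed)
    scalar = 0.0
    population = [0.0] * len(taus)
    for _ in range(n_trials):
        r = sample_reward(rng)
        # Classical scalar RPE update.
        scalar += alpha * (r - scalar)
        # Distributional variant: asymmetric scaling of each unit's RPE.
        for i, tau in enumerate(taus):
            delta = r - population[i]
            rate = 2 * alpha * (tau if delta > 0 else 1.0 - tau)
            population[i] += rate * delta
    return scalar, population


taus = [0.1, 0.3, 0.5, 0.7, 0.9]
scalar, population = train(taus)
print(f"scalar predictor: {scalar:.2f}")
for tau, v in zip(taus, population):
    print(f"tau={tau:.1f}: {v:.2f}")
```

For this particular two-point distribution, the fixed point of each unit satisfies `tau * E[(r - v)+] = (1 - tau) * E[(v - r)+]`, which works out to `v = 1 + 8 * tau`. A pessimistic unit (`tau = 0.1`) therefore sits near 1.8 and an optimistic one (`tau = 0.9`) near 8.2, while the scalar predictor and the `tau = 0.5` unit both hover near the mean of 5. Taken together, the population carries information about the spread of outcomes that the single mean discards.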


Beijing Public Network Security Record No. 11010802027423
