Abstract

Blind quantum computation (BQC) is a secure quantum computation method that protects the privacy of clients. Measurement-based quantum computation (MBQC) is a promising approach for realizing BQC. To obtain reliable results in blind MBQC, it is crucial to verify whether the resource graph states are accurately prepared in the adversarial scenario. However, previous verification protocols for this task are too resource-consuming or noise-susceptible to be applied in practice. Here, we propose a robust and efficient protocol for verifying arbitrary graph states with any prime local dimension in the adversarial scenario, which leads to a robust and efficient protocol for verifying the resource state in blind MBQC. Our protocol requires only local Pauli measurements and is thus easy to realize with current technologies. Nevertheless, it can achieve optimal scaling behaviors with respect to the system size and the target precision as quantified by the infidelity and significance level, which has never been achieved before. Notably, our protocol can exponentially enhance the scaling behavior with the significance level.


Introduction

Quantum computation offers the promise of exponential speedups over classical computation on a number of important problems1,2,3. However, it is very challenging to realize practical quantum computation in the near future, especially for clients with limited quantum computational power. Blind quantum computation (BQC)4 is an effective method that enables such a client to delegate their computation to a server capable of performing quantum computation, without leaking any information about the computation task. So far, various protocols of BQC have been proposed in theory5,6,7,8 and demonstrated in experiments9,10,11,12. Many of these protocols build on the model of measurement-based quantum computation (MBQC)13,14,15, in which graph states are used as resources and local projective measurements on qudits are used to drive the computation.

To realize BQC successfully, it is crucial to protect the privacy of the client and verify the correctness of the computation results. The latter task, known as verification of BQC, has been studied in various models as explained in the Methods section, among which MBQC in the receive-and-measure setting is particularly convenient16,17,18,19,20,21. However, it is extremely challenging to construct robust and efficient verification protocols, especially for noisy, intermediate-scale quantum (NISQ) devices3,22,23. In fact, this problem lies at the heart of the active research field of quantum characterization, verification, and validation (QCVV)24,25,26,27,28,29.

In this work, we focus on the problem of verifying the resource graph states in the following adversarial scenario16,30,31, which is crucial to the verification of blind MBQC in the receive-and-measure setting6,16,17,18,19,20,21: Alice is a client (verifier) who can only perform single-qudit projective measurements with a trusted measurement device, and Bob is a server (prover) who can prepare arbitrary quantum states. In order to perform MBQC, Alice delegates the preparation of the n-qudit graph state \(\left\vert G\right\rangle \in {{{\mathcal{H}}}}\) to Bob, who then prepares a quantum state ρ on the whole space \({{{{\mathcal{H}}}}}^{\otimes (N+1)}\) and sends it to Alice qudit by qudit. If Bob is honest, he is supposed to prepare N + 1 copies of \(\left\vert G\right\rangle\); if he is malicious, he can corrupt Alice's computation by generating an arbitrarily correlated or even entangled state ρ. To obtain reliable computation results, Alice needs to verify the resource state prepared by Bob with suitable tests on N of the N + 1 systems, where each test is a binary measurement on a single-copy system. If the test results satisfy certain conditions, then the conditional reduced state on the remaining system is close to the target state \(\left\vert G\right\rangle\) and can be used for MBQC; otherwise, the state is rejected. Since there is no communication from Alice to Bob after the preparation of the state ρ, the information-theoretic blindness is guaranteed by the no-signaling principle6.

The assumption that the client can perform reliable local projective measurements can be justified as follows. First, the measurement devices are controlled by Alice in her laboratory and are not affected by the adversary. So it is reasonable to assume that the measurement devices are trustworthy. Second, in practice, Alice can calibrate and verify her measurement devices before performing blind MBQC, and the resource costs of these operations are independent of the complexity of the quantum computation and the qudit number of the resource graph state. If high-quality measurements can be certified after calibration and verification, then Alice can safely use them to verify the graph state and perform blind MBQC.

As pointed out above, the verification of the resource graph state in the adversarial scenario16,30,31 is a crucial and challenging part in the verification of blind MBQC. A valid verification protocol in the adversarial scenario has to meet the basic requirements of completeness and soundness16,20,31. Completeness means that Alice does not reject the ideal graph state \(\left\vert G\right\rangle\). Intuitively, the verification protocol is sound if Alice does not mistakenly accept any bad state that is far from the ideal state \(\left\vert G\right\rangle\). Concretely, soundness means the following: once she accepts, Alice needs to ensure with a high confidence level 1 − δ that the reduced state for MBQC has a sufficiently high fidelity (at least 1 − ϵ) with \(\left\vert G\right\rangle\). Here 0 < δ ≤ 1 is called the significance level and the threshold 0 < ϵ < 1 is called the target infidelity. The two parameters specify the target verification precision. The efficiency of a protocol is characterized by the number N of tests needed to achieve a given precision. Under the requirements of completeness and soundness, the optimal scaling behaviors of N with respect to ϵ, δ, and the qudit number n of \(\left\vert G\right\rangle\) are \(O({\epsilon }^{-1})\), \(O(\ln {\delta }^{-1})\), and O(1), respectively, as explained in the Results section. However, it is highly nontrivial to construct efficient verification protocols in the adversarial scenario. Although various protocols have been proposed16,18,20,21,31,32,33, most protocols known so far are too resource consuming. Even without considering noise robustness, only the protocol of refs. 30,31 achieves the optimal scaling behaviors with n, ϵ, and δ (see Table 1).

Moreover, most protocols are not robust to experimental noise: the state prepared by Bob may be rejected with a high probability even if it has a small deviation from the ideal resource state. However, in practice, it is extremely difficult to prepare quantum states with genuine multipartite entanglement perfectly. So it is unrealistic to ask honest Bob to generate the perfect resource state. On the other hand, if the deviation from the ideal state is small enough, then it is still useful for MBQC20,32. Therefore, a practical and robust protocol should accept nearly ideal states with a sufficiently high probability; otherwise, Alice needs to repeat the verification protocol many times to perform MBQC, which substantially increases the sample complexity. Unfortunately, no protocol known in the literature can achieve this goal.

Recently, a fault-tolerant protocol was proposed for verifying MBQC based on two-colorable graph states17. With this protocol, Alice can detect whether or not the given state belongs to a set of error-correctable states; then she can perform fault-tolerant MBQC on the accepted state. Although this protocol is noise-resilient to some extent, it is not very efficient (see Table 1), and is difficult to realize in the current era of NISQ devices3,22,23 because too many physical qubits are required to encode the logical qubits. In addition, this protocol is robust only to certain correctable errors since it is based on a given error-correcting code. If the actual error is not correctable, then the probability of acceptance will decrease exponentially with the number of tests, which substantially increases the actual sample complexity.

In this work, we propose a robust and efficient protocol for verifying general qudit graph states with a prime local dimension in the adversarial scenario, which plays a crucial role in robust and efficient verification of blind MBQC. Our protocol is appealing to practical applications because it only requires stabilizer tests based on local Pauli measurements, which are easy to implement with current technologies. It is robust against arbitrary types of noise in state preparation, as long as the fidelity is sufficiently high. Moreover, our protocol can achieve optimal scaling behaviors with respect to the system size and target precision ϵ, δ, and the sample cost is comparable to the counterpart in the nonadversarial scenario as clarified in the Methods section. As far as we know, such a high efficiency has never been achieved before when robustness is taken into account. In addition to qudit graph states, our protocol can also be applied to verifying many other important quantum states in the adversarial scenario, as explained in the Discussion section. Furthermore, many technical results developed in the course of our work are also useful for studying random sampling without replacement, as discussed in the companion paper34 (cf. the Methods section).

Results

Qudit graph states

To establish our results, we first review the definition of qudit graph states, where the local dimension d is a prime. Mathematically, a graph \(G=(V,E,{m}_{E})\) is characterized by a set of n vertices V = {1, 2, …, n} and a set of edges E together with multiplicities specified by \({m}_{E}={({m}_{e})}_{e\in E}\), where \({m}_{e}\in {{\mathbb{Z}}}_{d}\) and \({{\mathbb{Z}}}_{d}\) is the ring of integers modulo d, which is also a field given that d is a prime. Two distinct vertices i, j of G are adjacent if they are connected by an edge. The generalized Pauli operators X and Z for a qudit read

\[X\left\vert j\right\rangle =\left\vert j+1\right\rangle ,\qquad Z\left\vert j\right\rangle ={\omega }^{j}\left\vert j\right\rangle ,\tag{1}\]

where \(j\in {{\mathbb{Z}}}_{d}\), the addition j + 1 is taken modulo d, and \(\omega := {{\rm{e}}}^{2\pi {\rm{i}}/d}\).

Given a graph \(G=(V,E,{m}_{E})\) with n vertices, we can construct an n-qudit graph state \(\left\vert G\right\rangle \in {{{\mathcal{H}}}}\) as follows33,35: first, prepare the state \(\left\vert +\right\rangle := {\sum }_{j\in {{\mathbb{Z}}}_{d}}\left\vert j\right\rangle /\sqrt{d}\) for each vertex; then, for each edge e ∈ E, apply the generalized controlled-Z operation \({{{{\rm{CZ}}}}}_{e}\) to the vertices of e a total of \({m}_{e}\) times, where \({{{{\rm{CZ}}}}}_{e}={\sum }_{k\in {{\mathbb{Z}}}_{d}}\left\vert k\right\rangle {\left\langle k\right\vert }_{i}\otimes {Z}_{j}^{k}\) if e = (i, j). The resulting graph state has the form

\[\left\vert G\right\rangle =\prod _{e\in E}{{{{\rm{CZ}}}}}_{e}^{{m}_{e}}{\left\vert +\right\rangle }^{\otimes n}.\tag{2}\]
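This graph state is also uniquely determined by its stabilizer group S, which is generated by the n commuting operators \({S}_{i}:= {X}_{i}{\bigotimes }_{j\in {V}_{i}}{Z}_{j}^{{m}_{(i,j)}}\) for i = 1, 2, …, n, where \({V}_{i}\) is the set of vertices adjacent to vertex i. Each stabilizer operator in S can be written as

\[{g}_{{{{\bf{k}}}}}:= \prod _{i=1}^{n}{S}_{i}^{{k}_{i}}=:\mathop{\bigotimes }\limits_{i=1}^{n}{({g}_{{{{\bf{k}}}}})}_{i},\tag{3}\]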
where \({{{\bf{k}}}}:= ({k}_{1},\ldots ,{k}_{n})\in {{\mathbb{Z}}}_{d}^{n}\), and \({({g}_{{{{\bf{k}}}}})}_{i}\) denotes the local generalized Pauli operator for the ith qudit.
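The definitions above can be made concrete with a small numerical check. The following is a minimal pure-Python sketch, our own illustration rather than code from the source. It builds \(\left\vert G\right\rangle\) for a small graph and checks that every generator \({S}_{i}\) stabilizes it. The construction uses the fact that \({{{{\rm{CZ}}}}}_{e}^{{m}_{e}}\) multiplies \(\left\vert x\right\rangle\) by \({\omega }^{{m}_{e}{x}_{i}{x}_{j}}\), so that \(\left\langle x| G\right\rangle ={d}^{-n/2}{\omega }^{{\sum }_{e}{m}_{e}{x}_{i}{x}_{j}}\). Storing states as dictionaries, the `edges` input format, and all function names are hypothetical choices made only for this illustration.

```python
import cmath
import itertools

def graph_state(n, d, edges):
    """Amplitudes <x|G> = d^{-n/2} * omega^{sum_e m_e x_i x_j}, omega = exp(2*pi*i/d).

    Each CZ_e^{m_e}, e = (i, j), multiplies |x> by omega^{m_e x_i x_j}; applying all of
    them to |+>^{(x)n} gives this phase pattern. `edges` maps (i, j) -> m_e in Z_d.
    """
    w = cmath.exp(2j * cmath.pi / d)
    return {
        x: d ** (-n / 2) * w ** (sum(m * x[i] * x[j] for (i, j), m in edges.items()) % d)
        for x in itertools.product(range(d), repeat=n)
    }

def apply_x(psi, q, d):
    """Generalized Pauli X on qudit q: |x> -> |x + e_q mod d>."""
    out = {}
    for x, amp in psi.items():
        y = list(x)
        y[q] = (y[q] + 1) % d
        out[tuple(y)] = amp
    return out

def apply_z(psi, q, power, d):
    """Generalized Pauli Z^power on qudit q: |x> -> omega^(power * x_q) |x>."""
    w = cmath.exp(2j * cmath.pi / d)
    return {x: amp * w ** ((power * x[q]) % d) for x, amp in psi.items()}

def apply_generator(psi, i, d, edges):
    """S_i = X_i (x) prod_{j in V_i} Z_j^{m_(i,j)}; the factors act on distinct qudits."""
    out = apply_x(psi, i, d)
    for (a, b), m in edges.items():
        if i in (a, b):
            out = apply_z(out, b if a == i else a, m, d)
    return out

def same_state(psi, phi, tol=1e-9):
    """Compare amplitudes entrywise (both dicts share the same basis labels)."""
    return all(abs(psi[x] - phi[x]) < tol for x in psi)

# Example: a 3-qutrit path graph 0 -(m=1)- 1 -(m=2)- 2.
d, n, edges = 3, 3, {(0, 1): 1, (1, 2): 2}
G = graph_state(n, d, edges)
print([same_state(G, apply_generator(G, i, d, edges)) for i in range(n)])  # [True, True, True]
```

Products such as \({S}_{1}{S}_{3}^{2}\), that is, \({g}_{{{{\bf{k}}}}}\) with k = (1, 0, 2) in the 1-based labels of the text (qudits 0 and 2 in the code's 0-based indexing), can be checked in the same way by composing `apply_generator` calls.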

Strategy for testing qudit graph states

Recently, a homogeneous strategy30,31 for testing qubit stabilizer states based on stabilizer tests was proposed in ref. 36 and generalized to the qudit case with a prime local dimension in Sec. X E of ref. 31. Here we use a variant strategy for testing qudit graph states, which serves as an important subroutine of our verification protocol. Let S be the stabilizer group of \(\left\vert G\right\rangle \in {{{\mathcal{H}}}}\) and \({{{\mathcal{D}}}}({{{\mathcal{H}}}})\) be the set of all density operators on \({{{\mathcal{H}}}}\). For any operator \({g}_{{{{\bf{k}}}}}\in S\), the corresponding stabilizer test is constructed as follows. Party i measures the local generalized Pauli operator \({({g}_{{{{\bf{k}}}}})}_{i}\) for i = 1, 2, …, n and records the outcome as an integer \({o}_{i}\in {{\mathbb{Z}}}_{d}\), which corresponds to the eigenvalue \({\omega }^{{o}_{i}}\) of \({({g}_{{{{\bf{k}}}}})}_{i}\). The test is passed if and only if the outcomes satisfy \({\sum }_{i}{o}_{i}=0\,{{{\rm{mod}}}}\,d\). Since the product of the local eigenvalues is \({\omega }^{{\sum }_{i}{o}_{i}}\), this condition means that the measured eigenvalue of \({g}_{{{{\bf{k}}}}}\) equals 1. By construction, the test can be represented by a two-outcome measurement \(\{{P}_{{{{\bf{k}}}}},{\mathbb{I}}-{P}_{{{{\bf{k}}}}}\}\). Here \({\mathbb{I}}\) is the identity operator on \({{{\mathcal{H}}}}\);

\[{P}_{{{{\bf{k}}}}}:= \frac{1}{d}\sum _{j\in {{\mathbb{Z}}}_{d}}{g}_{{{{\bf{k}}}}}^{j}\tag{4}\]

is the projector onto the eigenspace of \({g}_{{{{\bf{k}}}}}\) with eigenvalue 1 and corresponds to passing the test, while \({\mathbb{I}}-{P}_{{{{\bf{k}}}}}\) corresponds to failure. It is easy to check that \({P}_{{{{\bf{k}}}}}\left\vert G\right\rangle =\left\vert G\right\rangle\), which means \(\left\vert G\right\rangle\) always passes the test. The stabilizer test corresponding to the operator \({\mathbb{I}}\in S\) is called the ‘trivial test’ since all states pass it with certainty.

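To construct a verification strategy for \(\left\vert G\right\rangle\), we perform the test \({P}_{{{{\bf{k}}}}}\) with \({{{\bf{k}}}}\) chosen uniformly at random from \({{\mathbb{Z}}}_{d}^{n}\), that is, each \({{{\bf{k}}}}\) with probability \({d}^{-n}\). The resulting strategy is characterized by a two-outcome measurement \(\{\tilde{\Omega },{\mathbb{I}}-\tilde{\Omega }\}\), which is determined by the verification operator

\[\tilde{\Omega }:= \frac{1}{{d}^{n}}\sum _{{{{\bf{k}}}}\in {{\mathbb{Z}}}_{d}^{n}}{P}_{{{{\bf{k}}}}}=\left\vert G\right\rangle \left\langle G\right\vert +\frac{1}{d}\left({\mathbb{I}}-\left\vert G\right\rangle \left\langle G\right\vert \right).\tag{5}\]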
For 1/d ≤ λ < 1, if one performs \(\tilde{\Omega }\) and the trivial test with probabilities \(p=\frac{d(1-\lambda )}{d-1}\) and 1 − p, respectively, then another strategy can be constructed as30,31
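\[\Omega := p\tilde{\Omega }+(1-p){\mathbb{I}}=\left\vert G\right\rangle \left\langle G\right\vert +\lambda \left({\mathbb{I}}-\left\vert G\right\rangle \left\langle G\right\vert \right).\tag{6}\]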
We denote by ν ≔ 1 − λ the spectral gap of Ω, that is, the gap between its largest eigenvalue 1 and its second largest eigenvalue λ. This strategy plays a key role in our verification protocol introduced in the next subsection.

As shown in Supplementary Note 6A, the second equality in Eq. (5) holds whenever d is a prime, but may fail if d is not a prime. In the latter case, our strategy is no longer homogeneous in general, and many results in this work may not hold since they are based on homogeneous strategies. This is why we restrict our attention to the case of prime local dimensions.
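For a small example, Eqs. (5) and (6) can be checked by brute force. The sketch below is our own illustration, not the authors' code. It applies \({P}_{{{{\bf{k}}}}}={d}^{-1}{\sum }_{j}{g}_{{{{\bf{k}}}}}^{j}\) directly, averages over all \({{{\bf{k}}}}\), and compares the result with the homogeneous form on a random vector. States are stored as dictionaries from basis labels to amplitudes, and all function names are hypothetical. Consistent with the remark above, the same comparison fails for the non-prime dimension d = 4 (for example, for a single vertex and no edges).

```python
import cmath
import itertools
import random

def graph_state(n, d, edges):
    """<x|G> = d^{-n/2} omega^{sum_e m_e x_i x_j}; `edges` maps (i, j) -> m_e."""
    w = cmath.exp(2j * cmath.pi / d)
    return {x: d ** (-n / 2) * w ** (sum(m * x[i] * x[j] for (i, j), m in edges.items()) % d)
            for x in itertools.product(range(d), repeat=n)}

def apply_generator(psi, i, d, edges):
    """S_i = X_i (x) prod_{j in V_i} Z_j^{m_(i,j)} on a dict-encoded state."""
    w = cmath.exp(2j * cmath.pi / d)
    out = {}
    for x, amp in psi.items():
        y = list(x)
        y[i] = (y[i] + 1) % d  # X_i shifts the ith label
        phase = sum(m * y[b if a == i else a] for (a, b), m in edges.items() if i in (a, b))
        out[tuple(y)] = amp * w ** (phase % d)  # Z_j^{m_(i,j)} on each neighbour j
    return out

def apply_g_power(psi, kvec, j, d, edges):
    """Apply g_k^j = prod_i S_i^{j k_i mod d} (the S_i commute and S_i^d = I)."""
    for i, ki in enumerate(kvec):
        for _ in range((j * ki) % d):
            psi = apply_generator(psi, i, d, edges)
    return psi

def apply_omega_tilde(v, n, d, edges):
    """Omega~ v = d^{-n} sum_k P_k v with P_k = (1/d) sum_{j in Z_d} g_k^j."""
    acc = dict.fromkeys(v, 0j)
    for kvec in itertools.product(range(d), repeat=n):
        for j in range(d):
            for x, amp in apply_g_power(v, kvec, j, d, edges).items():
                acc[x] += amp / d ** (n + 1)
    return acc

def apply_omega(v, n, d, edges, lam):
    """Omega = p Omega~ + (1 - p) I with p = d(1 - lam)/(d - 1), assuming 1/d <= lam < 1."""
    p = d * (1 - lam) / (d - 1)
    ot = apply_omega_tilde(v, n, d, edges)
    return {x: p * ot[x] + (1 - p) * v[x] for x in v}

def homogeneous(v, G, lam):
    """|G><G|v> + lam (v - |G><G|v>), the right-hand side of Eqs. (5) and (6)."""
    c = sum(G[x].conjugate() * v[x] for x in v)
    return {x: c * G[x] + lam * (v[x] - c * G[x]) for x in v}

def max_diff(a, b):
    return max(abs(a[x] - b[x]) for x in a)

rng = random.Random(7)
d, n, edges, lam = 3, 2, {(0, 1): 2}, 0.5
G = graph_state(n, d, edges)
v = {x: complex(rng.uniform(-1, 1), rng.uniform(-1, 1)) for x in G}
print(max_diff(apply_omega_tilde(v, n, d, edges), homogeneous(v, G, 1 / d)))  # rounding-level
print(max_diff(apply_omega(v, n, d, edges, lam), homogeneous(v, G, lam)))     # rounding-level
```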

Verification of graph states in blind MBQC

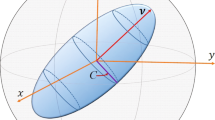

Suppose Alice intends to perform quantum computation with single-qudit projective measurements on the n-qudit graph state \(\left\vert G\right\rangle\) generated by Bob. As shown in Fig. 1, our protocol for verifying \(\left\vert G\right\rangle\) in the adversarial scenario runs as follows.

1. Bob produces a state ρ on the whole space \({{{{\mathcal{H}}}}}^{\otimes (N+1)}\) with N ≥ 1 and sends it to Alice.

2. After receiving the state, Alice randomly permutes the N + 1 systems of ρ (due to this procedure, we can assume that ρ is permutation invariant without loss of generality) and applies the strategy Ω defined in Eq. (6) to the first N systems.

3. Alice chooses an integer 0 ≤ k ≤ N − 1, called the number of allowed failures. If at most k failures are observed among the N tests, Alice accepts the reduced state \({\sigma }_{N+1}\) on the remaining system and uses it for MBQC; otherwise, she rejects it.

Fig. 1: Here the state ρ generated by Bob might be arbitrarily correlated or entangled on the whole space \({{{{\mathcal{H}}}}}^{\otimes (N+1)}\). To verify the target state, Alice first randomly permutes all N + 1 systems and then uses the strategy Ω to test each of the first N systems. Finally, she accepts the reduced state \({\sigma }_{N+1}\) on the remaining system if and only if at least N − k tests are passed.
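Suppose Bob is honest but noisy and sends i.i.d. copies \({\tau }^{\otimes (N+1)}\). In that case steps 2 and 3 can be simulated classically. The random permutation has no effect, and each application of \(\Omega =\left\vert G\right\rangle \left\langle G\right\vert +\lambda ({\mathbb{I}}-\left\vert G\right\rangle \left\langle G\right\vert )\) fails independently with probability \({{{\rm{tr}}}}[\tau ({\mathbb{I}}-\Omega )]=(1-\lambda ){\epsilon }_{\tau }\). The Monte Carlo sketch below (hypothetical function names) estimates the acceptance probability of this decision rule and compares it with the binomial formula. It does not model a malicious Bob, whose correlated or entangled ρ cannot be reduced to independent coin flips.

```python
import math
import random

def run_protocol_iid(n_tests, k_allowed, lam, eps_tau, rng):
    """One run of steps 2-3 for an i.i.d. source: each test fails w.p. (1 - lam) * eps_tau."""
    p_fail = (1 - lam) * eps_tau
    failures = sum(rng.random() < p_fail for _ in range(n_tests))
    return failures <= k_allowed  # accept iff at most k failures are observed

def estimate_acceptance(n_tests, k_allowed, lam, eps_tau, runs=10_000, seed=0):
    """Monte Carlo estimate of the acceptance probability."""
    rng = random.Random(seed)
    hits = sum(run_protocol_iid(n_tests, k_allowed, lam, eps_tau, rng) for _ in range(runs))
    return hits / runs

def exact_acceptance(n_tests, k_allowed, lam, eps_tau):
    """Binomial CDF with failure probability (1 - lam) * eps_tau, for comparison."""
    p = (1 - lam) * eps_tau
    return sum(math.comb(n_tests, j) * p**j * (1 - p) ** (n_tests - j)
               for j in range(k_allowed + 1))

# Illustrative parameters: N = 50 tests, k = 2 allowed failures, lambda = 1/2, eps_tau = 0.05.
print(estimate_acceptance(50, 2, 0.5, 0.05), exact_acceptance(50, 2, 0.5, 0.05))
```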

With this verification protocol, Alice aims to achieve three goals: completeness, soundness, and robustness. Recall that \(\left\vert G\right\rangle\) can always pass each test, so completeness is automatically guaranteed. Soundness is characterized by the target infidelity ϵ and significance level δ as explained in the introduction. For verification protocols working in the nonadversarial scenario, where the source only produces independent states with no correlation or entanglement among different runs, the optimal scaling behaviors of the test number N with respect to ϵ, δ, and n are \(O({\epsilon }^{-1})\), \(O(\ln {\delta }^{-1})\), and O(1), respectively31,36. The adversarial scenario studied in this work makes a weaker assumption about the source31,36, so the scaling behaviors in ϵ, δ, and n cannot be better. Although the condition of soundness looks quite simple, it is highly nontrivial to determine the degree of soundness. Even in the special case k = 0, this problem was resolved only very recently after quite a lengthy analysis30,31. Unfortunately, the robustness of this protocol is poor in this special case, as we shall see later. So we need to tackle this challenge in the general case.

Most previous works did not consider the problem of robustness at all, because it is already very difficult to detect the bad case without considering robustness. To characterize the robustness of a protocol, we need to consider the case in which honest Bob prepares an independent and identically distributed (i.i.d.) quantum state, that is, ρ is a tensor power of the form \(\rho ={\tau }^{\otimes (N+1)}\) with \(\tau \in {{{\mathcal{D}}}}({{{\mathcal{H}}}})\). Due to inevitable noise, τ may not equal the ideal state \(\left\vert G\right\rangle \left\langle G\right\vert\). Nevertheless, if the infidelity \({\epsilon }_{\tau }:= 1-\left\langle G\right\vert \tau \left\vert G\right\rangle\) is smaller than the target infidelity, that is, ϵτ < ϵ, then τ is still useful for quantum computing. For a robust verification protocol, such a state should be accepted with a high probability.

In the i.i.d. case, the probability that Alice accepts τ reads
\[{p}_{N,k}^{{{{\rm{iid}}}}}(\tau )={B}_{N,k}(\nu {\epsilon }_{\tau }),\tag{7}\]
where N is the number of tests, k is the number of allowed failures, and \({B}_{N,k}(p):= \mathop{\sum }\nolimits_{j = 0}^{k}\left(\begin{array}{c}N\\ j\end{array}\right){p}^{j}{(1-p)}^{N-j}\) is the binomial cumulative distribution function; here each test of τ fails independently with probability \({{{\rm{tr}}}}[\tau ({\mathbb{I}}-\Omega )]=\nu {\epsilon }_{\tau }\). To construct a robust verification protocol, it is preferable to choose a large value of k, so that \({p}_{N,k}^{{{{\rm{iid}}}}}(\tau )\) is sufficiently high. Unfortunately, most previous verification protocols can reach a meaningful conclusion only when k = 0 (refs. 16,18,30,31,33), in which case the probability
\[{p}_{N,0}^{{{{\rm{iid}}}}}(\tau )={(1-\nu {\epsilon }_{\tau })}^{N}\tag{8}\]
decreases exponentially with the test number N, which is not satisfactory. These protocols need a large number of tests to guarantee soundness, so even the state prepared by an honest Bob is unlikely to be accepted. Hence, previous protocols with the choice k = 0 are not robust to noise in state preparation. Since the acceptance probability is small, Alice needs to repeat the verification protocol many times to ensure that she accepts the state τ at least once, which substantially increases the actual sample cost.
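The cost of the choice k = 0 can be quantified directly. In the i.i.d. case, each run is accepted with probability \({(1-\nu {\epsilon }_{\tau })}^{N}\), and independent runs make the number of runs until the first acceptance geometric, with mean \({(1-\nu {\epsilon }_{\tau })}^{-N}\). The minimal sketch below uses illustrative parameter values and hypothetical function names.

```python
def acceptance_k0(n_tests, lam, eps_tau):
    """Acceptance probability with k = 0 in the i.i.d. case: (1 - nu * eps_tau)^N, nu = 1 - lam."""
    return (1 - (1 - lam) * eps_tau) ** n_tests

def expected_runs_until_accept(n_tests, lam, eps_tau):
    """Runs are independent, so the number of runs until the first acceptance is
    geometric with mean 1 / (acceptance probability)."""
    return 1 / acceptance_k0(n_tests, lam, eps_tau)

# Illustrative values: lambda = 1/2 and a fairly good source with eps_tau = 0.05.
for n_tests in (10, 100, 1000):
    print(n_tests, acceptance_k0(n_tests, 0.5, 0.05), expected_runs_until_accept(n_tests, 0.5, 0.05))
```

Doubling N squares the acceptance probability, which is the exponential decay described in the text.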

When \({\epsilon }_{\tau }=\frac{\epsilon }{2}\) for example, the number of repetitions required is at least \(\Theta \left(\exp [\frac{1}{4\delta }]\right)\) for the HM protocol in ref. 16 and \(\Theta \left(\frac{1}{\sqrt{\delta }}\right)\) for the ZH protocol in refs. 30,31 (see Supplementary Note 3 for details). As a consequence, the total number of required tests is at least \(\Theta \left(\frac{1}{\delta }\exp [\frac{1}{4\delta }]\right)\) for the HM protocol and \(\Theta \left(\frac{\ln {\delta }^{-1}}{\sqrt{\delta }}\right)\) for the ZH protocol, as illustrated in Fig. 2. Therefore, although some protocols known in the literature are reasonably efficient in detecting the bad case, they are not useful in verifying the resource state of blind MBQC in a realistic scenario.

Fig. 2: The red dots correspond to \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) in Eq. (18) with λ = 1/2, and the red dashed curve corresponds to the right-hand side of Eq. (20), which is an upper bound for \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\). The blue dashed curve corresponds to the HM protocol16, and the green solid curve corresponds to the ZH protocol31 with λ = 1/2. The performances of the TMMMF protocol20 and TM protocol32 are not shown because the numbers of tests required are too large (see Supplementary Note 3).

Guaranteed infidelity

Suppose ρ is permutation invariant. Then the probability that Alice accepts ρ reads
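\[{p}_{k}(\rho )=\mathop{\sum }\limits_{i=0}^{k}\left(\begin{array}{c}N\\ i\end{array}\right){{{\rm{tr}}}}\left[\rho \left({\Omega }^{\otimes (N-i)}\otimes {\overline{\Omega }}^{\otimes i}\otimes {\mathbb{I}}\right)\right],\tag{9}\]

where \(\overline{\Omega }:= {\mathbb{I}}-\Omega\). Denote by \({\sigma }_{N+1}\) the reduced state on the remaining system when at most k failures are observed among the N tests. The fidelity between \({\sigma }_{N+1}\) and the ideal state \(\left\vert G\right\rangle\) reads \({F}_{k}(\rho )={f}_{k}(\rho )/{p}_{k}(\rho )\) [assuming \({p}_{k}(\rho ) > 0\)], where

\[{f}_{k}(\rho )=\mathop{\sum }\limits_{i=0}^{k}\left(\begin{array}{c}N\\ i\end{array}\right){{{\rm{tr}}}}\left[\rho \left({\Omega }^{\otimes (N-i)}\otimes {\overline{\Omega }}^{\otimes i}\otimes \left\vert G\right\rangle \left\langle G\right\vert \right)\right].\tag{10}\]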
The actual verification precision can be characterized by the following figure of merit with 0 < δ ≤1,
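\[{\bar{\epsilon }}_{\lambda }(k,N,\delta ):= 1-\mathop{\min }\limits_{\rho }\left\{{F}_{k}(\rho )\,| \,{p}_{k}(\rho )\ge \delta \right\},\tag{11}\]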
where λ is determined by Eq. (6), and the minimization is taken over permutation-invariant states ρ on \({{{{\mathcal{H}}}}}^{\otimes (N+1)}\).
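As a consistency check (our own short derivation, not taken from the source), consider the i.i.d. case \(\rho ={\tau }^{\otimes (N+1)}\). Writing \(\Omega =\left\vert G\right\rangle \left\langle G\right\vert +\lambda ({\mathbb{I}}-\left\vert G\right\rangle \left\langle G\right\vert )\) and ν = 1 − λ, one has \({{{\rm{tr}}}}(\tau \Omega )=1-\nu {\epsilon }_{\tau }\) and \({{{\rm{tr}}}}(\tau \overline{\Omega })=\nu {\epsilon }_{\tau }\). Every trace in \({p}_{k}(\rho )\) and \({f}_{k}(\rho )\) therefore factorizes:

\[{p}_{k}({\tau }^{\otimes (N+1)})=\mathop{\sum }\limits_{i=0}^{k}\left(\begin{array}{c}N\\ i\end{array}\right){(1-\nu {\epsilon }_{\tau })}^{N-i}{(\nu {\epsilon }_{\tau })}^{i}={B}_{N,k}(\nu {\epsilon }_{\tau }),\qquad {f}_{k}({\tau }^{\otimes (N+1)})=(1-{\epsilon }_{\tau })\,{p}_{k}({\tau }^{\otimes (N+1)}).\]

Thus \({p}_{k}\) reproduces the i.i.d. acceptance probability, and \({F}_{k}=1-{\epsilon }_{\tau }\). For independent copies, conditioning on acceptance leaves the fidelity of the remaining system unchanged.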

If Alice accepts the state prepared by Bob, then she can guarantee (with significance level δ) that the reduced state σN+1 has infidelity at most \({\bar{\epsilon }}_{\lambda }(k,N,\delta )\) with the ideal state \(\left\vert G\right\rangle\). Consequently, according to the relation between the fidelity and trace norm, Alice can ensure the condition16
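\[\left\vert {{{\rm{tr}}}}({\sigma }_{N+1}E)-\left\langle G\right\vert E\left\vert G\right\rangle \right\vert \le \sqrt{{\bar{\epsilon }}_{\lambda }(k,N,\delta )}\tag{12}\]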
for any POVM element \(0\le E\le {\mathbb{I}}\); that is, the deviation of any measurement outcome probability from the ideal value is not larger than \(\sqrt{{\bar{\epsilon }}_{\lambda }(k,N,\delta )}\).
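The step from the fidelity guarantee to Eq. (12) can be spelled out with two standard inequalities. This is our reconstruction of the reasoning, and the source may take a different route. Assuming the infidelity of \({\sigma }_{N+1}\) is at most \({\bar{\epsilon }}_{\lambda }(k,N,\delta )\), the following holds for any POVM element \(0\le E\le {\mathbb{I}}\):

\[\left\vert {{{\rm{tr}}}}({\sigma }_{N+1}E)-\left\langle G\right\vert E\left\vert G\right\rangle \right\vert \le \frac{1}{2}{\left\Vert {\sigma }_{N+1}-\left\vert G\right\rangle \left\langle G\right\vert \right\Vert }_{1}\le \sqrt{1-\left\langle G\right\vert {\sigma }_{N+1}\left\vert G\right\rangle }\le \sqrt{{\bar{\epsilon }}_{\lambda }(k,N,\delta )}.\]

The first inequality holds because the trace distance bounds the difference of any outcome probability. The second is the Fuchs–van de Graaf inequality specialized to a pure target state.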

In view of the above discussions, the computation of \({\bar{\epsilon }}_{\lambda }(k,N,\delta )\) given in Eq. (11) is of central importance to analyzing the soundness of our protocol. Thanks to the analysis presented in the Methods section, this quantum optimization problem can actually be reduced to a classical sampling problem studied in the companion paper34. Using the results derived in ref. 34, we can deduce many useful properties of \({\bar{\epsilon }}_{\lambda }(k,N,\delta )\) as well as its analytical formula, which are presented in Supplementary Note 1. Here it suffices to clarify the monotonicity properties of \({\overline{\epsilon }}_{\lambda }(k,N,\delta )\) as stated in Proposition 1 below, which follows from Proposition 6.5 in ref. 34. Let \({{\mathbb{Z}}}^{\ge j}\) be the set of integers larger than or equal to j.

Proposition 1

Suppose 0 ≤ λ < 1, 0 < δ ≤ 1, \(k\in {{\mathbb{Z}}}^{\ge 0}\), and \(N\in {{\mathbb{Z}}}^{\ge k+1}\). Then \({\overline{\epsilon }}_{\lambda }(k,N,\delta )\) is nonincreasing in δ and N, but nondecreasing in k.

Verification with a fixed error rate

If the number k of allowed failures is sublinear in N, that is, k = o(N), then for any τ with ϵτ > 0 the acceptance probability \({p}_{N,k}^{{{{\rm{iid}}}}}(\tau )\) in Eq. (7) for the i.i.d. case approaches 0 as the number of tests N increases, because the expected number of failures νϵτN grows linearly in N. This behavior is not satisfactory. To achieve robust verification, we instead set the number k to be proportional to the number of tests, that is, k = ⌊sνN⌋, where 0 ≤ s < 1 is the error rate and ν = 1 − λ is the spectral gap of the strategy Ω. In this case, when Bob prepares i.i.d. states \(\tau \in {{{\mathcal{D}}}}({{{\mathcal{H}}}})\) with ϵτ < s, the expected failure fraction νϵτ lies below νs, so the acceptance probability \({p}_{N,k}^{{{{\rm{iid}}}}}(\tau )\) approaches one as N increases. In addition, we can deduce the following theorem, which is proved in Supplementary Note 6B.

Theorem 1

Suppose 0 < s, λ < 1, 0 < δ ≤ 1/4, and \(N\in {{\mathbb{Z}}}^{\ge 1}\). Then

Theorem 1 implies that \({\bar{\epsilon }}_{\lambda }(\lfloor \nu sN\rfloor ,N,\delta )\) converges to the error rate s when the number N of tests gets large, as illustrated in Fig. 3. To achieve a given infidelity ϵ and significance level δ, which means \({\bar{\epsilon }}_{\lambda }(\lfloor \nu sN\rfloor ,N,\delta )\le \epsilon\), it suffices to set s < ϵ and choose a sufficiently large N. By virtue of Theorem 1 we can derive the following theorem as proved in Supplementary Note 6C.

Theorem 2

Suppose 0 < λ < 1, 0 ≤ s < ϵ < 1, and 0 < δ ≤ 1/2. If the number N of tests satisfies

then \({\bar{\epsilon }}_{\lambda }(\lfloor \nu sN\rfloor ,N,\delta )\le \epsilon\).

Notably, if the ratio s/ϵ is a constant, then the sample cost is only \(O({\epsilon }^{-1}\ln {\delta }^{-1})\). The scaling behaviors in ϵ and δ are the same as the counterparts in the nonadversarial scenario, and are thus optimal.
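The claim at the beginning of this subsection can be illustrated numerically. With ϵτ < s, the acceptance probability \({B}_{N,k}(\nu {\epsilon }_{\tau })\) tends to one for k = ⌊sνN⌋ but tends to zero for a sublinear choice of k. The sketch below makes a few assumptions of its own. It uses k = ⌊√N⌋ as a hypothetical sublinear example and illustrative parameters λ = 1/2, s = 0.1, ϵτ = 0.05. The binomial CDF is summed in log space so that large N does not overflow floating point.

```python
import math

def binom_cdf(n, k, p):
    """B_{n,k}(p) = P[Binomial(n, p) <= k], summed in log space to avoid overflow."""
    if k >= n or p <= 0.0:
        return 1.0
    log_p, log_q = math.log(p), math.log1p(-p)
    total = sum(
        math.exp(math.lgamma(n + 1) - math.lgamma(j + 1) - math.lgamma(n - j + 1)
                 + j * log_p + (n - j) * log_q)
        for j in range(k + 1)
    )
    return min(total, 1.0)

def acceptance_iid(n_tests, k_allowed, lam, eps_tau):
    """p_{N,k}^iid(tau) = B_{N,k}(nu * eps_tau) with nu = 1 - lam."""
    return binom_cdf(n_tests, k_allowed, (1 - lam) * eps_tau)

lam, s, eps_tau = 0.5, 0.1, 0.05  # illustrative values with eps_tau < s
nu = 1 - lam
for n_tests in (100, 1000, 10000):
    k_linear = math.floor(s * nu * n_tests)  # k = floor(s * nu * N)
    k_sublinear = math.isqrt(n_tests)        # hypothetical sublinear choice, k ~ sqrt(N)
    print(n_tests, acceptance_iid(n_tests, k_sublinear, lam, eps_tau),
          acceptance_iid(n_tests, k_linear, lam, eps_tau))
```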

The number of allowed failures

Next, we consider the case in which the number N of tests is given. To construct a concrete verification protocol, we need to specify the number k of allowed failures such that the conditions of soundness and robustness are satisfied simultaneously. According to Proposition 1, a small k is preferred to guarantee soundness, while a larger k is preferred to guarantee robustness. To construct a robust and efficient verification protocol, we need to find a good balance between the two conflicting requirements. The following proposition provides a suitable interval for the number k of allowed failures that can guarantee soundness; see Supplementary Note 6E for a proof.

Proposition 2

Suppose 0 < λ, ϵ < 1, 0 < δ ≤ 1/4, and \(N,k\in {{\mathbb{Z}}}^{\ge 0}\). If νϵN ≤ k ≤ N − 1, then \({\bar{\epsilon }}_{\lambda }(k,N,\delta ) >\, \epsilon\). If k ≤ l(λ, N, ϵ, δ), then \({\bar{\epsilon }}_{\lambda }(k,N,\delta )\le \epsilon\). Here

Next, we turn to the condition of robustness. When honest Bob prepares i.i.d. quantum states \(\tau \in {{{\mathcal{D}}}}({{{\mathcal{H}}}})\) with infidelity 0 < ϵτ < ϵ, the probability that Alice accepts τ is \({p}_{N,k}^{{{{\rm{iid}}}}}(\tau )\) given in Eq. (7), which is strictly increasing in k according to Lemma S4 in Supplementary Note 2. Suppose we set k = l(λ, N, ϵ, δ). As the number of tests N increases, the acceptance probability has the following asymptotic behavior if 0 < ϵτ < ϵ (see Supplementary Note 6F for a proof),

where \(D(p\parallel q):= p\ln \frac{p}{q}+(1-p)\ln \frac{1-p}{1-q}\) is the relative entropy between two binary probability vectors (p, 1−p) and (q, 1−q), and l is a shorthand for l(λ, N, ϵ, δ). Therefore, the probability of acceptance is arbitrarily close to one as long as N is sufficiently large, as illustrated in Fig. 4. Hence, our verification protocol is able to reach any degree of robustness.
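The relative entropy D(p‖q) in this asymptotic statement reflects a standard Chernoff bound for binomial tails. If X ~ Binomial(N, q) and l/N ≥ q, then Pr[X ≥ l] ≤ exp[−N D(l/N‖q)]. Taking q = νϵτ, this bounds the rejection probability \(1-{B}_{N,l}(\nu {\epsilon }_{\tau })\) and shows that it decays exponentially as long as l/N stays above νϵτ. This is a generic bound, not necessarily the exact form of the asymptotic behavior stated above. The sketch below, with hypothetical function names, checks the bound numerically for small N.

```python
import math

def binary_relative_entropy(p, q):
    """D(p||q) = p ln(p/q) + (1-p) ln((1-p)/(1-q)), using 0 ln 0 = 0; assumes 0 < q < 1."""
    def term(a, b):
        return 0.0 if a == 0 else a * math.log(a / b)
    return term(p, q) + term(1 - p, 1 - q)

def upper_tail(n, l, q):
    """Exact P[X > l] = 1 - B_{n,l}(q) for X ~ Binomial(n, q) (fine for moderate n)."""
    return sum(math.comb(n, j) * q**j * (1 - q) ** (n - j) for j in range(l + 1, n + 1))

def chernoff_bound(n, l, q):
    """Chernoff bound P[X >= l] <= exp(-n D(l/n || q)), valid when l/n >= q."""
    return math.exp(-n * binary_relative_entropy(l / n, q))

q = 0.5 * 0.05  # nu * eps_tau with illustrative lambda = 1/2, eps_tau = 0.05
for n in (50, 100, 200, 400):
    l = n // 20  # l/N >= 0.04 > q in all four cases
    print(n, upper_tail(n, l, q), chernoff_bound(n, l, q))
```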

Fig. 4: Here λ = 1/2, infidelity ϵ = 0.1, and significance level δ = 0.01; ϵτ is the infidelity between τ and the target state \(\left\vert G\right\rangle\); and l(λ, N, ϵ, δ) is the number of allowed failures defined in Eq. (15).

Sample complexity of robust verification

Now we consider the resource cost required by our protocol to reach a given verification precision and robustness. Let ρ be the state on \({{{{\mathcal{H}}}}}^{\otimes (N+1)}\) prepared by Bob and \({\sigma }_{N+1}\) be the reduced state after Alice performs suitable tests and accepts the state ρ. Verifying the target state within infidelity ϵ, significance level δ, and robustness r (with 0 ≤ r < 1) entails the following two conditions.

1. (Soundness) If the infidelity of \({\sigma }_{N+1}\) with the target state is larger than ϵ, then the probability that Alice accepts ρ is less than δ.

2. (Robustness) If \(\rho ={\tau }^{\otimes (N+1)}\) with \(\tau \in {{{\mathcal{D}}}}({{{\mathcal{H}}}})\) and ϵτ ≤ rϵ, then the probability that Alice accepts ρ is at least 1 − δ.

The tensor power ρ in Condition 2 can be replaced by the tensor product of N + 1 independent quantum states \({\tau }_{1},{\tau }_{2},\ldots ,{\tau }_{N+1}\in {{{\mathcal{D}}}}({{{\mathcal{H}}}})\) that have infidelities at most rϵ. All our conclusions do not change under this modification.

To achieve the conditions of soundness and robustness, we need to choose the test number N and the number k of allowed failures properly. To determine the resource cost, we define \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) as the minimum number of tests required for robust verification, that is, the minimum positive integer N such that there exists an integer 0 ≤ k ≤ N−1 which together with N achieves the above two conditions. Note that the conditions of soundness and robustness can be expressed as
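\[{\bar{\epsilon }}_{\lambda }(k,N,\delta )\le \epsilon ,\qquad {B}_{N,k}(\nu r\epsilon )\ge 1-\delta .\tag{17}\]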
So \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) can be expressed as
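\[{N}_{\min }(\epsilon ,\delta ,\lambda ,r)=\min \left\{N\in {{\mathbb{Z}}}^{\ge 1}\,| \,\exists \,k\in \{0,1,\ldots ,N-1\}:\ {\bar{\epsilon }}_{\lambda }(k,N,\delta )\le \epsilon ,\ {B}_{N,k}(\nu r\epsilon )\ge 1-\delta \right\}.\tag{18}\]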
Algorithm 1

Minimum test number for robust verification

Input: λ, ϵ, δ ∈ (0, 1) and r ∈ [0, 1).

Output: \({k}_{\min }(\epsilon ,\delta ,\lambda ,r)\) and \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\).

1: if r = 0 then

2: \({k}_{\min }\leftarrow 0\)

3: else

4: for k = 0, 1, 2, … do

5: Find the largest integer M such that \({B}_{M,k}(\nu r\epsilon )\ge 1-\delta\).

6: if M ≥ k + 1 and \({\bar{\epsilon }}_{\lambda }(k,M,\delta )\le \epsilon \), then

7: stop

8: end if

9: end for

10: \({k}_{\min }\leftarrow k\)

11: end if

12: Find the smallest integer N that satisfies \(N\ge {k}_{\min }+1\) and \({\bar{\epsilon }}_{\lambda }({k}_{\min },N,\delta )\le \epsilon \).

13: \({N}_{\min }\leftarrow N\)

14: return \({k}_{\min }\) and \({N}_{\min }\).

Next, we propose a simple algorithm, Algorithm 1, for computing \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\), which is very useful for practical applications. In addition to \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\), this algorithm determines the corresponding number of allowed failures, which is denoted by \({k}_{\min }(\epsilon ,\delta ,\lambda ,r)\). In Supplementary Note 7C we explain why Algorithm 1 works. Algorithm 1 is particularly useful for studying the variations of \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) with the four parameters ϵ, δ, λ, r as illustrated in Fig. 5. When δ and r are fixed, \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) is inversely proportional to ϵ; when ϵ, r are fixed and δ approaches 0, \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) is proportional to \(\ln {\delta }^{-1}\). In addition, Fig. 5d indicates that a strategy Ω with small or large λ is not very efficient for robust verification, while any choice satisfying 0.3 ≤ λ ≤ 0.5 is nearly optimal.
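A compact Python sketch of Algorithm 1 follows. It is an illustration under stated assumptions, not the authors' implementation. The analytical formula for \({\bar{\epsilon }}_{\lambda }(k,N,\delta )\) (Supplementary Note 1) is not reproduced here, so the caller must supply it as a function `eps_bar(k, N, delta)`. The sketch relies on the monotonicity in Proposition 1 and on the fact that \({B}_{M,k}(p)\) is nonincreasing in M. That second fact lets step 5 use doubling plus bisection instead of a linear scan. Names such as `min_test_number` and the safety caps `k_max`, `n_max` are hypothetical.

```python
import math
from typing import Callable, Tuple

# eps_bar(k, N, delta) -> guaranteed infidelity; must be supplied by the caller
# (e.g. from the analytical formula in Supplementary Note 1, not reproduced here).
EpsBar = Callable[[int, int, float], float]

def binom_cdf(n: int, k: int, p: float) -> float:
    """B_{n,k}(p) = P[Binomial(n, p) <= k], summed in log space to avoid overflow."""
    if k >= n or p <= 0.0:
        return 1.0
    log_p, log_q = math.log(p), math.log1p(-p)
    total = sum(
        math.exp(math.lgamma(n + 1) - math.lgamma(j + 1) - math.lgamma(n - j + 1)
                 + j * log_p + (n - j) * log_q)
        for j in range(k + 1)
    )
    return min(total, 1.0)

def largest_robust_m(k: int, p: float, delta: float) -> int:
    """Step 5: largest M with B_{M,k}(p) >= 1 - delta, assuming p > 0.

    B_{M,k}(p) is nonincreasing in M, so doubling followed by bisection suffices.
    """
    lo, hi = k, max(2 * k, 1)  # B_{k,k}(p) = 1, so M = k always qualifies
    while binom_cdf(hi, k, p) >= 1 - delta:
        lo, hi = hi, 2 * hi
    while hi - lo > 1:  # invariant: B(lo) >= 1 - delta > B(hi)
        mid = (lo + hi) // 2
        if binom_cdf(mid, k, p) >= 1 - delta:
            lo = mid
        else:
            hi = mid
    return lo

def min_test_number(eps_bar: EpsBar, lam: float, eps: float, delta: float, r: float,
                    k_max: int = 100_000, n_max: int = 10**7) -> Tuple[int, int]:
    """Return (k_min, N_min) following the steps of Algorithm 1."""
    nu = 1.0 - lam
    if r == 0:
        k_min = 0  # robustness is automatic because B_{N,k}(0) = 1
    else:
        p = nu * r * eps
        for k in range(k_max + 1):
            m = largest_robust_m(k, p, delta)
            if m >= k + 1 and eps_bar(k, m, delta) <= eps:
                k_min = k
                break
        else:
            raise RuntimeError("no feasible k found up to k_max")
    n = k_min + 1  # step 12: smallest N >= k_min + 1 meeting the soundness condition
    while eps_bar(k_min, n, delta) > eps:
        n += 1
        if n > n_max:
            raise RuntimeError("no feasible N found up to n_max")
    return k_min, n
```

For example, `min_test_number(my_eps_bar, 0.5, 0.01, 0.01, 0.5)` would return the pair \(({k}_{\min },{N}_{\min })\) for λ = r = 1/2 and ϵ = δ = 0.01, where `my_eps_bar` is a hypothetical implementation of the analytical formula. A placeholder function that merely has the right monotonicity exercises the search logic but yields numbers with no meaning for the actual protocol.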

Fig. 5: (a) Variations of \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) with \({\epsilon }^{-1}\) and λ, where robustness r = 1/2 and significance level δ = 0.01. (b) Variations of \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) with \({\epsilon }^{-1}\) and r, where δ = 0.01 and λ = 1/2. (c) Variations of \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) with \({\log }_{10}{\delta }^{-1}\) and r, where λ = 1/2 and infidelity ϵ = 0.01. (d) Variations of \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) with λ and δ, where ϵ = 0.01 and r = 1/2.

The following theorem provides an informative upper bound for \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) and clarifies the sample complexity of robust verification; see Supplementary Note 6D for a proof.

Theorem 3

Suppose 0 < λ, ϵ < 1, 0 < δ ≤ 1/2, and 0 ≤ r < 1. Then the conditions of soundness and robustness in Eq. (17) hold as long as

For given λ and r, the minimum number of tests is only \(O({\epsilon }^{-1}\ln {\delta }^{-1})\), which is independent of the qudit number n of \(\left\vert G\right\rangle\) and achieves the optimal scaling behaviors with respect to the infidelity ϵ and significance level δ. The coefficient is large when λ is close to 0 or 1, while it is around the minimum for any value of λ in the interval [0.3, 0.5]. Numerical calculation based on Algorithm 1 shows that the upper bound for \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) provided in Theorem 3 is a bit conservative, especially when r is small. In other words, the actual sample cost is smaller than what can be proved rigorously. Nevertheless, the bound is quite informative about the general trends. If we choose r = λ = 1/2 for example, then Theorem 3 implies that

while numerical calculation yields \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\le 67\,{\epsilon }^{-1}\ln {\delta }^{-1}\). Compared with previous works16,30,31, our protocol improves the scaling behavior with respect to the significance level δ exponentially and even doubly exponentially, as illustrated in Fig. 2.
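For a sense of scale, take the illustrative values ϵ = δ = 0.01, which are not a result reported in the source. With r = λ = 1/2, the numerical bound just quoted gives

\[{N}_{\min }\le 67\times {0.01}^{-1}\times \ln 100\approx 67\times 100\times 4.605\approx 3.09\times {10}^{4}.\]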

Discussion

Verification of resource graph states in the adversarial scenario is a crucial step in the verification of blind MBQC. We have proposed a highly robust and efficient protocol for achieving this task, which applies to any qudit graph state with a prime local dimension. To implement this protocol, it suffices to perform simple stabilizer tests based on local Pauli measurements, which makes it well suited to NISQ devices. For any given degree of robustness, verifying the target graph state within infidelity ϵ and significance level δ requires only \(O({\epsilon }^{-1}\ln {\delta }^{-1})\) tests. This is the optimal sample complexity with respect to the system size, infidelity, and significance level. Compared with previous protocols, our protocol can reduce the sample cost dramatically in a realistic scenario; notably, the scaling behavior in the significance level can be improved exponentially.

So far we have focused on the verification of resource graph states with trustworthy and ideal local projective measurements. According to Eq. (12), if the blind MBQC is performed with ideal measurements after Alice accepts the state prepared by Bob, then the precision of the computation results is guaranteed by the precision of the graph state. In practice, however, it is unrealistic to assume that the measurement devices are perfect. Additional steps are therefore needed to guarantee the precision of the computation results when verifying blind MBQC in the receive-and-measure setting. As mentioned in the introduction, the client can calibrate her measurement devices with a small overhead before performing blind MBQC. In addition, the noise in measurements can be converted to noise in state preparation. This conversion requires the assumption that every measurement used in MBQC and graph state verification can be expressed as the composition of a measurement-independent noise process and the noiseless measurement. The details of this conversion method are presented in Supplementary Note 4. When the noise process depends on the specific measurement, the situation is more complicated, and further study is required to deal with such noise.

After obtaining a reliable resource graph state accepted by the verification protocol, Alice can use it to perform MBQC. In this procedure, she needs to adaptively select local projective measurements to drive the computation. Nevertheless, these operations can be completed by using a classical computer, and the classical computation complexity scales linearly with the size of the original quantum computation13. Therefore, the most challenging part in the verification of blind MBQC is the verification of the resource graph state, which is the focus of this work.

In the above discussion, we assume that the measurement devices are controlled by the client and are trustworthy. It is also desirable to construct robust and efficient protocols for verifying blind MBQC when the measurement devices are not trustworthy. To this end, a device-independent (DI) verification protocol was proposed in ref. 37. However, this protocol has a quantum communication complexity of order \(O({\tilde{n}}^{c})\), where \(\tilde{n}\) is the size of the delegated quantum computation and c > 2048. This is prohibitive for any practical implementation. By combining the CHSH inequality and stabilizer tests applied to a qubit graph state, ref. 19 proposed a protocol for self-testing MBQC in the receive-and-measure setting. This protocol requires \(O({n}^{4}\log n)\) samples, with n being the qubit number of the resource graph state. It is much more efficient than previous protocols but still far from the optimal scaling achieved in this work. In addition, it does not address robustness. To further reduce the overhead and improve the robustness, it might be helpful to combine our approach with DI quantum state certification (DI QSC) developed recently38. See Supplementary Note 5 for details.

In addition to graph states, our protocol can also be used to verify many other pure quantum states in the adversarial scenario, where the state preparation is controlled by a potentially malicious adversary Bob, who can produce an arbitrary correlated or entangled state ρ on the whole system \({{{{\mathcal{H}}}}}^{\otimes (N+1)}\). Let \(\left\vert \Psi \right\rangle \in {{{\mathcal{H}}}}\) be the target pure state to be verified. Then a verification strategy Ω for \(\left\vert \Psi \right\rangle\) is called homogeneous30,31 if it has the form
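
\(\Omega =\left\vert \Psi \right\rangle \left\langle \Psi \right\vert +\lambda \left(1-\left\vert \Psi \right\rangle \left\langle \Psi \right\vert \right),\qquad 0\le \lambda < 1.\qquad (22)\)
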
Efficient homogeneous strategies based on local projective measurements have been constructed for many important quantum states31,36,39,40,41,42,43,44,45,46.
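For a homogeneous strategy Ω = |Ψ⟩⟨Ψ| + λ(1 − |Ψ⟩⟨Ψ|), the passing probability of any state σ depends only on its fidelity F = ⟨Ψ|σ|Ψ⟩: tr(Ωσ) = F + λ(1 − F) = 1 − νϵσ, with ν = 1 − λ and ϵσ = 1 − F. The NumPy sketch below checks this identity numerically. It also checks the spectrum {1, λ, …, λ}. It uses a randomly drawn target and a random mixed state. The dimension, seed, and helper names are arbitrary illustrative choices, and the random Ψ stands in for a generic target rather than a graph state.

```python
import numpy as np

def random_pure_state(d: int, rng: np.random.Generator) -> np.ndarray:
    v = rng.normal(size=d) + 1j * rng.normal(size=d)
    return v / np.linalg.norm(v)

def random_density_matrix(d: int, rng: np.random.Generator) -> np.ndarray:
    g = rng.normal(size=(d, d)) + 1j * rng.normal(size=(d, d))
    rho = g @ g.conj().T                 # positive semidefinite
    return rho / np.trace(rho).real      # normalize to unit trace

def homogeneous_strategy(psi: np.ndarray, lam: float) -> np.ndarray:
    """Omega = |psi><psi| + lam * (I - |psi><psi|)."""
    proj = np.outer(psi, psi.conj())
    return proj + lam * (np.eye(len(psi)) - proj)

rng = np.random.default_rng(7)
d, lam = 9, 0.5                          # e.g. two qutrits, lambda = 1/2
psi = random_pure_state(d, rng)
sigma = random_density_matrix(d, rng)
omega = homogeneous_strategy(psi, lam)

pass_prob = np.trace(omega @ sigma).real
infidelity = 1 - (psi.conj() @ sigma @ psi).real
print(np.isclose(pass_prob, 1 - (1 - lam) * infidelity))  # True
```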

If a homogeneous strategy Ω given in Eq. (22) can be constructed, then the target state \(\left\vert \Psi \right\rangle\) can be verified in the adversarial scenario by virtue of our protocol: Alice first randomly permutes all systems of ρ and applies the strategy Ω to the first N systems, then she accepts the remaining unmeasured system if at most k failures are observed among these tests. Most results (including Theorems 1, 2, 3, Algorithm 1, and Propositions 1, 2) in this paper are still applicable if the target graph state \(\left\vert G\right\rangle\) is replaced by \(\left\vert \Psi \right\rangle\). Therefore, our verification protocol is of interest not only to blind MBQC, but also to many other tasks in quantum information processing that entail high security. More results on quantum state verification (QSV) in the adversarial scenario are presented in Supplementary Note 7.
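Once the individual tests are specified, the accept/reject logic of this protocol is purely classical. The sketch below assumes a hypothetical callback `run_test(system) -> bool` that performs one test drawn from Ω on a single system and returns True on a pass. The systems are opaque placeholders here. The sketch therefore captures only the permute, test, and count steps, not the quantum measurements themselves.

```python
import random
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")

def adversarial_verify(systems: Sequence[T], run_test: Callable[[T], bool],
                       k: int, rng: random.Random) -> Optional[T]:
    """Permute the N + 1 received systems, test the first N, keep the last.

    `run_test` is a hypothetical stand-in for one test drawn from Omega.
    Returns the kept system if at most k tests fail, and None (reject) otherwise.
    """
    order = list(range(len(systems)))
    rng.shuffle(order)                   # Bob cannot know which system will be kept
    *tested, kept = order                # first N positions are tested, the last is kept
    failures = sum(1 for i in tested if not run_test(systems[i]))
    return systems[kept] if failures <= k else None

# Toy usage: "good" systems always pass; "bad" ones pass with probability lam.
rng = random.Random(2024)
lam = 0.5

def toy_test(system: str) -> bool:
    return system == "good" or rng.random() < lam

batch = ["good"] * 20 + ["bad"] * 3
print(adversarial_verify(batch, toy_test, k=2, rng=rng))
```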

Up to now, we have focused on robust QSV in the adversarial scenario, in which the prepared state ρ can be arbitrarily correlated or entangled, as is pertinent to blind MBQC. On the other hand, robust QSV in the i.i.d. scenario is also important to many applications. Although this scenario is much simpler than the adversarial scenario, the sample complexity of robust QSV in it has not been clarified before. In the Methods section and Supplementary Note 8 we discuss this issue in detail and clarify the sample complexity of robust QSV in the i.i.d. scenario in comparison with the adversarial scenario. Not surprisingly, most of our results on the adversarial scenario have analogs in the i.i.d. scenario.

Methods

Protocols for realizing verifiable BQC

To put our work into context, here we briefly review existing protocols for realizing verifiable BQC, which can be broadly divided into four classes24. Many protocols in the four classes build on the model of MBQC due to its convenience and flexibility.

The first class of protocols works in the multi-prover setting8,37,47,48. These protocols allow the client (verifier) to be completely classical. The price is the requirement of multiple non-communicating servers (provers) that share entanglement with each other, which is very difficult to realize in practice.

The second and third classes of protocols need only a single server, but assume that the client has limited quantum computational power. The second class of protocols works in the prepare-and-send setting10,49,50,51, in which the client has a trusted preparation device and the ability to send single-qudit quantum states to the server. This class includes the protocol based on quantum authentication49, protocol based on repeating indistinguishable runs of tests and computations50, and protocol based on trap qubits51, which has been demonstrated experimentally10. The third class of protocols works in the receive-and-measure setting16,17,18,20,21,37, in which the client receives quantum states from the server and has the ability to perform reliable local projective measurements. This class includes the protocol based on CHSH games37, protocols based on QSV in the adversarial scenario16,17,18,20,21, and our protocol. Notably, the above three classes of protocols are all information-theoretically secure24.

Recently, the fourth class of protocols based on computational assumptions has been developed52,53,54,55, which elegantly enables a classical client to hide and verify the quantum computation of a single server. However, these schemes are no longer information-theoretically secure, and their overheads are prohibitive for any practical implementation in the near future.

Simplifying the calculation of \({\overline{\epsilon }}_{\lambda }(k,N,\delta )\)

Here we show how to simplify the calculation of the guaranteed infidelity \({\overline{\epsilon }}_{\lambda }(k,N,\delta )\) given in Eq. (11) by virtue of results derived in the companion paper34.

Recall that Ω is a homogeneous strategy for the target state \(\left\vert G\right\rangle \in {{{\mathcal{H}}}}\) as shown in Eq. (6). It has the following spectral decomposition,

\(\Omega ={\Pi }_{1}+\lambda \mathop{\sum }_{j=2}^{D}{\Pi }_{j},\qquad (23)\)

where D is the dimension of \({{{\mathcal{H}}}}\), and Πj are mutually orthogonal rank-1 projectors with \({\Pi }_{1}=\left\vert G\right\rangle \left\langle G\right\vert\). In addition, ρ is a permutation-invariant state on \({{{{\mathcal{H}}}}}^{\otimes (N+1)}\). Note that pk(ρ) defined in Eq. (9) and fk(ρ) defined in Eq. (10) only depend on the diagonal elements of ρ in the product basis constructed from the eigenbasis of Ω (as determined by Πj). Hence, we may assume that ρ is diagonal in this basis without loss of generality. In other words, ρ can be expressed as a mixture of tensor products of Πj. For i = 1, 2, …, N + 1, we can associate the ith system of ρ with a {0, 1}-valued variable Yi: we define Yi = 0 (1) if the state on the ith system is Π1 (Πj≠1). Since the state ρ is permutation invariant, the variables Y1, …, YN+1 are subject to a permutation-invariant joint distribution \({P}_{{Y}_{1},\ldots ,{Y}_{N+1}}\) on [N + 1] ≔ {1, 2, …, N + 1}. Conversely, for any permutation-invariant joint distribution on [N + 1], we can always find a diagonal state ρ, whose corresponding variables Y1, …, YN+1 are subject to this distribution.

Next, we define a {0, 1}-valued random variable Ui to express the test outcome on the ith system, where 0 corresponds to passing the test and 1 corresponds to failure. If Yi = 0, which means the state on the ith system is Π1, then the ith system must pass the test; if Yi = 1, which means the state on the ith system is Πj≠1, then the ith system passes the test with probability λ, and fails with probability 1 − λ. So we have the following conditional distribution:
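
\({P}_{{U}_{i}| {Y}_{i}}(0| 0)=1,\quad {P}_{{U}_{i}| {Y}_{i}}(1| 0)=0,\quad {P}_{{U}_{i}| {Y}_{i}}(0| 1)=\lambda ,\quad {P}_{{U}_{i}| {Y}_{i}}(1| 1)=1-\lambda .\qquad (24)\)
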
Note that the distribution of Ui is determined by the random variable Yi and the parameter λ in Eq. (6). Let K be the random variable that counts the number of 1s (that is, the number of failures) among U1, U2, …, UN. Then the probability that Alice accepts is

\({p}_{k}(\rho )=\Pr (K\le k),\qquad (25)\)

given that Alice accepts if at most k failures are observed among the N tests. This probability only depends on the joint distribution \({P}_{{Y}_{1},\ldots ,{Y}_{N+1}}\). If at most k failures are observed, then the fidelity of the state on the (N + 1)th system can be expressed as the conditional probability

\({f}_{k}(\rho )=\Pr ({Y}_{N+1}=0\,| \,K\le k),\qquad (26)\)

which also only depends on \({P}_{{Y}_{1},\ldots ,{Y}_{N+1}}\). Hence, the guaranteed infidelity defined in Eq. (11) can be expressed as
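
\({\overline{\epsilon }}_{\lambda }(k,N,\delta )=\mathop{\max }\limits_{{P}_{{Y}_{1},\ldots ,{Y}_{N+1}}}\left\{\Pr ({Y}_{N+1}=1\,| \,K\le k)\,:\,\Pr (K\le k)\ge \delta \right\},\qquad (27)\)
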
where the optimization is taken over all permutation-invariant joint distributions \({P}_{{Y}_{1},\ldots ,{Y}_{N+1}}\).
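Every permutation-invariant distribution of Y1, …, YN+1 is a mixture of uniformly random strings with a fixed number m of ones. For moderate N, the optimization in Eq. (27) can therefore be evaluated by brute force. The sketch below is our own illustration, not the method of ref. 34 or Algorithm 1. For each m, it computes a_m = P(K ≤ k, YN+1 = 1) and b_m = P(K ≤ k), using the conditional distribution above: a system with Yi = 1 fails with probability ν = 1 − λ. It then maximizes Σ w_m a_m / Σ w_m b_m subject to Σ w_m b_m ≥ δ. A linear-fractional objective of this kind attains its maximum at a vertex of the feasible polytope, and every vertex mixes at most two values of m. Enumerating single weights and boundary pairs therefore suffices.

```python
from math import comb

def binom_cdf(n: int, k: int, p: float) -> float:
    """Probability of at most k failures among n trials, each failing with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(min(k, n) + 1))

def guaranteed_infidelity(k: int, N: int, delta: float, lam: float) -> float:
    """Brute-force max of P(Y_{N+1}=1 | K<=k) subject to P(K<=k) >= delta.

    The maximum runs over permutation-invariant distributions of Y_1..Y_{N+1},
    i.e. over mixtures of "m bad systems placed uniformly at random".
    """
    nu = 1.0 - lam                      # a system with Y_i = 1 fails with probability nu
    a, b = [], []                       # a[m] = P(K<=k, Y_{N+1}=1 | m), b[m] = P(K<=k | m)
    for m in range(N + 2):
        # Last system bad: the N tested systems contain m - 1 bad ones.
        bad_last = m / (N + 1) * binom_cdf(m - 1, k, nu) if m > 0 else 0.0
        # Last system good: the N tested systems contain all m bad ones.
        good_last = (N + 1 - m) / (N + 1) * binom_cdf(m, k, nu) if m <= N else 0.0
        a.append(bad_last)
        b.append(bad_last + good_last)

    # The maximum sits at a vertex: a single m with b[m] >= delta,
    # or two values of m mixed so that P(K<=k) = delta exactly.
    best = 0.0
    for i in range(N + 2):
        if b[i] >= delta:
            best = max(best, a[i] / b[i])
        for j in range(i + 1, N + 2):
            if (b[i] - delta) * (b[j] - delta) < 0:
                w = (delta - b[j]) / (b[i] - b[j])
                best = max(best, (w * a[i] + (1 - w) * a[j]) / delta)
    return best

print(guaranteed_infidelity(k=2, N=100, delta=0.05, lam=0.5))
```

Any i.i.d. preparation is one feasible mixture. The returned value therefore upper-bounds the infidelity of every i.i.d. state whose acceptance probability is at least δ.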

Equation (27) reduces the computation of \({\overline{\epsilon }}_{\lambda }(k,N,\delta )\) to the computation of a maximum conditional probability. The latter problem was studied in detail in our companion paper34, in which \({\overline{\epsilon }}_{\lambda }(k,N,\delta )\) is called the upper confidence limit. Hence, all properties of \({\overline{\epsilon }}_{\lambda }(k,N,\delta )\) derived in ref. 34 also hold in the current context. Notably, several results in this paper are simple corollaries of the counterparts in ref. 34. To be specific, Proposition 1 follows from Proposition 6.5 in ref. 34; Theorem S1 in Supplementary Note 1 follows from Theorem 6.4 in ref. 34; Lemma S6 in Supplementary Note 2 follows from Lemma 6.7 in ref. 34; Lemma S7 in Supplementary Note 2 follows from Lemma 2.2 in ref. 34; Proposition S7 in Supplementary Note 7 follows from Lemma 5.4 and Eq. (89) in ref. 34.

Although this paper and the companion paper34 study essentially the same quantity \({\overline{\epsilon }}_{\lambda }(k,N,\delta )\), they have different focuses. In ref. 34, we mainly focus on asymptotic behaviors of \({\overline{\epsilon }}_{\lambda }(k,N,\delta )\) and its related quantities, which are of interest to the theory of statistical sampling and hypothesis testing. The main goal of ref. 34 is to show that the randomized test with parameter λ > 0 can substantially improve the significance level over the deterministic test with λ = 0. In this paper, by contrast, we focus on finite bounds for \({\overline{\epsilon }}_{\lambda }(k,N,\delta )\) and its related quantities, which are important to practical applications. In addition, the key result on robust verification, Theorem 3, has no analog in the companion paper. The main goal of this paper is to provide a robust and efficient protocol for verifying the resource graph state in blind MBQC and clarify the sample complexity. So the two papers are complementary to each other.

It is worth pointing out that the ‘randomized test’ considered in ref. 34 has a different meaning from the ‘quantum test’ in this paper because of different conventions in the two communities. The ‘randomized test’ in ref. 34 means the whole procedure that one observes the N variables U1, U2, …, UN and makes a decision based on the number of failures observed; while a ‘quantum test’ in this paper means Alice performs a two-outcome measurement on one system of the state ρ, in which one outcome corresponds to passing the test, and the other outcome corresponds to a failure.

Robust and efficient verification of quantum states in the i.i.d. scenario

Up to now we have focused on QSV in the adversarial scenario, in which the server Bob can prepare an arbitrary state ρ on the whole space \({{{{\mathcal{H}}}}}^{\otimes (N+1)}\). In this section, we turn to the i.i.d. scenario, in which the prepared state is a tensor power of the form ρ = σ⊗(N+1) with \(\sigma \in {{{\mathcal{D}}}}({{{\mathcal{H}}}})\). This verification problem was originally studied in refs. 39,40 and later more systematically in ref. 36. So far, efficient verification strategies based on local operations and classical communication (LOCC) have been constructed for various classes of pure states, including bipartite pure states42,43,56, stabilizer states (including graph states)16,31,33,36,57, hypergraph states33, weighted graph states58, Dicke states45,59, ground states of local Hamiltonians60,61, and certain continuous-variable states62, see refs. 28,29 for overviews. Verification protocols based on local collective measurements have also been constructed for Bell states40,63. However, most previous works did not consider the problem of robustness. Consequently, most protocols known so far are not robust, and the sample cost may increase substantially if robustness is taken into account, see Supplementary Note 8A for explanation. Only recently, several works considered the problem of robustness29,64,65,66,67; however, the degree of robustness of verification protocols has not been analyzed, and the sample complexity of robust verification has not been clarified, although this problem is apparently much simpler than the counterpart in the adversarial scenario.

In this section, we propose a general approach for constructing robust and efficient verification protocols in the i.i.d. scenario and clarify the sample complexity of robust verification. The results presented here can serve as a benchmark for understanding QSV in the adversarial scenario. To streamline the presentation, the proofs of these results [including Propositions 3–6 and Eq. (38)] are relegated to Supplementary Note 8.

Consider a quantum device that is expected to produce the target state \(\left\vert \Psi \right\rangle \in {{{\mathcal{H}}}}\), but actually produces the states σ1, σ2, …, σN in N runs. In the i.i.d. scenario, all these states are identical to the state σ, and the goal of Alice is to verify whether σ is sufficiently close to the target state \(\left\vert \Psi \right\rangle\). If a strategy Ω of the form in Eq. (22) can be constructed for \(\left\vert \Psi \right\rangle\), then our verification protocol runs as follows: Alice applies the strategy Ω to each of the N states, and counts the number of failures. If at most k failures are observed among the N tests, then Alice accepts the states prepared; otherwise, she rejects. Here 0 ≤ k ≤ N − 1 is called the number of allowed failures. The completeness of this protocol is guaranteed because the target state \(\left\vert \Psi \right\rangle\) can never be mistakenly rejected.
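In the i.i.d. scenario, each test fails independently with probability νϵσ, because a state with infidelity ϵσ passes a homogeneous test with probability 1 − νϵσ. The number of failures is therefore binomial. The Monte Carlo sketch below simulates the accept/reject rule just described and compares it with the exact binomial cumulative probability. The parameter values and function names are illustrative choices.

```python
import random
from math import comb

def acceptance_probability(N: int, k: int, fail_prob: float) -> float:
    """Exact probability of at most k failures in N i.i.d. tests (binomial CDF)."""
    return sum(comb(N, i) * fail_prob**i * (1 - fail_prob) ** (N - i) for i in range(k + 1))

def simulate_acceptance(N: int, k: int, fail_prob: float, runs: int, rng: random.Random) -> float:
    """Fraction of simulated runs in which at most k of the N tests fail."""
    accepted = 0
    for _ in range(runs):
        failures = sum(rng.random() < fail_prob for _ in range(N))
        accepted += failures <= k
    return accepted / runs

lam, eps_sigma = 0.5, 0.04            # illustrative values
fail_prob = (1 - lam) * eps_sigma     # nu * eps_sigma with nu = 1 - lambda
N, k = 200, 5
exact = acceptance_probability(N, k, fail_prob)
estimate = simulate_acceptance(N, k, fail_prob, runs=5_000, rng=random.Random(1))
print(f"exact {exact:.4f}, simulated {estimate:.4f}")
```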

Most previous works did not consider the problem of robustness and can reach a meaningful conclusion only when k = 0 (refs. 31,33,36,39,40,41,42,43,44,45), i.e., Alice accepts only if all N tests are passed. However, the requirement of passing all tests is too demanding in a realistic scenario and leads to poor robustness, as clarified in Supplementary Note 8. To remedy this problem, several recent works considered modifications that allow some failures29,64,65,66,67. However, the robustness of such verification protocols has not been analyzed, and the sample complexity of robust verification has not been clarified.

Here we consider robust verification in which at most k failures are allowed. Then the probability of acceptance is given by
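
\(\Pr (\,{\rm{accept}}\,| \,\sigma )={B}_{N,k}(\nu {\epsilon }_{\sigma })=\mathop{\sum }_{i=0}^{k}\binom{N}{i}{(\nu {\epsilon }_{\sigma })}^{i}{(1-\nu {\epsilon }_{\sigma })}^{N-i},\qquad (28)\)
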
where ϵσ ≔ 1 − 〈Ψ∣σ∣Ψ〉 is the infidelity between σ and the target state. Similar to Eq. (11), for 0 < δ ≤ 1 we define the guaranteed infidelity in the i.i.d. scenario as
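
\({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(k,N,\delta ):=\mathop{\max }\limits_{\sigma }\left\{{\epsilon }_{\sigma }\,:\,\Pr (\,{\rm{accept}}\,| \,\sigma )\ge \delta \right\}=\max \left\{\epsilon \in [0,1]\,:\,{B}_{N,k}(\nu \epsilon )\ge \delta \right\},\qquad (29)\)
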
where the first maximization is taken over all states σ on \({{{\mathcal{H}}}}\), and the second equality follows from Eq. (28). By definition, if Alice accepts the state σ, then she can ensure (with significance level δ) that σ has infidelity at most \({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(k,N,\delta )\) with the target state (soundness). Hence, \({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(k,N,\delta )\) characterizes the verification precision in the i.i.d. scenario. The i.i.d. scenario imposes a stronger constraint on the source than the full adversarial scenario. Consequently, the guaranteed infidelity for the former scenario cannot be larger than that for the latter scenario, that is,
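
\({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(k,N,\delta )\le {\bar{\epsilon }}_{\lambda }(k,N,\delta ),\qquad (30)\)
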
as illustrated in Fig. 6.

Here λ = 1/2 and δ = 0.05; the green, blue, and red dots represent \({\bar{\epsilon }}_{\lambda }(\lfloor \nu sN\rfloor ,N,\delta )\) [by Eq. (6) in Supplementary Note 1] for the adversarial scenario, while the magenta, orange, and purple stars represent \({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(\lfloor \nu sN\rfloor ,N,\delta )\) [by Eq. (29)] for the i.i.d. scenario; each horizontal line represents an error rate s.

The following proposition clarifies the monotonicities of \({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(k,N,\delta )\). It is the counterpart of Proposition 1.

Proposition 3

Suppose 0 ≤ λ < 1, 0 < δ ≤ 1, \(k\in {{\mathbb{Z}}}^{\ge 0}\), and \(N\in {{\mathbb{Z}}}^{\ge k+1}\). Then \({\overline{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(k,N,\delta )\) is strictly decreasing in δ and N, but strictly increasing in k.
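For k ≤ N − 1 and λ < 1, the binomial cumulative probability \({B}_{N,k}(\nu \epsilon )\) is continuous and strictly decreasing in ϵ. The guaranteed infidelity in the i.i.d. scenario is then the largest ϵ ∈ [0, 1] with \({B}_{N,k}(\nu \epsilon )\ge \delta\), and bisection finds it. The sketch below follows that reading of Eq. (29) and can be used to probe the monotonicities in Proposition 3 numerically. The tolerance and function names are ours.

```python
from math import comb

def binom_cdf(n: int, k: int, p: float) -> float:
    """B_{n,k}(p): probability of at most k failures in n tests with failure probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(min(k, n) + 1))

def iid_guaranteed_infidelity(k: int, N: int, delta: float, lam: float, tol: float = 1e-12) -> float:
    """Largest eps in [0, 1] with B_{N,k}((1 - lam) * eps) >= delta, found by bisection."""
    nu = 1.0 - lam
    if binom_cdf(N, k, nu) >= delta:       # even infidelity 1 is accepted often enough
        return 1.0
    lo, hi = 0.0, 1.0                      # invariant: B(nu*lo) >= delta > B(nu*hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if binom_cdf(N, k, nu * mid) >= delta:
            lo = mid
        else:
            hi = mid
    return lo

# Proposition 3 in action (lambda = 1/2): the value grows with k.
print(iid_guaranteed_infidelity(0, 50, 0.05, 0.5), iid_guaranteed_infidelity(1, 50, 0.05, 0.5))
```

For k = 0 the condition reduces to (1 − νϵ)^N ≥ δ, which gives the closed form ϵ = (1 − δ^{1/N})/ν. This provides a handy check on the bisection.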

Next, we consider the verification with a fixed error rate in the i.i.d. scenario. Concretely, we set the number of allowed failures k to be proportional to the number of tests, i.e., k = ⌊sνN⌋, where 0 ≤ s < 1 is the error rate, and ν = 1 − λ is the spectral gap of the strategy Ω. The following proposition provides informative bounds for \({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(\lfloor \nu sN\rfloor ,N,\delta )\). It is the counterpart of Theorem 1.

Proposition 4

Suppose 0 < s, λ < 1, 0 < δ ≤ 1/2, and \(N\in {{\mathbb{Z}}}^{\ge 1}\); then

Similar to the behavior of \({\bar{\epsilon }}_{\lambda }(\lfloor \nu sN\rfloor ,N,\delta )\), the guaranteed infidelity \({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(\lfloor \nu sN\rfloor ,N,\delta )\) for the i.i.d. scenario converges to the error rate s as the number N gets large, as illustrated in Fig. 6. To achieve a given infidelity ϵ and significance level δ, which means \({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(\lfloor \nu sN\rfloor ,N,\delta )\le \epsilon\), it suffices to set s < ϵ and choose a sufficiently large N. By virtue of Proposition 4 we can derive the following proposition, which is the counterpart of Theorem 2.
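The convergence of \({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(\lfloor \nu sN\rfloor ,N,\delta )\) to the error rate s can be seen numerically by bisecting on \({B}_{N,k}(\nu \epsilon )\ge \delta\). The sketch below uses λ = 1/2 and δ = 0.05 as in Fig. 6. The value s = 0.1 is an arbitrary example. The binomial sum is computed in log space (via `math.lgamma`) so that N = 10⁴ does not overflow. This is an implementation choice of ours.

```python
from math import exp, floor, lgamma, log

def binom_cdf(n: int, k: int, p: float) -> float:
    """B_{n,k}(p), summed in log space so that large n does not overflow."""
    if p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 1.0 if k >= n else 0.0
    lp, lq = log(p), log(1.0 - p)
    total = sum(
        exp(lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1) + i * lp + (n - i) * lq)
        for i in range(min(k, n) + 1)
    )
    return min(total, 1.0)

def iid_guaranteed_infidelity(k: int, N: int, delta: float, lam: float, tol: float = 1e-10) -> float:
    """Largest eps in [0, 1] with B_{N,k}((1 - lam) * eps) >= delta (bisection)."""
    nu = 1.0 - lam
    if binom_cdf(N, k, nu) >= delta:
        return 1.0
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if binom_cdf(N, k, nu * mid) >= delta:
            lo = mid
        else:
            hi = mid
    return lo

lam, delta, s = 0.5, 0.05, 0.1           # lambda and delta as in Fig. 6; s is an example
nu = 1.0 - lam
values = {}
for N in (100, 1_000, 10_000):
    k = floor(nu * s * N)                # allowed failures grow linearly with N
    values[N] = iid_guaranteed_infidelity(k, N, delta, lam)
    print(N, k, round(values[N], 4))
```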

Proposition 5

Suppose 0 ≤ λ < 1, 0 ≤ s < ϵ < 1, and 0 < δ < 1. If the number of tests N satisfies

then \({\bar{\epsilon }}_{\lambda }^{\,{{{\rm{iid}}}}}(\lfloor \nu sN\rfloor ,N,\delta )\le \epsilon\).

In the rest of this section, we study the sample complexity of robust verification in the i.i.d. scenario. Verifying the target state within infidelity ϵ, significance level δ, and robustness r (with 0 ≤ r < 1) entails the following conditions:

1. (Soundness) If the device prepares i.i.d. states \(\tau \in {{{\mathcal{D}}}}({{{\mathcal{H}}}})\) with infidelity ϵτ > ϵ, then the probability that Alice accepts τ is smaller than δ.

2. (Robustness) If the device prepares i.i.d. states \(\tau \in {{{\mathcal{D}}}}({{{\mathcal{H}}}})\) with infidelity ϵτ ≤ rϵ, then the probability that Alice accepts τ is at least 1 − δ.

Here the condition of robustness is the same as its counterpart in the adversarial scenario, while the condition of soundness is different. In the adversarial scenario, once Alice accepts, only the reduced state on the remaining unmeasured system can be used for applications. The condition of soundness therefore concerns only the fidelity of this state. In the i.i.d. scenario, by contrast, the prepared states are identical and independent, so the condition of soundness concerns the fidelity of each state.

Given the total number N of tests and the number k of allowed failures, then the conditions of soundness and robustness can be expressed as

\({B}_{N,k}(\nu \epsilon )\le \delta ,\qquad {B}_{N,k}(\nu r\epsilon )\ge 1-\delta .\qquad (33)\)

Let \({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\) be the minimum number of tests required for robust verification in the i.i.d. scenario. Then \({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\) is the minimum positive integer N such that Eq. (33) holds for some 0 ≤ k ≤ N − 1, namely,
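
\({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)=\min \left\{N\in {{\mathbb{Z}}}^{\ge 1}\,:\,{B}_{N,k}(\nu \epsilon )\le \delta \ {\rm{and}}\ {B}_{N,k}(\nu r\epsilon )\ge 1-\delta \ {\rm{for\ some}}\ 0\le k\le N-1\right\}.\qquad (34)\)
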
It is determined by νϵ, δ, r, and is the counterpart of \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) in the adversarial scenario.
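The definition of \({N}_{\min }^{{{{\rm{iid}}}}}\) can be evaluated directly by scanning N upward and looking for an admissible k at each N. Because \({B}_{N,k}\) increases with k, the best candidate for soundness is the smallest k that satisfies the robustness condition. The brute-force sketch below is ours and is meant only as a cross-check. Algorithm 2 is the more efficient route.

```python
from math import exp, lgamma, log

def binom_cdf(n: int, k: int, p: float) -> float:
    """B_{n,k}(p), summed in log space so that large n does not overflow."""
    if p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 1.0 if k >= n else 0.0
    lp, lq = log(p), log(1.0 - p)
    total = sum(
        exp(lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1) + i * lp + (n - i) * lq)
        for i in range(min(k, n) + 1)
    )
    return min(total, 1.0)

def n_min_iid_bruteforce(eps: float, delta: float, lam: float, r: float,
                         n_max: int = 100_000) -> tuple[int, int]:
    """Smallest N (with a matching k) meeting soundness and robustness, by direct search."""
    nu = 1.0 - lam
    for N in range(1, n_max + 1):
        # Smallest k meeting robustness; B_{N,k} grows with k, so it is best for soundness.
        k = 0
        while k <= N - 1 and binom_cdf(N, k, nu * r * eps) < 1 - delta:
            k += 1
        if k <= N - 1 and binom_cdf(N, k, nu * eps) <= delta:
            return k, N
    raise ValueError("no admissible N found up to n_max")

print(n_min_iid_bruteforce(eps=0.2, delta=0.05, lam=0.5, r=0.5))
```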

Next, we propose a simple algorithm, Algorithm 2, for computing \({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\), which is useful in practical applications. This algorithm is the counterpart of Algorithm 1 for computing \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\). In addition to the number of tests, Algorithm 2 also determines the corresponding number of allowed failures, denoted by \({k}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\). In Supplementary Note 8F we explain why Algorithm 2 works.

Algorithm 2

Minimum test number for robust verification in the i.i.d. scenario

Input: λ, ϵ, δ ∈ (0, 1) and r ∈ [0, 1).

Output: \({k}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\) and \({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\).

1: if r = 0 then

2: \({k}_{\min }^{{{{\rm{iid}}}}}\leftarrow 0\)

3: else

4: for k = 0, 1, 2, … do

5: Find the largest integer M such that BM,k(νrϵ) ≥ 1 − δ.

6: if M ≥ k + 1 and BM,k(νϵ) ≤ δ then

7: stop

8: end if

9: end for

10: \({k}_{\min }^{{{{\rm{iid}}}}}\leftarrow k\)

11: end if

12: Find the smallest integer N that satisfies \(N\ge {k}_{\min }^{{{{\rm{iid}}}}}+1\) and \({B}_{N,{k}_{\min }^{{{{\rm{iid}}}}}}(\nu \epsilon )\le \delta \).

13: \({N}_{\min }^{{{{\rm{iid}}}}}\leftarrow N\)

14: return \({k}_{\min }^{{{{\rm{iid}}}}}\) and \({N}_{\min }^{{{{\rm{iid}}}}}\).
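The Python transcription of Algorithm 2 below is a sketch. Here \({B}_{M,k}(p)\) is taken to be the probability of at most k failures in M independent tests with failure probability p, consistent with how it enters the soundness and robustness conditions. Steps 5 and 12 are implemented as plain linear scans, which suffices for moderate parameters but is not tuned for speed. The function names and the log-space evaluation of the binomial sum are our own choices.

```python
from math import exp, lgamma, log

def binom_cdf(n: int, k: int, p: float) -> float:
    """B_{n,k}(p), summed in log space so that large n does not overflow."""
    if p <= 0.0:
        return 1.0
    if p >= 1.0:
        return 1.0 if k >= n else 0.0
    lp, lq = log(p), log(1.0 - p)
    total = sum(
        exp(lgamma(n + 1) - lgamma(i + 1) - lgamma(n - i + 1) + i * lp + (n - i) * lq)
        for i in range(min(k, n) + 1)
    )
    return min(total, 1.0)

def algorithm2(eps: float, delta: float, lam: float, r: float) -> tuple[int, int]:
    """Return (k_min^iid, N_min^iid) following the numbered steps of Algorithm 2."""
    nu = 1.0 - lam
    k = 0                                        # steps 1-2 (r = 0) or start of step 4
    if r != 0:
        while True:
            # Step 5: largest M with B_{M,k}(nu*r*eps) >= 1 - delta (B_{k,k} = 1, so M >= k).
            M = k
            while binom_cdf(M + 1, k, nu * r * eps) >= 1 - delta:
                M += 1
            # Step 6: B_{N,k} decreases in N, so some N <= M is sound iff M itself is.
            if M >= k + 1 and binom_cdf(M, k, nu * eps) <= delta:
                break                            # step 7
            k += 1
    # Step 12: smallest N >= k + 1 with B_{N,k}(nu*eps) <= delta.
    N = k + 1
    while binom_cdf(N, k, nu * eps) > delta:
        N += 1
    return k, N

print(algorithm2(eps=0.2, delta=0.05, lam=0.5, r=0.5))
```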

Algorithm 2 is useful for studying how \({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\) varies with λ, δ, ϵ, and r, as illustrated in Fig. 7. When ϵ and r are fixed and δ approaches 0, \({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\) is proportional to \(\ln {\delta }^{-1}\). When δ and r are fixed, \({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\) is approximately inversely proportional to νϵ. Strategies with larger spectral gaps are therefore more efficient in the i.i.d. scenario, in sharp contrast to the adversarial scenario.

At this point it is instructive to compare the minimum number of tests for robust verification in the adversarial scenario with its counterpart in the i.i.d. scenario. Numerical calculation shows that the ratio of \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) over \({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\) is decreasing in λ, as reflected in Fig. 8. For a typical value of λ, say λ = 1/2, this ratio is smaller than 2, so the sample complexity in the adversarial scenario is comparable to that in the i.i.d. scenario. When λ is small, one can construct another strategy with a larger λ by adding the trivial test [see Eq. (6)], which can achieve a higher efficiency in the adversarial scenario. For this reason, the ratio of \({N}_{\min }(\epsilon ,\delta ,\lambda ,r)\) over \({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\) is not so important when λ ≤ 0.3.

The following proposition provides a guideline for choosing appropriate parameters N and k for achieving a given verification precision and robustness.

Proposition 6

Suppose 0 < δ, ϵ, r < 1 and 0 ≤ λ < 1. Then the conditions of soundness and robustness in Eq. (33) hold as long as s ∈ (rϵ, ϵ), k = ⌊νsN⌋, and

For 0 < p, r < 1 we define functions

By virtue of Proposition 6 we can derive the following informative bounds (for 0 < δ, ϵ, r < 1),

These bounds become tighter when the significance level δ approaches 0, as shown in Supplementary Figure 4. The coefficient ξ(r) in the second bound is plotted in Supplementary Figure 5. When r = λ = 1/2 for instance, the second upper bound in Eq. (38) implies that

while numerical calculation shows that \({N}_{\min }^{{{{\rm{iid}}}}}(\epsilon ,\delta ,\lambda ,r)\) is smaller than \(41\,{\epsilon }^{-1}\ln {\delta }^{-1}\) for δ ≥ 10−10 and approaches \(2\,\xi (1/2)\,{\epsilon }^{-1}\ln {\delta }^{-1}\) when δ, ϵ → 0. Therefore, our protocol can enable robust and efficient verification of quantum states in the i.i.d. scenario.

Finally, it is instructive to clarify the relations among QSV in the i.i.d. scenario, the nonadversarial scenario, and the adversarial scenario. In the i.i.d. scenario, the assumptions on the source are the strongest, so QSV is the easiest and the sample cost is the smallest. In the adversarial scenario, by contrast, the assumptions on the source are the weakest, so QSV is the most difficult and the sample cost is the largest. For graph states with a prime local dimension, our analysis above shows that the sample cost in the adversarial scenario is comparable to that in the i.i.d. scenario. Hence the sample costs in all three scenarios are comparable.

Data availability

The data that support the findings of this study are available from the corresponding author upon request.

Code availability

The codes used for numerical analysis are available from the corresponding author upon request.

References

Shor, P. W. Algorithms for quantum computation: discrete logarithms and factoring. In: 1994 IEEE 35th Annual Symposium on Foundations of Computer Science (FOCS) (1994), pp. 124–134.

Nielsen, M. A. & Chuang, I. L. Quantum computation and quantum information (Cambridge University Press, Cambridge, U.K., 2000).

Preskill, J. Quantum computing in the NISQ era and beyond. Quantum 2, 79 (2018).

Fitzsimons, J. F. Private quantum computation: an introduction to blind quantum computing and related protocols. npj Quantum Inf. 3, 23 (2017).

Broadbent, A., Fitzsimons, J. F. & Kashefi, E. Universal blind quantum computation. In: 2009 IEEE 50th Annual Symposium on Foundations of Computer Science (FOCS), pp. 517–526 (2009).

Morimae, T. & Fujii, K. Blind quantum computation protocol in which Alice only makes measurements. Phys. Rev. A 87, 050301(R) (2013).

Mantri, A., Pérez-Delgado, C. A. & Fitzsimons, J. F. Optimal blind quantum computation. Phys. Rev. Lett. 111, 230502 (2013).

Reichardt, B. W., Unger, F. & Vazirani, U. Classical command of quantum systems. Nature 496, 456 (2013).

Barz, S. et al. Demonstration of blind quantum computing. Science 335, 303 (2012).

Barz, S., Fitzsimons, J. F., Kashefi, E. & Walther, P. Experimental verification of quantum computation. Nat. Phys. 9, 727–731 (2013).

Greganti, C., Roehsner, M.-C., Barz, S., Morimae, T. & Walther, P. Demonstration of measurement-only blind quantum computing. New J. Phys. 18, 013020 (2016).

Jiang, Y.-F. et al. Remote blind state preparation with weak coherent pulses in the field. Phys. Rev. Lett. 123, 100503 (2019).

Raussendorf, R. & Briegel, H. J. A one-way quantum computer. Phys. Rev. Lett. 86, 5188 (2001).

Raussendorf, R., Browne, D. E. & Briegel, H. J. Measurement-based quantum computation on cluster states. Phys. Rev. A 68, 022312 (2003).

Briegel, H. J., Dür, W., Raussendorf, R. & Van den Nest, M. Measurement-based quantum computation. Nat. Phys. 5, 19 (2009).

Hayashi, M. & Morimae, T. Verifiable measurement-only blind quantum computing with stabilizer testing. Phys. Rev. Lett. 115, 220502 (2015).

Fujii, K. & Hayashi, M. Verifiable fault tolerance in measurement-based quantum computation. Phys. Rev. A 96, 030301(R) (2017).

Morimae, T., Takeuchi, Y. & Hayashi, M. Verification of hypergraph states. Phys. Rev. A 96, 062321 (2017).

Hayashi, M. & Hajdušek, M. Self-guaranteed measurement-based blind quantum computation. Phys. Rev. A 97, 052308 (2018).

Takeuchi, Y., Mantri, A., Morimae, T., Mizutani, A. & Fitzsimons, J. F. Resource-efficient verification of quantum computing using Serfling’s bound. npj Quantum Inf. 5, 27 (2019).

Xu, Q., Tan, X., Huang, R. & Li, M. Verification of blind quantum computation with entanglement witnesses. Phys. Rev. A 104, 042412 (2021).

Arute, F. et al. Quantum supremacy using a programmable superconducting processor. Nature 574, 505 (2019).

Zhong, H.-S. et al. Quantum computational advantage using photons. Science 370, 1460 (2020).

Gheorghiu, A., Kapourniotis, T. & Kashefi, E. Verification of quantum computation: an overview of existing approaches. Theory Comput. Syst. 63, 715–808 (2019).

Šupić, I. & Bowles, J. Self-testing of quantum systems: a review. Quantum 4, 337 (2020).

Eisert, J. et al. Quantum certification and benchmarking. Nat. Rev. Phys. 2, 382–390 (2020).

Carrasco, J., Elben, A., Kokail, C., Kraus, B. & Zoller, P. Theoretical and experimental perspectives of quantum verification. PRX Quantum 2, 010102 (2021).

Kliesch, M. & Roth, I. Theory of quantum system certification. PRX Quantum 2, 010201 (2021).

Yu, X.-D., Shang, J. & Gühne, O. Statistical methods for quantum state verification and fidelity estimation. Adv. Quantum Technol. 5, 2100126 (2022).

Zhu, H. & Hayashi, M. Efficient verification of pure quantum states in the adversarial scenario. Phys. Rev. Lett. 123, 260504 (2019).

Zhu, H. & Hayashi, M. General framework for verifying pure quantum states in the adversarial scenario. Phys. Rev. A 100, 062335 (2019).

Takeuchi, Y. & Morimae, T. Verification of Many-Qubit states. Phys. Rev. X 8, 021060 (2018).

Zhu, H. & Hayashi, M. Efficient verification of hypergraph states. Phys. Rev. Appl. 12, 054047 (2019).

Li, Z., Zhu, H. & Hayashi, M. Significance improvement by randomized test in random sampling without replacement. Preprint at https://arxiv.org/abs/2211.02399 (2022).

Keet, A., Fortescue, B., Markham, D. & Sanders, B. C. Quantum secret sharing with qudit graph states. Phys. Rev. A 82, 062315 (2010).

Pallister, S., Linden, N. & Montanaro, A. Optimal verification of entangled states with local measurements. Phys. Rev. Lett. 120, 170502 (2018).

Gheorghiu, A., Kashefi, E. & Wallden, P. Robustness and device independence of verifiable blind quantum computing. New J. Phys. 17, 083040 (2015).

Gočanin, A., Šupić, I. & Dakić, B. Sample-efficient device-independent quantum state verification and certification. PRX Quantum 3, 010317 (2022).

Hayashi, M., Matsumoto, K. & Tsuda, Y. A study of LOCC-detection of a maximally entangled state using hypothesis testing. J. Phys. A: Math. Gen. 39, 14427 (2006).

Hayashi, M. Group theoretical study of LOCC-detection of maximally entangled state using hypothesis testing. New J. Phys. 11, 043028 (2009).

Zhu, H. & Hayashi, M. Optimal verification and fidelity estimation of maximally entangled states. Phys. Rev. A 99, 052346 (2019).

Li, Z., Han, Y.-G. & Zhu, H. Efficient verification of bipartite pure states. Phys. Rev. A 100, 032316 (2019).

Wang, K. & Hayashi, M. Optimal verification of two-qubit pure states. Phys. Rev. A 100, 032315 (2019).

Li, Z., Han, Y.-G. & Zhu, H. Optimal verification of Greenberger-Horne-Zeilinger states. Phys. Rev. Appl. 13, 054002 (2020).

Li, Z., Han, Y.-G., Sun, H.-F., Shang, J. & Zhu, H. Verification of phased Dicke states. Phys. Rev. A 103, 022601 (2021).

Liu, Y.-C., Li, Y., Shang, J. & Zhang, X. Verification of arbitrary entangled states with homogeneous local measurements. Adv. Quantum Technol. 6, 2300083 (2023).

Hajdušek, M., Pérez-Delgado, C. A. & Fitzsimons, J. F. Device-independent verifiable blind quantum computation. Preprint at https://arxiv.org/abs/1502.02563 (2015).

Coladangelo, A., Grilo, A. B., Jeffery, S. & Vidick, T. Verifier-on-a-Leash: new schemes for verifiable delegated quantum computation, with quasilinear resources. In: Annual international conference on the theory and applications of cryptographic techniques, pp. 247–277 (Springer, 2019).

Aharonov, D., Ben-Or, M. & Eban, E. Interactive proofs for quantum computations. In: Innovations in computer science (ICS), pp. 453–469 (Tsinghua University Press, 2010).

Broadbent, A. How to verify a quantum computation. Theory Comput. 14, 09 (2015).

Fitzsimons, J. F. & Kashefi, E. Unconditionally verifiable blind quantum computation. Phys. Rev. A 96, 012303 (2017).

Mahadev, U. Classical verification of quantum computations. In: 2018 IEEE 59th Annual Symposium on Foundations of Computer Science (FOCS), pp. 259–267 (2018).

Gheorghiu, A. & Vidick, T. Computationally-secure and composable remote state preparation. In: 2019 IEEE 60th Annual Symposium on Foundations of Computer Science (FOCS), pp. 1024–1033 (2019).

Bartusek, J. et al. Succinct classical verification of quantum computation. In: Advances in Cryptology - CRYPTO 2022: 42nd Annual International Cryptology Conference, pp. 195–211 (Springer, 2022).

Zhang, J. Classical verification of quantum computations in linear time. In: 2022 IEEE 63rd Annual Symposium on Foundations of Computer Science (FOCS), pp. 46–57 (2022).

Yu, X.-D., Shang, J. & Gühne, O. Optimal verification of general bipartite pure states. npj Quantum Inf. 5, 112 (2019).

Dangniam, N., Han, Y.-G. & Zhu, H. Optimal verification of stabilizer states. Phys. Rev. Res. 2, 043323 (2020).

Hayashi, M. & Takeuchi, Y. Verifying commuting quantum computations via fidelity estimation of weighted graph states. New J. Phys. 21, 093060 (2019).

Liu, Y.-C., Yu, X.-D., Shang, J., Zhu, H. & Zhang, X. Efficient verification of Dicke states. Phys. Rev. Appl. 12, 044020 (2019).

Zhu, H., Li, Y. & Chen, T. Efficient verification of ground states of frustration-free Hamiltonians. Preprint at https://arxiv.org/abs/2206.15292 (2022).

Chen, T., Li, Y. & Zhu, H. Efficient verification of Affleck-Kennedy-Lieb-Tasaki states. Phys. Rev. A 107, 022616 (2023).

Liu, Y.-C., Shang, J. & Zhang, X. Efficient verification of entangled continuous-variable quantum states with local measurements. Phys. Rev. Res. 3, L042004 (2021).

Miguel-Ramiro, J., Riera-Sàbat, F. & Dür, W. Collective operations can exponentially enhance quantum state verification. Phys. Rev. Lett. 129, 190504 (2022).

Zhang, W.-H. et al. Experimental optimal verification of entangled states using local measurements. Phys. Rev. Lett. 125, 030506 (2020).

Zhang, W.-H. et al. Classical communication enhanced quantum state verification. npj Quantum Inf. 6, 103 (2020).

Jiang, X. et al. Towards the standardization of quantum state verification using optimal strategies. npj Quantum Inf. 6, 90 (2020).

Xia, L. et al. Experimental optimal verification of three-dimensional entanglement on a silicon chip. New J. Phys. 24, 095002 (2022).

Acknowledgements

The work at Fudan is supported by the National Natural Science Foundation of China (Grants no. 92165109 and no. 11875110), the National Key Research and Development Program of China (Grant no. 2022YFA1404204), and Shanghai Municipal Science and Technology Major Project (Grant no. 2019SHZDZX01). MH is supported in part by the National Natural Science Foundation of China (Grants no. 62171212 and no. 11875110) and Guangdong Provincial Key Laboratory (Grant no. 2019B121203002).

Author information

Contributions

H.Z. and M.H. proposed this project. Z.L., H.Z., and M.H. derived the theoretical results. Z.L. performed numerical calculations and plotted the figures. Z.L., H.Z., and M.H. wrote the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Robust and efficient verification of graph states in blind measurement-based quantum computation: Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Li, Z., Zhu, H. & Hayashi, M. Robust and efficient verification of graph states in blind measurement-based quantum computation. npj Quantum Inf 9, 115 (2023). https://doi.org/10.1038/s41534-023-00783-9

DOI: https://doi.org/10.1038/s41534-023-00783-9