Abstract

The detection of the accelerated expansion of the Universe has been one of the major breakthroughs in modern cosmology. Several cosmological probes (Cosmic Microwave Background, Supernovae Type Ia, Baryon Acoustic Oscillations) have been studied in depth to better understand the nature of the mechanism driving this acceleration, and they are currently being pushed to their limits, yielding remarkable constraints that allowed us to shape the standard cosmological model. At the same time, however, the percent precision achieved has recently revealed apparent tensions between measurements obtained from different methods. These may indicate either unaccounted-for systematic effects or new physics. Following the development of CMB, SNe, and BAO cosmology, it is critical to extend our selection of cosmological probes. Novel probes can be exploited to validate results, to control or mitigate systematic effects, and, most importantly, to increase the accuracy and robustness of our results. This review is meant to provide a state-of-the-art benchmark of the latest advances in emerging “beyond-standard” cosmological probes. We present how several different methods can become a key resource for observational cosmology. In particular, we review cosmic chronometers, quasars, gamma-ray bursts, standard sirens, lensing time-delay with galaxies and clusters, cosmic voids, neutral hydrogen intensity mapping, surface brightness fluctuations, stellar ages of the oldest objects, secular redshift drift, and clustering of standard candles. The review describes the method, systematics, and results of each probe in a homogeneous way, giving the reader a clear picture of the innovative methods introduced in recent years and of how to apply them. The review also discusses the potential synergies and complementarities between the various probes, exploring how they will contribute to the future of modern cosmology.


1 Introduction

The discovery of the accelerated expansion of the Universe (Perlmutter et al. 1998, 1999; Riess et al. 1998) has been one of the major breakthroughs in modern cosmology, and in physics in general. The framework established in the previous century, in which the entire evolution of the Universe was thought to be dominated by matter and radiation, had to be revised to make room either for a new form of energy with negative pressure responsible for this acceleration (named dark energy) or, alternatively, for some departure from general relativity on very large scales. Driven by these pioneering results, in the following decades the scientific and technical efforts of the community were devoted to developing methods to measure and characterize this accelerated expansion, and to building large facilities providing massive datasets to be analyzed. In this process, a few of these methods, also referred to as cosmological probes, have become standard approaches in cosmological analyses, given the large efforts invested in measurements, theoretical analyses, systematics characterization, and funding.

A comprehensive review of these methods is provided in Huterer and Shafer (2018). Here we just recall that most of these approaches are based on the determination of some standard properties of astrophysical objects that can be used to calibrate observations and measure the expansion history of the Universe. In particular, it was discovered that the peculiar physical characteristics of some objects allow us to infer their absolute luminosity a priori, making them standard (or standardizable) candles whose luminosity distance can then be measured. Locally, it was found that some variable stars (Cepheids, RR Lyrae) have a period of variability that can be used to determine their absolute luminosity precisely; detached eclipsing binaries have also been used as local distance indicators to determine the distance to the Large Magellanic Cloud (LMC) to better than 1% (Pietrzyński et al. 2019). At larger distances, it was discovered that stars at the Tip of the Red Giant Branch (TRGB), easily identifiable in the upper part of the Hertzsprung–Russell diagram, can also be used as standard candles, having an almost constant I-band magnitude (Lee et al. 1993). Finally, at cosmological distances, Type Ia Supernovae (SNe) have been found to be ideal standardizable candles, since their peak luminosity correlates tightly with the decline rate of their light curve (Phillips 1993); this allows a proper calibration and lets us probe the Universe with precise distance indicators up to \(z\sim 1.5\). Similarly, the analysis of large-scale structures in the Universe highlighted, among other features, the presence of correlated over-densities in the matter distribution at a specific separation of \(r\sim 100\) Mpc/h. This effect, known as Baryon Acoustic Oscillations (BAO), was clearly seen both as wiggles in the power spectrum of galaxies and as a peak in the two-point correlation function (Percival et al. 2001; Cole et al. 2005; Eisenstein et al. 2005), and can be interpreted as the imprint of the sound horizon on the primordial fluctuations of the photon–baryon fluid present in the very early Universe. These oscillations have in particular been used as a standard ruler to study the expansion history of the Universe. While BAO are the most direct probe of the expansion history from large-scale structure, the massive galaxy and quasar surveys that have enabled the success of BAO also contain additional signals of great cosmological interest. These analyses are also well established and, while they might not yet have reached their full potential, they do not qualify as “emerging”.

As a parallel effort, the observation and study of the first light emitted in the Universe, the Cosmic Microwave Background (CMB) radiation, carried out with several ground- and space-based missions (Smoot et al. 1992; Bennett et al. 2003; Planck Collaboration et al. 2014b; Swetz et al. 2011; Carlstrom et al. 2011), gave us a privileged view of the early Universe, providing fundamental insights into the formation processes and the main components at those early times. In addition, other cosmological probes have been widely used in the past decades to constrain the expansion of the Universe and the evolution of the matter within it. Among the most important ones, we mention here weak gravitational lensing (see, e.g., Bartelmann and Schneider 2001) and the properties of massive clusters of galaxies, in particular cluster counts (see, e.g., Allen et al. 2011). Weak gravitational lensing, while being a younger field than CMB or galaxy surveys, has matured tremendously in the past two decades; efforts in this direction have recently culminated in the DES analysis (Dark Energy Survey Collaboration et al. 2016; Abbott et al. 2022), and weak lensing is one of the science drivers of future surveys such as the Legacy Survey of Space and Time (LSST) at the Vera Rubin Observatory.

While CMB, BAO, SNe, and the other previously mentioned probes have gained increasing interest in the cosmological community over these years, it also soon became clear that a single probe is not sufficient to constrain accurately and precisely the properties of the components of the Universe. Each probe has its own strengths and weaknesses: it is sensitive to specific combinations of cosmological parameters, to specific physical processes, and to a specific range of cosmic time, and it is affected by a specific set of systematics. In the end, the only way forward in our knowledge of the Universe lies in combining complementary cosmological probes, which allows us to break degeneracies between parameters and to keep systematic effects under control (see, e.g., Scolnic et al. 2018). This point was first clearly highlighted in the Dark Energy Task Force report (Albrecht et al. 2006), and since then the scientific community has moved in that direction, also with space missions specifically designed to take advantage of the synergy between different probes.

With the development of these cosmological probes, the era of precision cosmology soon began: advances in instrumental technology, supported by a more mature assessment and reduction of systematic uncertainties and by an increasing volume of data, led to percent and sub-percent measurements of cosmological parameters. However, instead of eventually settling all the questions related to the nature of the accelerated expansion of our Universe and of its constituents, this newly achieved accuracy actually opened Pandora’s box even further. One of the most pressing issues is that the Hubble constant \(H_0\) as determined from early-Universe probes (CMB) appears to be in significant disagreement with the estimates provided by late-Universe probes (Cepheids, TRGB, masers, ...). Many analyses have addressed whether this might be due to systematics hidden in either measurement but, as of the current status, this seems disfavored (Riess et al. 2011, 2016; Bernal et al. 2016; Di Valentino et al. 2016; Efstathiou 2020; Riess et al. 2020; Di Valentino et al. 2021; Efstathiou 2021; Riess et al. 2021; Dainotti et al. 2021; Riess et al. 2022). At the same time, smaller and less statistically significant differences have started to arise in other cosmological parameters as estimated from early- and late-Universe probes, such as the tension in the estimates of the matter density parameter \(\varOmega _{\mathrm{m}}\) and of \(\sigma _8\), the rms amplitude of matter fluctuations on a scale of 8\(\, h^{-1} \, \mathrm{Mpc}\), often summarized in the quantity \(S_8\equiv \sigma _8\sqrt{\varOmega _{\mathrm{m}}/0.3}\) (Heymans et al. 2013; MacCrann et al. 2015; Joudaki et al. 2017; Hildebrandt et al. 2017; Asgari et al. 2020; Park and Rozo 2020; Joudaki et al. 2020; Tröster et al. 2021; Asgari et al. 2021; Heymans et al. 2021; Amon et al. 2022; Secco et al. 2022; Abbott et al. 2022). Overall, these results point toward significant discrepancies, reaching 4–5\(\sigma \) in the case of \(H_0\); if confirmed and not attributable to problems with the data, they may open the road to new physics able to explain such discrepancies in the measurement of the same quantity at different cosmic times.

Now that the precision of many standard cosmological probes is close to its maximum, given the current analyses and those planned for the near future, a way to take a step forward in our understanding of the Universe is to look for new independent cosmological probes (as also highlighted by Verde et al. 2019; Di Valentino et al. 2021), which could either confirm the discrepancies found, pointing us toward the need for new models, or refute them, helping us to better understand possible systematics or unknown unknowns. Moreover, when different probes are combined, their synergy and complementarity can also help to reduce the uncertainty on cosmological parameters. In general, the diversity of methods will not only enrich the range of ways to study our Universe, but may also open new observational and theoretical windows, as happened in the past with the study of CMB, SNe, and BAO.

This is an exciting time for cosmology, and in this review we aim to provide a state-of-the-art overview of the new emerging cosmological probes, discussing how to apply them, the systematics involved, the measurements obtained, and forecasts of how they could contribute to understanding the evolution of the Universe. In particular, we will review cosmic chronometers, quasars, gamma-ray bursts, gravitational waves as standard sirens, time-delay cosmography, cluster strong lensing, cosmic voids, neutral hydrogen intensity mapping, surface brightness fluctuations, stellar ages, secular redshift drift, and clustering of standard candles. In Sect. 2 we provide a general overview of the basic notation and fundamental equations assumed in the review, in Sect. 3 we discuss each emerging cosmological probe separately, in Sect. 4 we discuss the synergy and complementarity between the various cosmological probes described, and in Sect. 5 we draw our conclusions.

2 Notations and fundamental equations

One of the main assumptions in modern cosmology is the cosmological principle, which describes our Universe at very large scales based on two main premises: homogeneity (the Universe is the same at every position) and isotropy (there is no preferred spatial direction). Under this principle, the space-time metric can be described by the Friedmann–Lemaître–Robertson–Walker (FLRW) metric:
\[ ds^2 = c^2 dt^2 - a^2(t)\left[ \frac{dr^2}{1-kr^2} + r^2\left( d\theta ^2 + \sin ^2\theta \, d\phi ^2\right) \right] , \tag{1} \]
where a(t) is the scale factor, which describes how the universe expands by relating physical and comoving distances as \(R(t)=a(t) r\), c is the speed of light, \(\theta \) and \(\phi \) are the angles of the spherical coordinates, and k is the parameter describing the curvature of space; in particular, \(k=0\) corresponds to a flat universe with Euclidean geometry, \(k>0\) to a closed universe with spherical geometry, and \(k<0\) to an open universe with hyperbolic geometry. Within an FLRW metric, normalizing the scale factor to unity today, it is also possible to relate the scale factor to the redshift z:
\[ 1+z = \frac{1}{a(t)}. \tag{2} \]
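If we define the expansion rate of the universe H(t) as the rate at which the scale factor evolves with time, \(H(t)\equiv \left( \frac{{\dot{a}}}{a}\right) \), we can describe how it evolves with cosmic time t through the Friedmann equations:
\[ H^2(t) = \left( \frac{\dot{a}}{a}\right) ^2 = \frac{8\pi G}{3}\rho - \frac{kc^2}{a^2} + \frac{\Lambda }{3}, \tag{3} \]
\[ \frac{\ddot{a}}{a} = -\frac{4\pi G}{3}\left( \rho + \frac{3p}{c^2}\right) + \frac{\Lambda }{3}, \tag{4} \]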
where G is the gravitational constant, \(\rho \) and p are the total energy density and pressure, \(\Lambda \) is the cosmological constant, and the dot indicates a derivative with respect to time. Historically, the critical density producing a flat universe has been defined by setting \(k=0\) in Eq. (3) in the absence of a \(\Lambda \) term, obtaining \(\rho _\mathrm{crit}=\frac{3H^2}{8\pi G}\). This quantity has proven extremely useful to define dimensionless density parameters for the various constituents of the universe as \(\varOmega _i=\frac{\rho _i}{\rho _\mathrm{crit}}\). This allows us to write the total energy density of the universe as the sum of the contributions of its various components, namely matter and radiation; analogously, considering the other terms on the right-hand side of Eq. (3), we can define a density parameter for the curvature, \(\varOmega _k\equiv -\frac{kc^2}{a^2H^2}\), and for dark energy (in the case of a cosmological constant), \(\varOmega _{{\Lambda }}\equiv \frac{\varLambda }{3H^2}\). In this way, we have:
\[ \varOmega _{\mathrm{m}}(z) + \varOmega _{\mathrm{r}}(z) + \varOmega _k(z) + \varOmega _{\Lambda }(z) = 1, \tag{5} \]
where the density parameters are here defined at any given time, i.e., as functions of redshift z. In this context, it is also useful to define the equation of state (EoS) parameter of a generic component as the ratio w relating its pressure and density, \(w=p/\rho \). In general, we can express the evolution of the energy density as:
\[ \rho (z) = \rho _0 \exp \left[ 3\int _0^z \frac{1+w(z')}{1+z'}\, dz'\right] . \tag{6} \]
While the EoS could depend on time, we recall here that different components have different EoS parameters, namely \(w=1/3\) for radiation, \(w=0\) for matter, and \(w=-1\) for the term we referred to as dark energy (in the case of a cosmological constant). If we consider Eq. (6) in the case of a constant \(w_i\), it simplifies to:
\[ \rho _i(z) = \rho _{i,0}(1+z)^{3(1+w_i)}. \tag{7} \]
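Combining Friedmann equations (3) and (4) with Eqs. (5) and (7), it is possible to express the expansion rate of the universe as a function of the evolution with redshift of its main components:
\[ H(z) = H_0\left[ \varOmega _{\mathrm{m}}(1+z)^3 + \varOmega _k(1+z)^2 + \varOmega _{\mathrm{de}}(1+z)^{3(1+w)}\right] ^{1/2}, \tag{8} \]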
where each component evolves with a different power of \((1+z)\) due to its different EoS parameter; here, we have implicitly taken the density parameters to be constants, equal to their present-day values \(\varOmega _{i,0}\). We will adopt this convention throughout the review, unless otherwise specified. In Eq. (8) we also introduced the dark energy density parameter \(\varOmega _{\mathrm{de}}\), since in this case its EoS parameter is allowed to take a generic value w. While, in principle, one could also take into account the contribution of radiation \(\varOmega _{\mathrm{r}}\), which scales as \((1+z)^4\), this term is typically not considered given the current constraint \(\varOmega _\mathrm{r}\sim 2.47\times 10^{-5}h^{-2}\) (Fixsen 2009), and in the following we will neglect it.
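As a quick numerical illustration of the definitions above, the sketch below evaluates \(\rho _\mathrm{crit}=3H_0^2/(8\pi G)\) in SI units, converts a physical density into a density parameter, and evaluates the radiation term quoted in the text for a given h. It is a minimal sketch: the constants are rounded and the helper names (critical_density, density_parameter, omega_radiation) are hypothetical, not taken from any cosmology library.

```python
import math

G_SI = 6.674e-11        # gravitational constant [m^3 kg^-1 s^-2] (rounded)
MPC_M = 3.0856776e22    # one megaparsec in metres


def critical_density(h0):
    """rho_crit = 3 H^2 / (8 pi G) in kg/m^3, for H given in km/s/Mpc."""
    h_si = h0 * 1e3 / MPC_M                     # km/s/Mpc -> 1/s
    return 3.0 * h_si**2 / (8.0 * math.pi * G_SI)


def density_parameter(rho, h0):
    """Dimensionless density parameter Omega_i = rho_i / rho_crit."""
    return rho / critical_density(h0)


def omega_radiation(h, omega_r_h2=2.47e-5):
    """Radiation density parameter from the constraint Omega_r ~ 2.47e-5 h^-2."""
    return omega_r_h2 / h**2


print(f"rho_crit(H0 = 70 km/s/Mpc) = {critical_density(70.0):.2e} kg/m^3")
print(f"Omega_r(h = 0.7) = {omega_radiation(0.7):.2e}")
```

For \(H_0=70\) km/s/Mpc this gives \(\rho _\mathrm{crit}\approx 9.2\times 10^{-27}\) kg/m\(^3\) and \(\varOmega _\mathrm{r}\approx 5\times 10^{-5}\), which illustrates why radiation can be neglected in Eq. (8) at low redshift.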

So far, we have considered dark energy with a constant EoS parameter w; however, more generally, we can allow it to vary with cosmic time, as different cosmological models would actually suggest. The most widely used way to parameterize this evolution is the Chevallier–Polarski–Linder (CPL) parameterization (Chevallier and Polarski 2001; Linder 2003), where:
\[ w(z) = w_0 + w_a\frac{z}{1+z}. \tag{9} \]
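Considering Eqs. (6) and (9), we can therefore generalize Eq. (8) as follows:
\[ H(z) = H_0\left[ \varOmega _{\mathrm{m}}(1+z)^3 + \varOmega _k(1+z)^2 + \varOmega _{\mathrm{de}}(1+z)^{3(1+w_0+w_a)}\exp \left( -\frac{3w_a z}{1+z}\right) \right] ^{1/2}. \tag{10} \]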
From this more general formulation, where most of the cosmological parameters are free to vary (which we will refer to as the open \(w_{0}w_{a}\)CDM model, o\(w_{0}w_{a}\)CDM), it is possible to derive more specific cases. If we fix the curvature of the universe to be flat (\(\varOmega _k=0\)), we obtain a flat \(w_{0}w_{a}\)CDM model (f\(w_{0}w_{a}\)CDM); if we also fix the time evolution of the dark energy EoS to zero (\(w_{a}=0\)), we obtain a flat wCDM model (fwCDM); finally, if we assume the dark energy EoS to be constant and equal to \(w=-1\), we obtain the standard \(\Lambda \)CDM model. In this context, it is also useful to define the normalized Hubble parameter as:
\[ E(z) \equiv \frac{H(z)}{H_0}. \tag{11} \]
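The chain of nested models above can be made concrete with a short sketch of E(z) for the o\(w_{0}w_{a}\)CDM case. It assumes, as in the text, that radiation is neglected and that curvature is fixed by closure, \(\varOmega _k=1-\varOmega _{\mathrm{m}}-\varOmega _{\mathrm{de}}\); it also cross-checks the closed-form CPL factor of Eq. (10) against a direct numerical integration of Eq. (6). The function names are illustrative only, and the Simpson integrator is a plain textbook implementation chosen to keep the sketch dependency-free.

```python
import math


def simpson(f, a, b, n=200):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    n += n % 2
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


def w_cpl(z, w0, wa):
    """CPL dark energy equation of state, Eq. (9)."""
    return w0 + wa * z / (1.0 + z)


def de_scaling(z, w0, wa):
    """rho_de(z) / rho_de(0): closed form from inserting Eq. (9) into Eq. (6)."""
    return (1.0 + z) ** (3.0 * (1.0 + w0 + wa)) * math.exp(-3.0 * wa * z / (1.0 + z))


def de_scaling_numeric(z, w0, wa):
    """Same ratio from a direct numerical integration of Eq. (6), as a cross-check."""
    integrand = lambda zp: (1.0 + w_cpl(zp, w0, wa)) / (1.0 + zp)
    return math.exp(3.0 * simpson(integrand, 0.0, z))


def E(z, om, ode, w0=-1.0, wa=0.0):
    """Normalized Hubble parameter E(z) = H(z)/H0 of Eqs. (10)-(11).

    Omega_k is fixed by closure, 1 - Omega_m - Omega_de (radiation neglected).
    Nested cases: om + ode = 1 gives flat models, wa = 0 gives wCDM,
    and w0 = -1, wa = 0 gives LambdaCDM.
    """
    ok = 1.0 - om - ode
    zp1 = 1.0 + z
    return math.sqrt(om * zp1**3 + ok * zp1**2 + ode * de_scaling(z, w0, wa))


print(E(1.0, 0.3, 0.7))             # flat LambdaCDM
print(E(1.0, 0.3, 0.7, -0.9, 0.3))  # flat w0waCDM
```

Setting \(w_0=-1\), \(w_a=0\) makes de_scaling identically 1, so the same function reproduces the \(\Lambda \)CDM expansion rate without a separate code path.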
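The previously discussed equations describe how the cosmological background evolves. From these, we can introduce several additional quantities that are extremely relevant in describing astrophysical phenomena, namely distances and times. Following Huterer and Shafer (2018), the (transverse) comoving distance \(D_{\mathrm{M}}(z)\) can be defined as:
\[ D_{\mathrm{M}}(z) = \begin{cases} \dfrac{c}{H_0}\dfrac{1}{\sqrt{\varOmega _k}}\sinh \left[ \sqrt{\varOmega _k}\displaystyle \int _0^z \frac{dz'}{E(z')}\right] & \text{if } \varOmega _k>0,\\ \dfrac{c}{H_0}\displaystyle \int _0^z \frac{dz'}{E(z')} & \text{if } \varOmega _k=0,\\ \dfrac{c}{H_0}\dfrac{1}{\sqrt{|\varOmega _k|}}\sin \left[ \sqrt{|\varOmega _k|}\displaystyle \int _0^z \frac{dz'}{E(z')}\right] & \text{if } \varOmega _k<0. \end{cases} \tag{12} \]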
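In the case of a standard flat \(\Lambda \)CDM cosmology, this equation simplifies significantly to:
\[ D_{\mathrm{M}}(z) = \frac{c}{H_0}\int _0^z \frac{dz'}{\sqrt{\varOmega _{\mathrm{m}}(1+z')^3 + 1 - \varOmega _{\mathrm{m}}}}. \tag{13} \]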
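From this equation, we can define two fundamental quantities in astrophysics, namely the luminosity distance \(D_{\mathrm{L}}(z)\) and the angular diameter distance \(D_{\mathrm{A}}(z)\), as:
\[ D_{\mathrm{L}}(z) = (1+z)\, D_{\mathrm{M}}(z), \qquad D_{\mathrm{A}}(z) = \frac{D_{\mathrm{M}}(z)}{1+z}, \tag{14} \]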
where we have assumed that the Etherington relation holds, and therefore we rely on assumptions such as a metric theory of gravity and photon number conservation. Similarly, using the definition of H(t) and Eq. (2), we can write:
\[ dt = -\frac{dz}{(1+z)H(z)}, \tag{15} \]
and by integrating it we obtain the age of the universe as a function of redshift:
\[ t(z) = \int _z^{\infty } \frac{dz'}{(1+z')H(z')}. \tag{16} \]
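The distance definitions above can be evaluated numerically with a few lines of code. The sketch below is a minimal, dependency-free illustration that assumes, for brevity, a \(\Lambda \)CDM model with free curvature (w = -1, radiation neglected); the function names are hypothetical, and a production analysis would normally rely on a tested cosmology library instead.

```python
import math

C_KM_S = 299792.458  # speed of light [km/s]


def simpson(f, a, b, n=1000):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    n += n % 2
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


def E(z, om, ol):
    """E(z) for a (possibly curved) LambdaCDM model, radiation neglected."""
    ok = 1.0 - om - ol
    return math.sqrt(om * (1.0 + z) ** 3 + ok * (1.0 + z) ** 2 + ol)


def comoving_distance(z, h0, om, ol):
    """Transverse comoving distance D_M(z) of Eq. (12), in Mpc."""
    d_h = C_KM_S / h0                                       # Hubble distance c/H0
    chi = simpson(lambda zp: 1.0 / E(zp, om, ol), 0.0, z)   # in units of c/H0
    ok = 1.0 - om - ol
    if abs(ok) < 1e-10:                                     # flat
        return d_h * chi
    sq = math.sqrt(abs(ok))
    if ok > 0:                                              # open, hyperbolic
        return d_h * math.sinh(sq * chi) / sq
    return d_h * math.sin(sq * chi) / sq                    # closed, spherical


def luminosity_distance(z, h0, om, ol):
    """D_L = (1+z) D_M, Eq. (14)."""
    return (1.0 + z) * comoving_distance(z, h0, om, ol)


def angular_diameter_distance(z, h0, om, ol):
    """D_A = D_M / (1+z), Eq. (14)."""
    return comoving_distance(z, h0, om, ol) / (1.0 + z)


print(f"D_L(z=1) = {luminosity_distance(1.0, 70.0, 0.3, 0.7):.0f} Mpc")
```

For \(H_0=70\) km/s/Mpc and \(\varOmega _{\mathrm{m}}=0.3\) in a flat universe this gives \(D_{\mathrm{L}}(z=1)\approx 6.6\) Gpc; by construction, the ratio \(D_{\mathrm{L}}/D_{\mathrm{A}}\) equals \((1+z)^2\), as required by the Etherington relation.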
3 Cosmology with emerging cosmological probes

All the emerging cosmological probes are presented following a common scheme: at the beginning of each section we introduce the basic idea of the method and its main equations, describe how to optimally select each probe, discuss how it can be (and has been) applied, review the current state of the art of the measurements, and provide forecasts on how the method is expected to improve its performance in the near future. In particular, a fundamental part is dedicated to the systematics involved in each probe, discussing how they impact the measurements and possible strategies to handle and mitigate them.

3.1 Cosmic chronometers

The age of the Universe has been an important (derived) cosmological parameter, being closely related to the Hubble constant and to the background parameters governing the Universe’s expansion history. Determinations of the age of the Universe today from the age of old cosmological objects at \(z\sim 0\) (see, e.g., the reviews by Catelan 2018; Soderblom 2010; Vandenberg et al. 1996 and recent determinations by O’Malley et al. 2017; Valcin et al. 2020, 2021) and of the look-back time at higher redshifts (Dunlop et al. 1996; Spinrad et al. 1997) have been very influential in the establishment of the (now) standard cosmological model.

The age of the Universe or the look-back time, being an integrated quantity of H(z), has some limitations (both in terms of statistical power and in terms of susceptibility to systematics) that the cosmic chronometers approach attempts to overcome.
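To make concrete why the age and the look-back time are integrated quantities of H(z), the sketch below evaluates Eq. (16) numerically. It assumes a \(\Lambda \)CDM model with free curvature and no radiation; to avoid the infinite upper limit it changes variable to \(a=1/(1+z)\) and then \(a=u^2\), which turns Eq. (16) into an integral over a smooth, finite range. The function names and unit constants are illustrative choices, not part of the original text.

```python
import math

MPC_KM = 3.0856776e19   # 1 Mpc in km
GYR_S = 3.15576e16      # 1 Gyr (Julian years) in s


def simpson(f, a, b, n=2000):
    """Composite Simpson rule on [a, b]; n is rounded up to an even number."""
    n += n % 2
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


def age_gyr(z, h0, om, ol):
    """Age t(z) of Eq. (16) in Gyr for LambdaCDM with curvature (radiation neglected).

    With a = 1/(1+z) and a = u^2, Eq. (16) becomes
    t = (1/H0) * int_0^{sqrt(a)} 2 u^2 du / sqrt(om + ok u^2 + ol u^6),
    whose integrand is smooth at the Big Bang (u = 0).
    """
    ok = 1.0 - om - ol
    hubble_time = MPC_KM / GYR_S / h0            # 1/H0 in Gyr
    u_max = (1.0 + z) ** -0.5
    f = lambda u: 2.0 * u * u / math.sqrt(om + ok * u * u + ol * u**6)
    return hubble_time * simpson(f, 0.0, u_max)


def lookback_time_gyr(z, h0, om, ol):
    """Look-back time: age today minus age at redshift z."""
    return age_gyr(0.0, h0, om, ol) - age_gyr(z, h0, om, ol)


print(f"t0 = {age_gyr(0.0, 70.0, 0.3, 0.7):.2f} Gyr")
print(f"t_lb(z=1) = {lookback_time_gyr(1.0, 70.0, 0.3, 0.7):.2f} Gyr")
```

Because each value is an integral over the whole history from the Big Bang to z, a change in H(z) over a narrow redshift interval is diluted in the age, which is the limitation that the differential approach below tries to overcome.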

3.1.1 Basic idea and equations

The accurate determination of the expansion rate of the Universe, or Hubble parameter H(z), has become in recent years one of the main drivers of modern cosmology, since it can provide fundamental information about the energy content of the Universe and about the main physical mechanisms driving its current acceleration. Its measurement is, however, very challenging: while many works have focused on estimating its local value at \(z=0\) (the Hubble constant \(H_0\), see Sect. 2), we currently have only a few determinations of H(z), mostly based on a handful of methods (e.g., on the detection of the BAO signal in the clustering of galaxies and quasars, or on the analysis of SN data, see Font-Ribera et al. 2014; Delubac et al. 2015; Alam et al. 2017; Riess et al. 2018; Scolnic et al. 2018; Bautista et al. 2021; Hou et al. 2021; Raichoor et al. 2021; Riess et al. 2021). These measurements, while having their own strengths, rely on the adoption of a cosmological scenario (e.g., the assumption of flatness), on early-Universe physics (in the case of BAO), and on the calibration of the cosmic distance ladder (in the case of SNe); without these assumptions, these probes yield the normalized expansion rate E(z) rather than H(z).

In this context, it is very important to explore alternative ways to determine the Hubble parameter, which can be compared, and eventually combined, with other determinations. The cosmic chronometers method is a novel cosmological probe able to provide a direct and cosmology-independent estimate of the expansion rate of the Universe. The main idea, introduced by Jimenez and Loeb (2002), is based on the fact that in a universe described by an FLRW metric the scale factor a(t) can be directly related to the redshift z as in Eq. (2). With this minimal assumption, it is therefore possible to directly express the Hubble parameter as a function of the differential time evolution of the universe dt in a given redshift interval dz, as provided by Eq. (15):
\[ H(z) = -\frac{1}{1+z}\frac{dz}{dt}. \tag{17} \]
Here dt/dz can be taken to be the look-back time differential change with redshift. Since redshift is a direct observable, the challenge is to find a reliable estimator for look-back time, or age, over a range of redshifts, i.e. to find cosmic chronometers (CC).
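In practice, dz/dt is replaced by a finite difference between two redshift bins. The sketch below is a minimal illustration of that step, assuming ages in Gyr and negligible spectroscopic redshift errors, so that only the differential-age error is propagated (\(\sigma _H/H=\sigma _{dt}/|dt|\)). The helper hubble_from_chronometers is hypothetical, and the check uses a toy Einstein–de Sitter universe rather than real data.

```python
MPC_KM = 3.0856776e19
GYR_S = 3.15576e16
INV_GYR_IN_KM_S_MPC = MPC_KM / GYR_S   # 1 Gyr^-1 expressed in km/s/Mpc (about 977.8)


def hubble_from_chronometers(z1, age1, z2, age2, sigma_dt=0.0):
    """Finite-difference version of Eq. (17) between two redshift bins.

    z1 < z2 are the bin redshifts and age1, age2 the chronometer ages in Gyr.
    Returns (z_eff, H, sigma_H) with H in km/s/Mpc. Only the differential-age
    error sigma_dt (Gyr) is propagated; redshift errors are neglected.
    """
    dz = z2 - z1
    dt = age2 - age1                       # negative: the Universe is younger at higher z
    z_eff = 0.5 * (z1 + z2)
    h = -dz / ((1.0 + z_eff) * dt) * INV_GYR_IN_KM_S_MPC
    sigma_h = h * abs(sigma_dt / dt)       # sigma_H / H = sigma_dt / |dt|
    return z_eff, h, sigma_h


# Toy check: Einstein-de Sitter ages, t(z) = (2/3) / H0 * (1+z)^-1.5, with H0 = 70.
T = INV_GYR_IN_KM_S_MPC / 70.0
t_eds = lambda z: (2.0 / 3.0) * T * (1.0 + z) ** -1.5
print(hubble_from_chronometers(0.45, t_eds(0.45), 0.55, t_eds(0.55), sigma_dt=0.05))
```

Only the age difference enters, so a common offset in the absolute ages of both bins (for instance, a shared formation delay) cancels out; this is why the method requires synchronized chronometers rather than absolute ages.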

The novelty and added value of this method with respect to other cosmological probes is that it can provide a direct estimate of the Hubble parameter without any cosmological assumption (beyond that of an FLRW metric, see also Koksbang 2021). From this point of view, the strength of this method is its (cosmological) model independence: no assumption is made about the functional form of the expansion history or about spatial geometry; it only assumes homogeneity and isotropy, and a metric theory of gravity. Constraints obtained with this method can therefore be used to test a wide variety of cosmological models.

There are three main ingredients at the basis of the CC method:

1. the definition of a sample of optimal CC tracers. As highlighted in Eq. (15), a sample of objects able to trace, at each redshift, the differential age evolution of the Universe is needed. It is fundamental that this sample of cosmic chronometers is homogeneous as a function of cosmic time (i.e., the chronometers started ticking in a synchronized way, independently of the redshift at which they are observed), and optimized to minimize contamination by outliers. The optimal selection process will be described in detail in Sect. 3.1.2.

2. the determination of the differential age dt. The CC method is typically applied to tracers identified through spectroscopic analysis, where the redshift determination is extremely accurate (\(\delta z/(1+z)\lesssim 0.001\), see e.g. Moresco et al. 2012a). As a consequence, as can be seen from Eq. (15), the only remaining unknown is the differential age dt. Different techniques have been explored to obtain robust and reliable differential age estimates for CC and to estimate their statistical and systematic uncertainties; they will be presented in Sect. 3.1.3.

3. the assessment of the systematic effects. As for any other cosmological probe, one of the fundamental issues to be assessed is the sensitivity of the method to effects that can systematically bias the measurement. The various systematic effects will be examined in Sect. 3.1.4.

3.1.2 Sample selection

Cosmic chronometers are objects that should allow us to trace robustly and precisely the differential age evolution of the Universe across a wide range of cosmic times. For this reason, the most useful astrophysical objects are galaxies: with current ground and space-based facilities, these objects can be observed with reasonably high signal-to-noise over a wide area and range of redshifts. Two different approaches have been explored.

Imagine selecting, in a given redshift range, a complete sample of galaxies, independently of their properties, and estimating their ages so as to homogeneously populate the age(z) plane. With enough statistics, it becomes possible to estimate the upper envelope (also called red envelope) of the age(z) distribution. Under the assumption that all galaxies formed at the same time independently of the observed redshift (which relies on the Copernican principle) and that the sample is complete, the envelope can be used to measure the differential age of the Universe. The advantage of this approach is that the sample selection is very straightforward; the drawback is that it is very demanding, since determining robustly the “edge” of the distribution and its associated error requires very large samples, in order not to be biased by random fluctuations in the age determinations of the population (e.g., see Jimenez and Loeb 2002; Jimenez et al. 2003; Simon et al. 2005; Moresco et al. 2012a, where over 11,000 massive and passive galaxies have been selected to apply this method).
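The envelope idea can be sketched on toy data: take the oldest object in each redshift bin as the envelope, then apply the finite-difference form of Eq. (17) to consecutive envelope points. In the sketch below, the ages are generated from an Einstein–de Sitter universe minus hypothetical formation delays, and the bin maximum is used as the simplest possible edge estimator; as stressed above, a real analysis needs very large samples and a more careful treatment of the edge and its error. All names are illustrative.

```python
MPC_KM = 3.0856776e19
GYR_S = 3.15576e16
INV_GYR_IN_KM_S_MPC = MPC_KM / GYR_S   # 1 Gyr^-1 in km/s/Mpc


def red_envelope(zs, ages, edges):
    """Oldest age in each redshift bin [edges[i], edges[i+1]); empty bins are skipped."""
    envelope = []
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = [age for z, age in zip(zs, ages) if lo <= z < hi]
        if in_bin:
            envelope.append((0.5 * (lo + hi), max(in_bin)))
    return envelope


def hubble_from_envelope(envelope):
    """Apply -dz / [(1+z) dt] to consecutive envelope points; returns (z, H in km/s/Mpc)."""
    result = []
    for (z1, t1), (z2, t2) in zip(envelope[:-1], envelope[1:]):
        z_mid = 0.5 * (z1 + z2)
        result.append((z_mid, -(z2 - z1) / ((1.0 + z_mid) * (t2 - t1)) * INV_GYR_IN_KM_S_MPC))
    return result


# Toy sample: Einstein-de Sitter age of the Universe (H0 = 70) minus hypothetical delays;
# the smallest delay (1 Gyr), shared by both bins, sets the envelope.
T = INV_GYR_IN_KM_S_MPC / 70.0
t_u = lambda z: (2.0 / 3.0) * T * (1.0 + z) ** -1.5
zs, ages = [], []
for zc in (0.35, 0.45):
    for delay in (1.0, 2.5, 1.7):
        zs.append(zc)
        ages.append(t_u(zc) - delay)

env = red_envelope(zs, ages, [0.3, 0.4, 0.5])
print(hubble_from_envelope(env))
```

The common 1 Gyr delay drops out of the difference, mirroring the assumption that all envelope galaxies formed at the same time.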

A more practical solution, therefore, is to (pre-)select a homogeneous population representing, at each redshift, the oldest objects in the Universe. The best cosmic chronometers identified so far are extremely massive (\(\log (M/M_{\odot })>\)10.5–11) and passively evolving galaxies (sometimes also loosely referred to as early-type galaxies). These objects represent the most extreme tails of the mass function (MF) and luminosity function (LF), from the local Universe (Baldry et al. 2004, 2006, 2008; Peng et al. 2010) up to high redshift (Pozzetti et al. 2010; Ilbert et al. 2013; Zucca et al. 2009; Davidzon et al. 2017). Many recent studies (e.g., Daddi et al. 2004; Fontana et al. 2006; Ilbert et al. 2006; Wiklind et al. 2008; Caputi et al. 2012; Castro-Rodríguez and López-Corredoira 2012; Muzzin et al. 2013; Stefanon et al. 2013; Nayyeri et al. 2014; Straatman et al. 2014; Wang et al. 2016; Mawatari et al. 2016; Deshmukh et al. 2018; Merlin et al. 2018, 2019; Girelli et al. 2019) have identified a population of massive quiescent galaxies at high redshift (\(z \gtrsim 2.5\)). A large body of literature supports the scenario in which these systems built up their mass very rapidly (\(\varDelta t<0.3\) Gyr, Thomas et al. 2010; McDermid et al. 2015; Citro et al. 2017; Carnall et al. 2018) and at high redshifts (\(z>2-3\), Daddi et al. 2005; Choi et al. 2014; McDermid et al. 2015; Pacifici et al. 2016; Carnall et al. 2018; Estrada-Carpenter et al. 2019; Carnall et al. 2019), quickly exhausting their gas reservoir and then evolving passively. For this reason, such objects constitute a very homogeneous population also in terms of metal content, having been found to have solar to slightly super-solar metallicity from \(z\sim 0\) up to \(z\sim 2\) (Gallazzi et al. 2005; Onodera et al. 2012; Gallazzi et al. 2014; Conroy et al. 2014; Onodera et al. 2015; McDermid et al. 2015; Citro et al. 2016; Comparat et al. 2017; Saracco et al. 2019; Morishita et al. 2019; Estrada-Carpenter et al. 2019; Kriek et al. 2019). The mere existence of a population of passive and massive galaxies already at \(z\sim 2\) further supports this scenario (Franx et al. 2003; Cimatti et al. 2004; Onodera et al. 2015; Kriek et al. 2019; Belli et al. 2019). A clear pattern has also been found connecting the mass, the star formation history (SFH), and the formation redshift of these galaxies; within this scenario, referred to as mass downsizing, more massive galaxies are found to have formed earlier, to have experienced a more intense, albeit short, episode of star formation, and to have a very homogeneous SFH (Heavens et al. 2004; Cimatti et al. 2004; Thomas et al. 2010). To summarize, these galaxies represent a population for which the age difference dt between two suitably separated (and suitably narrow) redshift bins is significantly larger than the time-scale of their internal evolution, making them optimal chronometers. For a more detailed review on massive and passive galaxies, we refer to Renzini (2006).

Many different prescriptions have been suggested in the literature to select passive galaxies, based on rest-frame colors (Williams et al. 2009; Ilbert et al. 2010, 2013; Arnouts et al. 2013), the shape of the spectral energy distribution (SED) (Zucca et al. 2009; Ilbert et al. 2010), star formation rate (SFR) or specific SFR (sSFR) (see, e.g., Ilbert et al. 2010, 2013; Pozzetti et al. 2010), the presence or absence of emission lines (see, e.g., Mignoli et al. 2009; Wang et al. 2018), and even morphology. The important question in this context is whether these different selection criteria are all equivalent for selecting CC. The short answer is no. Several papers (Franzetti et al. 2007; Moresco et al. 2013; Belli et al. 2017; Schreiber et al. 2018; Fang et al. 2018; Merlin et al. 2018; Leja et al. 2019; Díaz-García et al. 2019) have found that a single simple criterion is not able per se to select a pure sample of passively evolving galaxies and that, depending on the criterion, a considerable number of contaminants might remain. This is clearly shown in the left panel of Fig. 1, reproduced from Moresco et al. (2013). The reference and the figure highlight how passive galaxies selected with several different criteria still show evidence of emission lines, with a residual contamination by blue/star-forming objects that, depending on the criterion, can be as high as 30–50%. The same work, as also shown in the figure, found that a cut in stellar mass helps to increase the purity of the sample, and that, at fixed criterion, the contamination is significantly smaller at high masses (decreasing by a factor 2–3 from \(\log (M/M_{\odot })<10.25\) to \(\log (M/M_{\odot })>10.75\)).

Fig. 1 Impact of selection criteria on the purity of CC samples. Left panel: stacked spectra of differently selected samples of passive galaxies from the zCOSMOS survey in two mass bins (\(\log (M/M_{\odot })<10.25\) and \(\log (M/M_{\odot })>10.75\)), showing how, for many selection criteria, contamination by significant emission lines is still clearly evident, especially in the low-mass bin. Note that in the high-mass bin emission lines are not visible, indicating a much reduced contamination of the sample. Right panel: NUVrJ diagram for galaxies from the LEGA-C survey. The points are colored by their H/K ratio; the dashed line shows the division between passive and star-forming objects (Ilbert et al. 2013), the shaded region identifies the green valley (Davidzon et al. 2017), and the points highlighted in black are the selected CC. Images reproduced with permission from [left] Moresco et al. (2013), copyright by ESO; and [right] from Borghi et al. (2022b), copyright by the authors.

Both in Moresco et al. (2013) and in Borghi et al. (2022b) it has been demonstrated that, in order to maximize the purity of the sample and to select the best possible sample of CC, different criteria should be combined (photometric, spectroscopic, stellar mass/velocity dispersion cut, potentially morphological). In Moresco et al. (2018), a detailed selection workflow has been proposed, which can be summarized in the following three criteria:

(i) a photometric criterion to select the reddest objects, based on the available photometric data. One of the best is the criterion based on the NUVrJ diagram (Ilbert et al. 2013); alternatives include the UVJ diagram (Williams et al. 2009), the NUVrK diagram (Arnouts et al. 2013), or selections based on full SED modeling (e.g., see Ilbert et al. 2009; Zucca et al. 2009). It is important to underline, however, that information on the UV flux has proven to be very important to discard contamination by a young (0.1–1 Gyr) population, and that the NUVrJ diagram has been demonstrated to be the most robust in distinguishing star-forming and passive populations.

(ii) a spectroscopic criterion, to check that the spectrum shows no residual emission lines that might trace ongoing star formation. Depending on the redshift and on the wavelength coverage of the data, the most important emission lines to check are [OII]\(\lambda \)3727, H\(\beta \) (\(\lambda =4861\)Å), [OIII]\(\lambda \)5007, and H\(\alpha \) (\(\lambda =6563\)Å), and different kinds of cuts can be adopted, based on the equivalent width (EW) of the line (e.g., EW<5 Å; Mignoli et al. 2009; Moresco et al. 2012a; Borghi et al. 2022b), on its signal-to-noise ratio (S/N; e.g., Moresco et al. 2016b; Wang et al. 2018), or on a combination of these. In general, the selected spectra must not show any sign of emission lines (as an example, see Fig. 3).

(iii) a cut in stellar mass or, equivalently, in stellar velocity dispersion \(\sigma _{\star }\). As discussed above, the more massive a system is, the older, more coeval, and less contaminated it is. Therefore, a cut around \(\log (M/M_{\odot })>\)10.6–11 is typically adopted.

Selection workflow for CC (adapted from Moresco et al. 2018). The rounded boxes show the selected samples at the different steps, from the parent sample to the final CC sample, while the blue diamond boxes represent the incremental selection criteria adopted, with a green (red) arrow indicating when the criterion is met (or not) and the galaxy included (or excluded) from the sample. Each criterion is fundamental to maximize the purity of the sample, removing star-forming contaminants at different typical young ages (see Moresco et al. 2018)

Any less stringent selection criterion will yield a sample with a residual degree of contamination by star-forming objects, which we address in Sect. 3.1.4. Interestingly, some alternative estimators have recently been suggested to help track the purity of the sample. In Moresco et al. (2018), the ratio between the CaII H (\(\lambda =3969\)Å) and K (\(\lambda =3934\)Å) lines was introduced as a novel way to trace the degree of contamination by a star-forming component. The reason is that, while for a passive population the H/K ratio is typically larger than one (the K line being deeper than the H line), the presence of a young component affects this quantity, since such a component is characterized by non-negligible Balmer absorption lines, and in particular by H\(\epsilon \) (\(\lambda =3970\)Å), which blends with the CaII H line and inverts the ratio. This new diagnostic has been demonstrated to be extremely powerful, since it correlates extremely well with almost all other indicators of ongoing star formation (NUV and optical colors, SFR, emission lines; see Borghi et al. 2022b), as shown in the right panel of Fig. 1, and it can be a useful independent indicator of residual ongoing star formation. The workflow for the selection criteria is summarized in Fig. 2.
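To make the three-step workflow concrete, the sketch below applies criteria (i)–(iii), plus an optional CaII H/K check, to a single galaxy. It is a minimal illustration, not the pipeline of Moresco et al. (2018): the `Galaxy` container and all function names are hypothetical; the NUVrJ quiescent boundary (\(M_{NUV}-M_r>3(M_r-M_J)+1\) and \(M_{NUV}-M_r>3.1\)) is our reading of Ilbert et al. (2013) and should be checked against that paper; the 5 Å EW threshold and the cut \(\log (M/M_{\odot })>10.75\) are example values within the ranges quoted above.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Galaxy:
    """Hypothetical container for the quantities used in the CC selection."""
    nuv_r: float      # rest-frame M_NUV - M_r colour (mag)
    r_j: float        # rest-frame M_r - M_J colour (mag)
    log_mass: float   # log10(M / M_sun)
    emission_ew: Dict[str, float] = field(default_factory=dict)  # rest-frame EW (Å), >0 = emission
    hk_ratio: Optional[float] = None  # CaII H/K ratio, if measured


def passes_nuvrj(g: Galaxy) -> bool:
    """(i) Photometric cut: quiescent region of the NUVrJ diagram (assumed boundary)."""
    return g.nuv_r > 3.0 * g.r_j + 1.0 and g.nuv_r > 3.1


def passes_emission(g: Galaxy, ew_max: float = 5.0) -> bool:
    """(ii) Spectroscopic cut: no line ([OII], Hbeta, [OIII], Halpha, ...) above ew_max."""
    return all(ew < ew_max for ew in g.emission_ew.values())


def passes_mass(g: Galaxy, log_mass_min: float = 10.75) -> bool:
    """(iii) Stellar-mass cut."""
    return g.log_mass > log_mass_min


def is_cosmic_chronometer(g: Galaxy, require_hk: bool = False) -> bool:
    """Apply the criteria incrementally; a galaxy failing any step is excluded."""
    if not (passes_nuvrj(g) and passes_emission(g) and passes_mass(g)):
        return False
    if require_hk:
        # Optional purity check: a young component (H-epsilon) pushes H/K below 1.
        return g.hk_ratio is not None and g.hk_ratio > 1.0
    return True


passive = Galaxy(nuv_r=5.2, r_j=1.0, log_mass=11.1,
                 emission_ew={"OII": 1.2, "Halpha": 0.4}, hk_ratio=1.15)
star_forming = Galaxy(nuv_r=2.1, r_j=0.6, log_mass=11.0,
                      emission_ew={"OII": 18.0})
print(is_cosmic_chronometer(passive, require_hk=True))  # True
print(is_cosmic_chronometer(star_forming))              # False
```

Splitting each criterion into its own function mirrors the incremental structure of the workflow, so that the fraction of objects rejected at each step can be tracked separately.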

3.1.3 Measurements

Measuring the age of a stellar population presents several challenges. One of the main issues is the existence of degeneracies between the physical parameters, so that the spectral energy distribution (SED) of a galaxy can be approximately reproduced with quite different combinations of age and other parameters. The best-known one is the age-metallicity degeneracy (Worthey 1994; Ferreras et al. 1999), which arises because both an older age and a higher metallicity redden galaxy spectra; in particular, synthetic stellar population models show that the optical colors of early-type galaxies remain almost unchanged when their ages and metallicities are varied while keeping the ratio \(\varDelta {\mathrm{age}}/\varDelta [Z/H]\sim 3/2\). The degeneracies between the age of a galaxy and its star formation history (SFH) (Gavazzi et al. 2002) or its dust content should also be mentioned (although the latter is typically negligible for accurately selected passive galaxies, owing to their low dust content; see Pozzetti and Mannucci 2000). Therefore, while age estimates for galaxies obtained from multi-band SED fitting are quite common in the literature, they are not suitable for the CC method.

With the advent of high-resolution spectroscopy over a wide wavelength range and for large galaxy samples, together with more accurate stellar models and fitting methods, it has become possible to lift these degeneracies and to estimate the ages of the stellar populations of galaxies much more accurately and precisely. Moreover, the main strength of the CC method is that it is a differential approach, in which the quantity to be measured is the differential age dt, and not the absolute age t. The advantage is that any systematic effect that a given method might introduce in the estimate of t is significantly reduced in the measurement of dt; in particular, a constant systematic offset in the absolute age estimate cancels out in dt. This is also confirmed by independent analyses (e.g., see Marín-Franch et al. 2009), demonstrating that relative ages can be determined much more accurately than absolute ones.

Different methods have been proposed in the literature to obtain a robust estimate of dt from galaxy spectra. These can be roughly classified into two “philosophies”: using the full spectral information versus selecting only specific features that are sensitive to age and well localized in wavelength. Using the full spectral information extracts the maximum amount of information (minimizing statistical errors), but is more sensitive to systematics, i.e., to physical processes other than age that leave their imprint on the spectrum, and makes the age estimate more dependent on the adopted evolutionary stellar population synthesis models. Using localized features attempts to mitigate these issues, at the expense of possibly larger statistical errors. Since systematic errors should be kept well below statistical ones, the preferred methodology may change depending on the statistical power of the available datasets. With very large, high-statistics datasets becoming available, the focus has shifted from full spectral fitting to using only specific features.

The main methods to measure dt from galaxy spectra can be summarized as follows.

3.1.3.1 Full-spectrum fitting

The most straightforward approach is to take advantage of the full spectroscopic information available by fitting the entire spectrum with theoretical models. Different components, obtained from stellar population synthesis models with a range of physical properties (age, metal content, mass), are typically combined and properly weighted to reproduce the observed spectrum in a given wavelength window (usually within the optical range). The strength of this approach is therefore its ability to reconstruct, together with the age and metallicity of the population, also its star formation history, either parametrically or non-parametrically. Several codes have been developed and are publicly available to perform full-spectrum fitting, differing slightly in the models implemented, in how the SFH is reconstructed, and in the statistical methods. The first such method, which started the field, is the MOPED algorithm (Heavens et al. 2000, 2004); among the most widely used since then are STARLIGHT (Cid Fernandes et al. 2005), VESPA (Tojeiro et al. 2007), ULySS (Koleva et al. 2009), BEAGLE (Chevallard and Charlot 2016), FIREFLY (Wilkinson et al. 2017), pPXF (Cappellari 2017), and BAGPIPES (Carnall et al. 2018). In Fig. 3 we show as an example the typical spectrum of a passively evolving population, obtained by stacking roughly 100,000 spectra extracted from the Sloan Digital Sky Survey Data Release 12 (SDSS-DR12). The figure also highlights the locations of relevant spectral features.
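The core idea of full-spectrum fitting can be illustrated with a deliberately minimal sketch: the observed spectrum is modeled as a weighted sum of template spectra, with the weights found by error-weighted linear least squares. This is not the algorithm of any of the codes listed above, which additionally enforce non-negative weights and model dust, kinematics, and the SFH in far more detail; the two template shapes below are hypothetical toy curves, not SPS models.

```python
import numpy as np


def fit_templates(templates, flux, err):
    """Error-weighted linear least-squares fit of `flux` as a sum of templates.

    templates: array (n_templates, n_pix); flux, err: arrays (n_pix,).
    Returns the template weights and the chi-square of the best fit.
    """
    design = (templates / err).T      # (n_pix, n_templates), each pixel scaled by 1/err
    target = flux / err
    weights, *_ = np.linalg.lstsq(design, target, rcond=None)
    model = weights @ templates
    chi2 = float(np.sum(((flux - model) / err) ** 2))
    return weights, chi2


# Toy rest-frame wavelength grid and two hypothetical template shapes.
wave = np.linspace(3600.0, 5600.0, 500)
young = 1.2 - 2e-4 * (wave - 3600.0)      # blue, smoothly declining continuum
old = np.where(wave < 4000.0, 0.6, 1.0)   # red continuum with a 4000 Å break
templates = np.vstack([young, old])

observed = 0.1 * young + 0.9 * old        # noiseless mock "observation"
errors = np.full_like(wave, 0.01)
weights, chi2 = fit_templates(templates, observed, errors)
print(weights)  # close to [0.1, 0.9]
```

With noiseless data and linearly independent templates the input weights are recovered exactly; with real data, the degeneracies discussed above show up as strongly correlated weights.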

3.1.3.2 Absorption features (Lick indices) analysis

Another approach is to analyze, instead of the full spectrum, only some specific regions characterized by well-understood absorption features, also known as Lick indices. These indices, originally introduced by Worthey (1994) and Worthey and Ottaviani (1997), have strengths that can be directly linked to variations in the properties of the stellar population; some indices are more useful to trace the age of the population (typically the Balmer lines), others the stellar metallicity (typically Fe lines), and others the alpha-enhancement (e.g., Mg lines). Also in this case, public codes exist to measure Lick indices (see, e.g., indexf, Cardiel 2010; and pyLick, Borghi et al. 2022b). The specific dependence of each index (shown in Fig. 3) on physical properties was first assessed in Worthey (1994). A significant step forward in their use to quantitatively determine the age of a stellar population was made by Thomas et al. (2011), who constructed stellar population models specifically suited for modeling Lick indices, including a variable element abundance ratio, that can be compared with the data (e.g., with a Bayesian approach). This step is fundamental, since it overcomes a limitation of full-spectrum fitting by allowing the alpha-enhancement of a stellar population to be determined together with its age and metallicity. It is worth noting that other models with variable element ratios that could be used for this purpose have more recently been proposed by Conroy and van Dokkum (2012) and Vazdekis et al. (2015).
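As an illustration of how an absorption index is measured, the sketch below computes an equivalent width in the usual Lick-style way: a pseudo-continuum is drawn as a straight line between the mean fluxes of a blue and a red sideband (placed at the sideband midpoints), and \(1-F/F_{\rm cont}\) is integrated over the central band. The band limits in the example are hypothetical placeholders, not official Lick definitions, and real measurements (e.g., with indexf or pyLick) also require matching the spectral resolution to that of the index system.

```python
import numpy as np


def _band_mask(wave, band):
    lo, hi = band
    return (wave >= lo) & (wave <= hi)


def index_ew(wave, flux, blue, central, red):
    """Equivalent width (Å) of an absorption index on a rest-frame spectrum.

    blue, central, red: (lambda_min, lambda_max) in Å for the two sidebands
    and the central feature band.
    """
    # Mean flux and midpoint wavelength of each sideband define the pseudo-continuum.
    f_blue = flux[_band_mask(wave, blue)].mean()
    f_red = flux[_band_mask(wave, red)].mean()
    l_blue = 0.5 * (blue[0] + blue[1])
    l_red = 0.5 * (red[0] + red[1])

    m = _band_mask(wave, central)
    lam = wave[m]
    cont = f_blue + (f_red - f_blue) * (lam - l_blue) / (l_red - l_blue)
    y = 1.0 - flux[m] / cont
    # Trapezoidal integration over the central band.
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(lam)))


# Mock spectrum: flat continuum with a Gaussian absorption line at 4100 Å.
wave = np.arange(3900.0, 4200.0, 0.1)
depth, sigma = 0.5, 2.0
flux = 1.0 - depth * np.exp(-0.5 * ((wave - 4100.0) / sigma) ** 2)
ew = index_ew(wave, flux, blue=(3950.0, 3980.0), central=(4080.0, 4120.0),
              red=(4150.0, 4180.0))
print(round(ew, 3))  # ~2.507, i.e. depth * sigma * sqrt(2*pi)
```

For a Gaussian line fully contained in the central band, the result reduces to the analytic area of the line, which is a convenient sanity check for any index-measurement code.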

Stacked spectrum of \(\sim \)100,000 massive and passive CC selected from SDSS DR12. The spectrum is clearly characterized by a red continuum, several absorption lines (identified by the black boxes), and the absence of significant emission lines (whose positions are highlighted by the red boxes)

3.1.3.3 Calibration of specific spectroscopic features

Finally, one of the most commonly adopted approaches in CC analyses is to focus on a single spectroscopic feature found to correlate tightly with the age of the population. This approach was introduced by Moresco et al. (2012a), who proposed using the break in the spectrum at 4000 Å rest-frame (D4000, one of the main characteristics of the spectrum of a passive galaxy, as also shown in Fig. 3). The D4000 has been demonstrated to correlate extremely well with the stellar age (at fixed metallicity). Moreover, it has been shown that the dependence of D4000 on the two quantities (age and metallicity Z) can be described by a simple (piece-wise) linear relation in the range of interest for the analysis:

\[ D4000=A(Z,SFH)\, t+B \tag{17} \]

where B is a constant and A(Z, SFH) is a parameter that, over a broad age range, depends only on the metallicity Z and on the SFH, and can be calibrated on stellar population synthesis (SPS) models. By differentiating Eq. (17), it is possible to derive the relation between the differential age evolution of the population, dt, and the differential evolution of the feature, dD4000, in the form \(dD4000=A(Z, SFH)\times \mathrm{dt}\). Substituting this into Eq. (15), \(H(z)=-\frac{1}{1+z}\frac{dz}{dt}\), allows us to rewrite it as:

\[ H(z)=-\frac{A(Z,SFH)}{1+z}\,\frac{dz}{dD4000} \tag{18} \]

with the advantage of having decoupled the statistical effects (all included in the observationally measurable term dz/dD4000) from the systematic ones (captured by the coefficient A(Z, SFH)). We note here that different definitions of D4000 have been proposed in the literature. The D4000 is the ratio between the average flux \(F(\nu )\) in two windows adjacent to 4000 Å rest-frame, with one definition adopting wider bands (\(D4000_w\), [3750–3950] Å and [4050–4250] Å, Bruzual A. 1983) and one narrower ones (\(D4000_n\), [3850–3950] Å and [4000–4100] Å, Balogh et al. 1999); in the following, we consider \(D4000_n\), since it has been shown to have a significantly smaller dependence on potential reddening effects (Balogh et al. 1999).
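Since both definitions are simple flux ratios, they are straightforward to compute. The sketch below assumes a rest-frame spectrum given as \(F_\lambda \) on a uniformly sampled wavelength grid (so that a plain mean over pixels approximates the band average), and converts it to \(F_\nu \propto \lambda ^2F_\lambda \) before averaging, since the definition uses \(F(\nu )\); function and variable names are ours, and the mock spectrum is a toy step function rather than a real break.

```python
import numpy as np

C_ANGSTROM_PER_S = 2.99792458e18  # speed of light in Å/s

# Band definitions (Å, rest frame) quoted in the text.
NARROW = ((3850.0, 3950.0), (4000.0, 4100.0))  # D4000_n, Balogh et al. (1999)
WIDE = ((3750.0, 3950.0), (4050.0, 4250.0))    # D4000_w, Bruzual A. (1983)


def d4000(wave, flux_lambda, bands=NARROW):
    """4000 Å break: mean F_nu in the red window divided by mean F_nu in the blue window."""
    f_nu = flux_lambda * wave**2 / C_ANGSTROM_PER_S   # F_nu ∝ λ^2 F_λ
    (b_lo, b_hi), (r_lo, r_hi) = bands
    blue = (wave >= b_lo) & (wave <= b_hi)
    red = (wave >= r_lo) & (wave <= r_hi)
    return f_nu[red].mean() / f_nu[blue].mean()


# Mock spectrum whose F_nu jumps from 1.0 to 1.8 at 3975 Å.
wave = np.linspace(3700.0, 4300.0, 1201)
f_nu_true = np.where(wave < 3975.0, 1.0, 1.8)
flux_lambda = f_nu_true * C_ANGSTROM_PER_S / wave**2
print(round(d4000(wave, flux_lambda), 3))              # 1.8 (narrow)
print(round(d4000(wave, flux_lambda, bands=WIDE), 3))  # 1.8 (wide)
```

Averaging \(F_\nu \) rather than \(F_\lambda \) matters: applying the same windows to \(F_\lambda \) would change the ratio, because \(F_\nu /F_\lambda \propto \lambda ^2\) differs between the blue and red windows.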

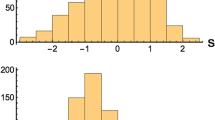

Application of the CC method. The left panel shows an example of averaged \(D4000-z\) relations (with uncertainties smaller than the symbol size, so that differences can be robustly computed) for a CC sample extracted from SDSS-DR12, in different velocity dispersion bins as shown in the label. Each point has been estimated from a stacked spectrum of \(\sim 1000\) objects, and its uncertainty is the error on D4000 measured from the stacked spectrum. A downsizing pattern is clearly evident, with more massive galaxies (with higher \(\sigma \)) also having larger D4000 values, corresponding to older ages; the expected decrease of D4000 with redshift is also visible. The brackets show, for an illustrative pair of points, the calculation of dD4000 and dz. The right panel shows theoretical D4000-age relations obtained with the SPS models of Maraston and Strömbäck (2011), used to calibrate Eq. (18). Lines from top to bottom show different stellar metallicities: twice solar, solar, and half solar. At fixed metallicity, different lines correspond to different SFHs, namely \(\tau =[0.05,0.1,0.2,0.3]\) Gyr (from left to right). The colored lines show, for one SFH at each metallicity, the best fit obtained with a piece-wise linear relation. The arrows indicate how different parameters affect the D4000-age relations; it is important to keep in mind that the calibration parameter A(Z, SFH) is the slope of the relation

To apply the improved CC method as described by Eq. (18), it is therefore necessary to measure the following quantities:

1. the differential \(\varDelta D4000\) of a sample of CC over a redshift interval \(\varDelta z\). Since this process involves estimating a derivative, to increase its accuracy and minimize the noise due to statistical fluctuations of the signal, it should be done either by averaging the D4000 of galaxies in redshift slices and then estimating dD4000, or by stacking multiple spectra of CC to increase the spectral S/N and measuring the D4000 on the stacked spectra, as shown in Fig. 4. Equation (18) disentangles observational errors from the systematic errors associated with the interpretation (such as dependence on the SSP model, degeneracies with metallicity, etc.). The D4000 is a purely observational quantity, and thus, barring observational systematics such as wavelength calibration or instrument response, its measurement is affected only by statistical uncertainty, which can be reduced by increasing the number of objects with spectra and/or the S/N per spectrum.

2. the metallicity Z and SFH of the selected sample. As a result of the strict selection criteria (see Sect. 3.1.2), the selected galaxies are characterized by a SFH of very short duration: \(\tau <0.5\) Gyr (in many cases \(<0.2\) Gyr) when parameterized with an exponentially declining SFH, with \(\tau \) the formation timescale (in Gyr). Nevertheless, the SFH should be taken into account and correctly propagated in the measurement since, despite the selection, describing these systems as a single stellar population (SSP) would be over-simplistic. The methods to estimate the SFH are mostly based on SED fitting, on full-spectrum fitting, or on a combination of the two (see, e.g., Tojeiro et al. 2007; Chevallard and Charlot 2016; Citro et al. 2016; Carnall et al. 2018, 2019). Although by construction the CC population is very homogeneous also in metal content, and has been observed to have a solar to slightly over-solar metallicity over a very wide range of cosmic times (see Sect. 3.1.2), the stellar metallicity Z needs to be determined too. Also in this case, different approaches are viable: adopting a data-driven prior (Moresco et al. 2012a), estimating it with full-spectrum fitting considering different codes and models (Moresco et al. 2016b), or measuring it from a Lick index analysis (Gallazzi et al. 2005; Borghi et al. 2022b).

3. the calibration parameter A(Z, SFH), which connects variations in D4000 to variations in the age of the stellar population, for different SPS models. This involves generating several D4000-age relations exploring different metallicities and SFHs, and adopting several different SPS models.

As already discussed, these relations can be well approximated as linear (or, better, piece-wise linear, as shown in Fig. 4), with slopes that give the parameter A(Z, SFH) in the regime of interest. At fixed metallicity and in a given D4000 regime, it is then possible to estimate the spread in the slopes obtained by varying the SFH within the observed ranges, and to use this as the uncertainty associated with the calibration parameter, i.e. \(A(Z,SFH)=A(Z)\pm \sigma _A(SFH)\). These measurements, available from models at given metallicities (e.g. \(Z/Z_{\odot }=0.5,1,2\) for the example in Fig. 4), can then be interpolated to obtain a value, with its error, for any given metallicity. The calibration parameter for each point is therefore estimated from the measured (or assumed) metallicity, together with its error, yielding a global \(A\pm \sigma _A\) that accounts for the uncertainty on both SFH and metallicity. We will explore the impact of the SPS model choice on the systematic error budget in Sect. 3.1.4.
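The calibration step just described can be sketched as follows. For each metallicity available in the models, the slopes of the D4000-age relations obtained for different SFHs give \(A(Z)\) (their mean) and \(\sigma _A(SFH)\) (their scatter, here the sample standard deviation); both are then interpolated linearly at the measured metallicity. The metallicity error is propagated by evaluating \(A\) at \(Z\pm \sigma _Z\) and combined in quadrature with \(\sigma _A(SFH)\). The slope values are invented placeholders, and the choices of standard deviation, linear interpolation, and quadrature sum are our assumptions for illustration.

```python
import numpy as np


def calibrate_A(z_grid, slopes, z_meas, z_err):
    """Return (A, sigma_A) at metallicity z_meas (in units of Z_sun).

    z_grid : increasing model metallicities, e.g. [0.5, 1, 2] Z_sun.
    slopes : array (n_Z, n_SFH) of D4000-age slopes (Gyr^-1), one per SFH.
    Note: np.interp clamps to the edge values outside z_grid.
    """
    z_grid = np.asarray(z_grid, dtype=float)
    slopes = np.asarray(slopes, dtype=float)
    a_of_z = slopes.mean(axis=1)               # A(Z): mean slope over SFHs
    sig_sfh_of_z = slopes.std(axis=1, ddof=1)  # sigma_A(SFH): spread over SFHs

    a = np.interp(z_meas, z_grid, a_of_z)
    sig_sfh = np.interp(z_meas, z_grid, sig_sfh_of_z)
    # Metallicity uncertainty: half the change of A between Z - sigma_Z and Z + sigma_Z.
    a_lo = np.interp(z_meas - z_err, z_grid, a_of_z)
    a_hi = np.interp(z_meas + z_err, z_grid, a_of_z)
    sig_z = 0.5 * abs(a_hi - a_lo)
    return float(a), float(np.hypot(sig_sfh, sig_z))


# Hypothetical slopes for Z/Z_sun = 0.5, 1, 2 and three SFH timescales.
z_grid = [0.5, 1.0, 2.0]
slopes = [[0.10, 0.12, 0.14],
          [0.08, 0.10, 0.12],
          [0.06, 0.08, 0.10]]
A, sigma_A = calibrate_A(z_grid, slopes, z_meas=1.0, z_err=0.5)
print(round(A, 3), round(sigma_A, 3))  # 0.1 0.025
```

In a real analysis this would be repeated for each SPS model and D4000 regime, since the slope differs in each segment of the piece-wise linear relation.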

All these quantities will be combined in Eq. (18) to obtain an estimate of the Hubble parameter H(z) and of its uncertainty.
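Putting the pieces together, the following sketch evaluates Eq. (18) on D4000 values averaged in redshift slices (the first option in step 1 above). It assumes that the mean redshift of each slice is known exactly, estimates dz/dD4000 by finite differences between adjacent slices, combines the relative errors on dD4000 and on A in quadrature, and converts from Gyr\(^{-1}\) to km s\(^{-1}\) Mpc\(^{-1}\) using 1 Gyr\(^{-1}\simeq 977.8\) km s\(^{-1}\) Mpc\(^{-1}\). Function names and input numbers are illustrative only, not real measurements.

```python
import numpy as np

GYR_INV_IN_KM_S_MPC = 977.8  # 1 Gyr^-1 expressed in km/s/Mpc


def bin_d4000(z, d4000, edges):
    """Mean redshift, mean D4000 and error on the mean in each redshift slice."""
    z = np.asarray(z, dtype=float)
    d4000 = np.asarray(d4000, dtype=float)
    idx = np.digitize(z, edges) - 1
    z_c, d_m, d_e = [], [], []
    for k in range(len(edges) - 1):
        sel = idx == k
        n = int(sel.sum())
        if n < 2:  # need at least two galaxies for an error on the mean
            continue
        z_c.append(z[sel].mean())
        d_m.append(d4000[sel].mean())
        d_e.append(d4000[sel].std(ddof=1) / np.sqrt(n))
    return np.array(z_c), np.array(d_m), np.array(d_e)


def hubble_cc(z_c, d_m, d_e, A, sigma_A):
    """Eq. (18), H(z) = -A/(1+z) dz/dD4000, between adjacent slices (km/s/Mpc)."""
    dz = np.diff(z_c)
    dD = np.diff(d_m)
    sigma_dD = np.hypot(d_e[1:], d_e[:-1])
    z_mid = 0.5 * (z_c[1:] + z_c[:-1])
    H = -A / (1.0 + z_mid) * dz / dD * GYR_INV_IN_KM_S_MPC
    rel_err = np.hypot(sigma_dD / np.abs(dD), sigma_A / A)
    return z_mid, H, np.abs(H) * rel_err


# Illustrative input: four galaxies in two slices, A in D4000 units per Gyr.
z = [0.10, 0.12, 0.30, 0.32]
d = [1.80, 1.82, 1.70, 1.72]
z_c, d_m, d_e = bin_d4000(z, d, edges=[0.0, 0.2, 0.4])
z_mid, H, H_err = hubble_cc(z_c, d_m, d_e, A=0.05, sigma_A=0.005)
print(round(float(H[0]), 1), round(float(H_err[0]), 1))  # 80.8 14.0
```

Because D4000 decreases with redshift, dz/dD4000 is negative and the minus sign in Eq. (18) yields a positive H(z); note that the statistical part (dD4000) and the calibration part (A) enter the error budget separately.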

A final, yet important, point to keep in mind is that, in order to be cosmology-independent, the CC approach must rely on age estimates that do not assume any cosmological prior. This is crucial, since in many (if not most) analyses a cosmologically motivated upper prior on age is adopted to break or minimize the previously discussed degeneracies. For the CC method to be used as a test of cosmology, it is of paramount importance to obtain a robust age estimate without introducing any (prior) dependence on a cosmological model, in order to avoid circularity, i.e., simply recovering the cosmological model assumed as a prior.

3.1.4 Systematic effects

In this section, we give an overview of the possible systematic effects that can affect the CC method, discussing approaches to minimize them and to propagate them to the total covariance matrix. We begin by discussing effects and assumptions that have a direct impact on the uncertainty on H(z), and conclude by presenting additional possible issues that might impact the measurement but turn out to be negligible.

The main systematic effects can be divided into four components, and are summarized below. Each one of those will provide a contribution in the total systematic covariance matrix \({\mathrm{Cov}}_{ij}^\mathrm{sys}\).

3.1.4.1 Error in the CC metallicity estimate

\({\mathrm{Cov}}_{ij}^{\mathrm{met}}\). The metallicity estimate enters Eq. (18) through the calibration parameter A(Z, SFH). An error in its value therefore directly affects the H(z) measurement and its associated error budget. In Moresco et al. (2020), this issue was addressed by performing a Monte Carlo simulation of SSP-generated galaxy spectra considering a variety of SPS models, with metallicities spanning different ranges (±10%, 5%, 1%), and estimating the Hubble parameter. In this way, the error induced on H(z) was found to scale almost linearly with the uncertainty on the stellar metallicity, which is corroborated observationally by the analysis in Moresco et al. (2016b), where a 10% error on the metallicity was found to correspond to a 10% error on the Hubble parameter. Hence, the uncertainty on stellar metallicity (if known and quantified correctly) can be quantitatively propagated to an error on H(z) following the procedure outlined in Sect. 3.1.3. This contribution does not introduce off-diagonal terms in the covariance matrix, because it depends on the stellar metallicity of each spectrum (whether of an individual object or a co-add) and does not correlate different spectra.

3.1.4.2 Error in the CC SFH

\({\mathrm{Cov}}_{ij}^{\mathrm{SFH}}\). Even if CC have SFHs characterized by very short timescales, assuming that the entire SFH is concentrated in a single burst (SSP) introduces a systematic error, which must be accounted for as described in Sect. 3.1.3. This is typically a systematic contribution of the order of 2–3%; as an example, in Moresco et al. (2012a), where the estimated uncertainty on the SFH timescale was \(0<\tau <0.3\) Gyr, the contribution to the final error on H(z) was \(\sim \)2.5%. This contribution to the covariance matrix is also taken to be purely diagonal.

3.1.4.3 Assumption of SPS model

\({\mathrm{Cov}}_{ij}^\mathrm{model}\). The major source of systematic uncertainty in the CC method, independently of the process adopted to estimate dt, is the assumption of the SPS model. This is also, by definition, a term that introduces non-diagonal elements in the total covariance matrix, as the errors are highly correlated across different spectra. Its impact on the H(z) error was assessed in Moresco et al. (2020). In that work, a wide combination of models was studied, including a variety of SPS models (BC03 and BC16 Bruzual and Charlot 2003, M11 Maraston and Strömbäck 2011, FSPS Conroy et al. 2009; Conroy and Gunn 2010, and E-MILES Vazdekis et al. 2016), initial mass functions (IMF, including Salpeter 1955, Kroupa 2001 and Chabrier 2003), and stellar libraries (STELIB Le Borgne et al. 2003 and MILES Sánchez-Blázquez et al. 2006). These models were then used in a Monte Carlo (MC) approach, simulating a measurement with one model and measuring the Hubble parameter with all the others, thereby estimating the contribution to the total covariance matrix due to the assumption of a specific SPS model, IMF, and stellar library. The error introduced on H(z) was found to be, on average, smaller than 0.4% for the IMF contribution, and of the order of 4.5% for the SPS model contribution.

The component due to the stellar library is slightly higher; however, this estimate is overly conservative, as the effect is driven by the inclusion of a stellar library that has since been superseded. More importantly, this uncertainty has been found to be redshift dependent, and an explicit estimate of each component is provided as a function of z.

3.1.4.4 Rejuvenation effect \({\mathrm{Cov}}_{ij}^{\mathrm{young}}\)

Another possible bias to take into account is a residual contamination of the selected CC by a young component. This systematic effect can be divided into two cases. On the one hand, part of the selected CC population may consist of star-forming or intermediate systems; this should be avoided, or maximally mitigated, by the accurate and combined selection process described in Sect. 3.1.2. On the other hand, despite the accurate selection, the population of a single CC, even if dominated by an old component, may still have a minor contribution from an underlying young stellar component. This effect can bias the H(z) determination because the bluer color of younger stars changes the overall shape of the spectrum, leading to younger measured ages and hence a biased dt. This issue has been studied in detail in Moresco et al. (2018), where several indicators have been explored and proposed to trace the possible presence of a residual young sub-population, from the UV flux (Kennicutt 1998) to the presence of emission lines (see, e.g., Magris C. et al. 2003) or of strong higher-order Balmer absorption lines (like H\(\delta \), Le Borgne et al. 2006). In particular, by studying theoretical SPS models, the previously discussed CaII H/K indicator was proposed to quantitatively trace the percentage level of contamination, taking advantage of the fact that the H\(\epsilon \) line, characteristic of a young stellar component, directly affects the CaII H line, and therefore the ratio. It was then assessed, for a given degree of contamination, by how much the D4000 would decrease and therefore how much the estimate of H(z) is affected, providing a direct recipe to convert the measured CaII H/K (or an upper limit, in case of non-detection) into an additional error on the Hubble parameter.
A contamination by a young star-forming component of 10% (1%) of the total light was found to propagate to an H(z) error of 5% (0.5%); in particular, for the CC samples analyzed so far (Moresco et al. 2012a; Moresco 2015; Moresco et al. 2016b; Borghi et al. 2022b), this contamination has been found to be below the detection threshold, with a possible additional error on \(H(z)<\)0.5%. Given the lack of detection and the stringent upper limit on a possible residual contamination, this contribution to the covariance is also taken to be diagonal.

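Following Moresco et al. (2020), the total covariance matrix for CC is defined as the combination of the statistical and systematic parts:

\[ \mathrm{Cov}_{ij}^{\mathrm{tot}}=\mathrm{Cov}_{ij}^{\mathrm{stat}}+\mathrm{Cov}_{ij}^{\mathrm{syst}} \]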

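where \(\mathrm{Cov}_{ij}^{\mathrm{syst}}\), for simplicity and transparency, is decomposed into the contributions discussed above:

\[ \mathrm{Cov}_{ij}^{\mathrm{syst}}=\mathrm{Cov}_{ij}^{\mathrm{met}}+\mathrm{Cov}_{ij}^{\mathrm{SFH}}+\mathrm{Cov}_{ij}^{\mathrm{young}}+\mathrm{Cov}_{ij}^{\mathrm{model}} \]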

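where the last component can be further decomposed into:

\[ \mathrm{Cov}_{ij}^{\mathrm{model}}=\mathrm{Cov}_{ij}^{\mathrm{SPS}}+\mathrm{Cov}_{ij}^{\mathrm{IMF}}+\mathrm{Cov}_{ij}^{\mathrm{st.lib.}} \]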

As discussed above, \(\mathrm{Cov}_{ij}^{\mathrm{met}}\), \(\mathrm{Cov}_{ij}^\mathrm{SFH}\) and \(\mathrm{Cov}_{ij}^{\mathrm{young}}\) are purely diagonal terms, since they are related to estimates of the physical properties of individual galaxies (the stellar metallicity, the SFH, and the possible contamination by a younger subdominant population), which are uncorrelated for objects at different redshifts. \(\mathrm{Cov}_{ij}^{\mathrm{model}}\), instead, has been conservatively estimated assuming that the contributions at different redshifts are fully correlated. In the published analyses of currently available datasets, the contributions \(\mathrm{Cov}_{ij}^\mathrm{met}\), \(\mathrm{Cov}_{ij}^{\mathrm{SFH}}\) and \(\mathrm{Cov}_{ij}^{\mathrm{young}}\) are already included in the errors provided (and discussed later in Sect. 3.1.5 and Table 1); the other terms instead have to be included following these recipes (Footnote 3).
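A minimal sketch of how the total covariance could be assembled under the assumptions just stated: the metallicity, SFH, and rejuvenation terms enter as diagonal fractional errors, while each model term (SPS, IMF, stellar library) is treated as fully correlated, \(\mathrm{Cov}_{ij}=\eta _iH_i\,\eta _jH_j\), with \(\eta \) a (possibly redshift-dependent) fractional error. The fractional values are inputs the user must supply (e.g., from the recipes of Moresco et al. 2020); for published datasets whose quoted errors already include the diagonal terms, those should be left out to avoid double counting. Names and numbers are illustrative.

```python
import numpy as np


def cc_total_covariance(H, sigma_stat, diag_fracs=(), model_fracs=()):
    """Total CC covariance: statistical + diagonal systematics + fully correlated model terms.

    H           : H(z) values (km/s/Mpc), shape (n,).
    sigma_stat  : statistical errors, shape (n,).
    diag_fracs  : fractional errors treated as uncorrelated (e.g. metallicity, SFH, young).
    model_fracs : fractional errors treated as fully correlated across redshift
                  (e.g. SPS, IMF, stellar library); scalars or arrays of shape (n,).
    """
    H = np.asarray(H, dtype=float)
    cov = np.diag(np.asarray(sigma_stat, dtype=float) ** 2)
    for f in diag_fracs:
        cov += np.diag((np.asarray(f, dtype=float) * H) ** 2)
    for f in model_fracs:
        eta_H = np.asarray(f, dtype=float) * H
        cov += np.outer(eta_H, eta_H)   # rank-one, fully correlated block
    return cov


H = [100.0, 200.0]
sigma_stat = [5.0, 10.0]
cov = cc_total_covariance(H, sigma_stat, diag_fracs=[0.01], model_fracs=[0.05])
print(cov)  # off-diagonal entries come only from the model term
```

Treating each model component as a rank-one outer product is the fully correlated limit described above; any less conservative assumption would require an explicit correlation matrix between redshifts.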

Other effects that have been demonstrated to have a negligible impact on the measurement, but which should be mentioned, are the following:

- progenitor bias. A common observational effect that can bias the analysis of early-type galaxies is the so-called progenitor bias (Franx and van Dokkum 1996; van Dokkum et al. 2000): a given selection criterion might be effectively more stringent when applied at high redshift than at low redshift. In particular, high-redshift objects that pass the sample selection might be older and more massive than those selected at low redshift, effectively representing the progenitor population of the low-redshift sample. This bias becomes increasingly relevant when comparing objects spanning a wide range of redshifts and, if not properly taken into account, could significantly affect the CC approach, since it flattens the \(age-z\) relation, changing its slope and hence producing a biased H(z). The differential approach at the basis of CC by construction minimizes this effect, since the galaxies being compared always span a very small range of redshifts. A quantitative estimate of its impact on the CC approach was obtained in Moresco et al. (2012a) with two different methods. On the one hand, the analysis was repeated considering only the upper envelope of the \(age-z\) distribution, which, by construction, cannot be biased by the progenitor bias effect. The resulting H(z) is in perfect agreement with the baseline analysis, albeit with larger error bars due to the lower statistics on which the upper-envelope approach is based (see Sect. 3.1.2). On the other hand, the expected change in slope of the \(age-z\) relation was estimated assuming a very conservative change in formation times for the CCs considered. In this conservative estimate, the error induced on H(z) was found to be \(\sim \)1% on average, which is negligible compared to the rest of the error budget.

- mass-dependence. A final effect to consider is whether the results show any mass-dependent bias. This effect has been explored thoroughly in many analyses (Moresco et al. 2012a, 2016b; Borghi et al. 2022a), and in all cases the H(z) values measured in different mass (or velocity dispersion) bins have been found to be mutually consistent, with no systematic trends. This agrees with expectations, since CC are selected to be very massive galaxies (\(\log (M/M_{\odot })\gtrsim 11\)), forming very homogeneous systems, as discussed in Sect. 3.1.2.

3.1.5 Main results

The first measurement with the CC method dates back to Simon et al. (2005), who analyzed a sample of passively evolving galaxies from the luminous red galaxy (LRG) sample of the SDSS early data release, combined with higher-redshift data from the GDDS survey and archival data. The ages of these objects were estimated with full-spectrum fitting using SPEED models (Jimenez et al. 2004), estimating the age of the oldest components while marginalizing over metallicity and SFH. Applying the CC approach, 8 H(z) measurements were then obtained in the range \(0<z<1.75\) (Footnote 4).

Similarly, Zhang et al. (2014) and Ratsimbazafy et al. (2017) also determined new values of the Hubble parameter by measuring dt with a full-spectrum fitting technique. They studied a sample of \(\sim \)17,000 LRGs from SDSS Data Release Seven (DR7) and of \(\sim \)13,000 LRGs from the 2dF-SDSS LRG and QSO catalog, respectively, both extracting differential age information for their sample using the ULySS code and BC03 models, and obtaining four additional estimates of H(z) at \(z<0.3\) and one at \(z\sim 0.47\), respectively.

The results by Moresco et al. (2012a), Moresco (2015), and Moresco et al. (2016b) are instead based on the analysis of the D4000 feature described in Sect. 3.1.3. The first paper examined a compilation of very massive and passively evolving galaxies extracted from the SDSS Data Release 6 Main Galaxy Sample and Data Release 7 LRG sample, and from a combination of spectroscopic surveys at higher redshifts (zCOSMOS, K20, UDS), comprising in total \(\sim \)11,000 galaxies in the range \(0.15<z<1.3\). The second paper analyzed a significantly smaller sample (29 objects) of massive and passive galaxies available in the literature at very high redshifts, \(z>1.4\). Finally, the last paper considered the SDSS BOSS Data Release 9, selecting a sample of more than 130,000 CC in the range \(0.3<z<0.55\). In total, these works present 15 additional H(z) estimates in the range \(0.18<z<2\).

Most recently, Borghi et al. (2022b) explored a new approach, using a Lick-index-based analysis applied to CC extracted from the LEGA-C survey to derive information on the physical properties (age, metallicity, and \(\alpha \)-enhancement) of the population; in Borghi et al. (2022a), the resulting dt measurements were used to obtain a new estimate of the Hubble parameter.

The current, most up-to-date compilation of H(z) measurements obtained with CC is shown in Fig. 5 and provided in Table 1. All these measurements have been obtained assuming one SPS model (BC03, Bruzual and Charlot 2003), except for those of Moresco et al. (2012a), Moresco (2015), and Moresco et al. (2016b), which are also available for a different set of SPS models (M11, Maraston and Strömbäck 2011). Since, as discussed above, the assumed SPS model is one of the main sources of systematic uncertainty, a coherent analysis requires adding the systematic off-diagonal component to the covariance, following the recommendations of Sect. 3.1.4 and the recipes presented in Moresco et al. (2020).
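The general structure of such a covariance can be illustrated with a short Python sketch. It assumes, for illustration only, that a given systematic (for instance the choice of SPS model) shifts each \(H(z_i)\) by a fractional amount \(\eta_i\) that is fully correlated across redshift, so that its contribution is the rank-one matrix \(\eta_i H(z_i)\,\eta_j H(z_j)\) added to the diagonal statistical part. The function names and the numbers in the usage lines are hypothetical placeholders, not values from Table 1 or from Moresco et al. (2020); the actual recipe is the one of Sect. 3.1.4.

```python
import numpy as np

def cc_covariance(H, sigma_stat, eta_sys):
    """Total covariance: diagonal statistical part plus a fully correlated systematic part.

    H          : measured H(z_i) [km/s/Mpc]
    sigma_stat : uncorrelated (diagonal) errors [km/s/Mpc]
    eta_sys    : assumed fractional systematic shift of each point (illustrative)
    """
    H = np.asarray(H, dtype=float)
    shift = np.asarray(eta_sys, dtype=float) * H            # absolute shift per point
    cov_stat = np.diag(np.asarray(sigma_stat, dtype=float) ** 2)
    cov_sys = np.outer(shift, shift)                         # off-diagonal terms appear here
    return cov_stat + cov_sys

def chi2(H_obs, H_model, cov):
    """Generalized chi^2 = r^T C^{-1} r, solving the linear system instead of inverting C."""
    r = np.asarray(H_obs, dtype=float) - np.asarray(H_model, dtype=float)
    return float(r @ np.linalg.solve(cov, r))

# Hypothetical three-point example (placeholder numbers).
H = np.array([80.0, 100.0, 150.0])
sigma = np.array([4.0, 5.0, 10.0])
cov = cc_covariance(H, sigma, eta_sys=[0.05, 0.05, 0.05])
cov_diag_only = np.diag(sigma ** 2)
shifted = 1.05 * H   # a coherent 5% offset along the modelled systematic direction
print(chi2(shifted, H, cov_diag_only), chi2(shifted, H, cov))
```

Because the residual lies exactly along the assumed systematic direction, the full covariance penalizes it much less than the diagonal-only version: neglecting the off-diagonal term would make a coherent, model-induced offset look like a significant deviation.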

These data have been widely used in the literature in a variety of applications, which we proceed to present below.

3.1.5.1 Independent estimates of the Hubble constant \(H_0\)

In the context of the well-established tension between early- and late-Universe determinations of the Hubble constant (Verde et al. 2019; Di Valentino et al. 2021), independent estimates of \(H_0\) are of great importance, as they provide additional information to test or constrain the underlying cosmological models. Because CCs provide cosmology-independent estimates of H(z) whose calibration depends neither on early-time physics nor on the traditional cosmic distance ladder, extrapolating their H(z) to \(z=0\) yields an independent value of \(H_0\) that could inform the current debate over the Hubble tension.

This analysis can be done either by directly fitting the CC data with a cosmological model (Moresco et al. 2011, 2012b, 2016a) or, to take full advantage of the cosmology-independent approach, by employing extrapolation techniques that do not rely on cosmological models, such as Gaussian Processes or Padé approximants (Verde et al. 2014; Montiel et al. 2014; Haridasu et al. 2018; Gómez-Valent and Amendola 2018; Capozziello and Ruchika 2019; Sun et al. 2021b; Bonilla et al. 2021; Colgáin and Sheikh-Jabbari 2021), or by using alternative diagnostics (e.g., see Sapone et al. 2014; Krishnan et al. 2021). For the currently published analyses using CC alone, the error bars on \(H_0\), once systematic uncertainties are included, are still too large to weigh in on the tension.
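As an illustration of the model-independent route, the following Python sketch performs Gaussian Process regression of H(z) with a squared-exponential kernel and evaluates the posterior at \(z=0\). It is only a sketch under stated assumptions: the kernel hyperparameters are fixed by hand (published analyses optimize or marginalize over them, and the kernel choice itself matters), the prior mean is taken to be the sample mean, and the input data are noiseless mock values from an assumed flat \(\Lambda \)CDM model rather than the real CC compilation.

```python
import numpy as np

def sq_exp_kernel(x1, x2, sigma_f, length):
    """Squared-exponential covariance k(x, x') = sigma_f^2 exp(-(x - x')^2 / (2 length^2))."""
    d = np.subtract.outer(x1, x2)
    return sigma_f ** 2 * np.exp(-0.5 * (d / length) ** 2)

def gp_predict(z_obs, H_obs, sigma_H, z_new, sigma_f=100.0, length=1.5):
    """GP posterior mean and standard deviation of H(z) at z_new (fixed, assumed hyperparameters)."""
    z_obs = np.asarray(z_obs, dtype=float)
    H_obs = np.asarray(H_obs, dtype=float)
    z_new = np.atleast_1d(np.asarray(z_new, dtype=float))
    mean = H_obs.mean()                                   # constant prior mean (assumption)
    K = sq_exp_kernel(z_obs, z_obs, sigma_f, length) + np.diag(np.asarray(sigma_H, float) ** 2)
    Ks = sq_exp_kernel(z_new, z_obs, sigma_f, length)
    Kss = sq_exp_kernel(z_new, z_new, sigma_f, length)
    L = np.linalg.cholesky(K)                             # K = L L^T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, H_obs - mean))
    mu = mean + Ks @ alpha
    v = np.linalg.solve(L, Ks.T)
    var = np.diag(Kss) - np.sum(v ** 2, axis=0)
    return mu, np.sqrt(np.maximum(var, 0.0))

# Noiseless mock data from an assumed flat LCDM model with 2% errors (illustrative only).
H0_fid, Om_fid = 67.4, 0.315
z = np.linspace(0.1, 2.0, 20)
H = H0_fid * np.sqrt(Om_fid * (1 + z) ** 3 + 1 - Om_fid)
sig = 0.02 * H
mu0, sd0 = gp_predict(z, H, sig, 0.0)
print(f"H0 ~ {mu0[0]:.1f} +/- {sd0[0]:.1f} km/s/Mpc")
```

The extrapolated uncertainty at \(z=0\) depends on the hyperparameters and on how close to \(z=0\) the data reach, which is one reason why the choice of reconstruction method is part of the systematic budget of such \(H_0\) estimates.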

3.1.5.2 Comparison with independent probes

With respect to other probes, one of the strengths of the CC method is that it directly probes the Hubble parameter H(z), rather than one of its integrals (see, e.g., Eqs. (14)). As a consequence, as highlighted in Jimenez and Loeb (2002), it is more sensitive to the cosmological parameters that affect the evolution of the expansion history: a difference of 5% in luminosity distance corresponds to a difference of 10% in H(z). Several works have compared the performance of CC in constraining cosmological parameters with that of other probes. In Moresco et al. (2016b), constraints from CC were compared with those from SNe Ia and BAO for different cosmological models, finding that for a flat \(w_{0}w_{a}\)CDM model the accuracy on cosmological parameters obtainable from CC and BAO is comparable, and that, in comparison with other probes, CC are particularly useful to measure \(H_0\) and \(\varOmega _{\mathrm{m}}\). Similar conclusions were reached by Vagnozzi et al. (2021) and Gonzalez et al. (2021), who found the results from CC in good agreement with those of BAO and SNe over a wide range of cosmological models. Lin et al. (2020) focused the comparison on \(H_0\) and \(\varOmega _{\mathrm{m}}\), confirming good consistency between CC and an even broader collection of cosmological probes, and also highlighting the crucial synergy between the various probes.
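The difference in sensitivity between H(z) and its integrals can be checked numerically with a small Python sketch. It assumes a flat wCDM model with constant w and illustrative fiducial values, and compares the fractional change in H(z) and in the luminosity distance \(d_L\) when w is moved from \(-1\) to \(-0.8\); because \(d_L\) averages \(1/H\) over \(0<z'<z\), its response is diluted. The specific numbers differ from the 5%/10% example quoted above, which refers to a different comparison.

```python
import numpy as np

C_KMS = 299792.458  # speed of light [km/s]

def E(z, Om, w):
    """Dimensionless expansion rate H(z)/H0 for flat wCDM with constant w."""
    return np.sqrt(Om * (1 + z) ** 3 + (1 - Om) * (1 + z) ** (3 * (1 + w)))

def lum_dist(z, H0, Om, w, n=2000):
    """Luminosity distance [Mpc] in flat wCDM, trapezoidal integration of 1/E."""
    zz = np.linspace(0.0, z, n)
    f = 1.0 / E(zz, Om, w)
    integral = np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(zz))
    return (1 + z) * C_KMS / H0 * integral

H0, Om = 67.4, 0.315   # illustrative fiducial values (assumption)
for z in (0.5, 1.0):
    dH = abs(E(z, Om, -0.8) / E(z, Om, -1.0) - 1)
    dDL = abs(lum_dist(z, H0, Om, -0.8) / lum_dist(z, H0, Om, -1.0) - 1)
    print(f"z={z}: |dH/H| = {100 * dH:.1f}%, |dD_L/D_L| = {100 * dDL:.1f}%")
```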

3.1.5.3 Constraints on cosmological parameters using CC alone and in combination with independent probes

CC are a very attractive probe for studying non-standard cosmological models, since no cosmological assumption is made in the derivation of H(z). For this reason, several works have explored how they can be used to place constraints on, and provide evidence for or against, various cosmological models: from reconstructing the expansion history of the Universe with a cosmographic approach (Capozziello et al. 2018, 2019), to testing the consistency with concordance models (Seikel et al. 2012) or the spatial curvature of the Universe (Vagnozzi et al. 2021; Arjona and Nesseris 2021), to exploring more exotic cosmological models (such as, but not limited to, interacting dark energy models; see e.g. Bilicki and Seikel 2012; Nunes et al. 2016; Colgáin and Yavartanoo 2019; von Marttens et al. 2019; Yang et al. 2019; Benetti and Capozziello 2019; Aljaf et al. 2021; Ayuso et al. 2021; Reyes and Escamilla-Rivera 2021; Benetti et al. 2021), to directly measuring cosmological parameters (see, e.g., Sect. 3.1.6). In particular, CC have been found to be extremely useful in combination with other cosmological probes (SNe, BAO, CMB) to increase the accuracy on cosmological parameters (such as \(\varOmega _k\), \(\varOmega _{\mathrm{m}}\) and \(H_0\); see, e.g., Haridasu et al. 2018; Gómez-Valent and Amendola 2018; Lin et al. 2021), to determine the time evolution of the dark energy EoS (Moresco et al. 2016a; Zhao et al. 2017; Di Valentino et al. 2020; Colgáin et al. 2021), and to provide tighter constraints on the number of relativistic species and on the sum of neutrino masses by breaking existing degeneracies between parameters (Moresco et al. 2012b, 2016a). As suggested by Linder (2017), the measured H(z) data have also been combined with the growth rate of cosmic structures to construct a new diagram to disentangle cosmological models (Moresco and Marulli 2017; Basilakos and Nesseris 2017; Bessa et al. 2021).
Finally, the CC data, in combination with BAO and SNe, have also proven extremely useful for testing the distance-duality relation and measuring the transparency (or, equivalently, the opacity) of the Universe (Holanda et al. 2013; Santos-da-Costa et al. 2015; Chen et al. 2016b; Vavryčuk and Kroupa 2020; Bora and Desai 2021; Mukherjee and Mukherjee 2021; Renzi et al. 2021).
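A minimal Python sketch of how H(z) feeds these tests, under explicit assumptions: in a spatially flat Universe, integrating \(c/H(z)\) gives the comoving distance and hence a CC-based luminosity distance \(d_L^{\rm CC}=(1+z)\,c\int_0^z dz'/H(z')\), which is unaffected by photon loss. If the observed SN distances are dimmed by an opacity \(\tau(z)\), so that \(d_L^{\rm SN}=d_L^{\rm true}e^{\tau/2}\), comparing the two gives \(\tau=2\ln(d_L^{\rm SN}/d_L^{\rm CC})\). The tabulated H(z) must start at \(z=0\), so in practice it would come from a smooth reconstruction of the CC data (e.g. a Gaussian Process) rather than from the raw points; the helper functions are hypothetical, and the analyses cited above use more careful parametrizations and error propagation.

```python
import numpy as np

C_KMS = 299792.458  # speed of light [km/s]

def dl_from_hz(z_grid, H_grid):
    """Luminosity distance [Mpc] at each node of z_grid from tabulated H(z) [km/s/Mpc].

    Assumes a spatially flat Universe; z_grid must start at 0 and increase.
    """
    z = np.asarray(z_grid, dtype=float)
    f = C_KMS / np.asarray(H_grid, dtype=float)
    steps = 0.5 * (f[1:] + f[:-1]) * np.diff(z)          # trapezoidal rule
    d_c = np.concatenate(([0.0], np.cumsum(steps)))       # comoving distance
    return (1.0 + z) * d_c

def opacity(dl_sn, dl_cc):
    """Opacity from d_L,SN = d_L,CC * exp(tau / 2) (assumed photon-loss model)."""
    return 2.0 * np.log(np.asarray(dl_sn, dtype=float) / np.asarray(dl_cc, dtype=float))

# Check against the analytic Einstein-de Sitter result, H(z) = H0 (1 + z)^{3/2}.
H0 = 70.0
z = np.linspace(0.0, 1.0, 1001)
dl_cc = dl_from_hz(z, H0 * (1.0 + z) ** 1.5)
dl_eds = (1.0 + z) * 2.0 * C_KMS / H0 * (1.0 - 1.0 / np.sqrt(1.0 + z))
# Mock SN distances dimmed by tau = 0.1 (skip z = 0, where both distances vanish).
tau = opacity(dl_cc[1:] * np.exp(0.05), dl_cc[1:])
```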

3.1.6 Forecasting the future impact of cosmic chronometers

Currently, there are two main limitations in the CC method: i) the error bars are dominated by the uncertainty due to metallicity and SPS model, and ii) there is no dedicated survey (as there is for SNe or BAO) to obtain a statistically significant sample of CC with high spectral S/N and resolution. For the first limitation, as highlighted in Moresco et al. (2020), there is a clear path to progress, which involves a meticulous and detailed analysis and comparison of the various models with high-resolution, high-S/N observations of CC spectra and SEDs. This program appears feasible with current or forthcoming instruments and facilities (e.g., X-Shooter, MOONS), possibly combined with some dedicated observations.

On the other hand, large campaigns to observe massive and passive galaxies with high-S/N, high-resolution spectra are not currently foreseen, and for this science case one has to rely on legacy data from other planned surveys. Nevertheless, future missions, either already planned (like Euclid, Laureijs et al. 2011) or under study (ATLAS probe, Wang et al. 2019), as well as large data sets not yet fully exploited (SDSS BOSS Data Release 16, Ahumada et al. 2020), could provide significantly larger samples of massive and passive galaxies, either in redshift ranges previously poorly mapped (\(1.5<z<2\)) or in ranges previously exploited with much lower statistics (\(0.2<z<0.8\)).

In the following, we therefore explore two different scenarios, constructing the corresponding simulations and extracting forecasts on the expected performance of CC with future data. In the first scenario, we assume that the available spectroscopic surveys at redshifts \(0.2<z<0.8\) (low-z, e.g. BOSS DR16) can be exploited to obtain a sample large enough to measure 10 H(z) points with a statistical error of 1%, including in the systematic error budget the contributions of both the IMF and the SPS models (as suggested by Moresco et al. 2020); note that already in the analysis by Moresco et al. (2016b) the statistical error was of the order of 2–3%. In the second scenario, we simulate CC measurements as they will be enabled by future spectroscopic surveys at higher redshifts (high-z), producing 5 H(z) points with a statistical error of 5% at \(1.5<z<2.1\); as an example, Euclid is expected to provide, especially with its Deep Fields, up to a few thousand very massive and passive galaxies in this redshift range, increasing the currently available statistics by two orders of magnitude (Laureijs et al. 2011; Wang et al. 2019). As a final step, we analyze the combined measurements, together with a more optimistic scenario in which the systematic error component is assumed to be minimized following the recipes described in Sect. 3.1.4 (in particular, assuming the uncertainty due to SPS models to be resolved, so that only the covariance due to the IMF contribution remains).
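The two mock samples can be sketched in Python as follows. This is a hedged illustration, not the simulation code behind Fig. 6: it assumes the points sit at the centres of equal-width bins spanning the quoted redshift ranges, uses a flat \(\Lambda \)CDM fiducial with \(H_0=67.36\) km/s/Mpc and \(\varOmega _{\mathrm{m}}=0.3153\) (Planck best-fit values, taken here as an assumption), and generates only the statistical error; the systematic (IMF, SPS) covariance would be added separately following Sect. 3.1.4.

```python
import numpy as np

H0_FID, OM_FID = 67.36, 0.3153   # assumed flat LCDM fiducial

def hz_lcdm(z, H0=H0_FID, Om=OM_FID):
    """Fiducial H(z) [km/s/Mpc] for flat LCDM."""
    return H0 * np.sqrt(Om * (1 + z) ** 3 + 1 - Om)

def mock_cc(z_min, z_max, n_points, frac_err, rng=None):
    """Mock CC sample at bin centres in (z_min, z_max) with fractional statistical error.

    If rng is given, Gaussian scatter is added; otherwise points lie on the fiducial curve.
    """
    edges = np.linspace(z_min, z_max, n_points + 1)
    z = 0.5 * (edges[1:] + edges[:-1])
    H_true = hz_lcdm(z)
    sigma = frac_err * H_true
    H = H_true if rng is None else H_true + rng.normal(0.0, sigma)
    return z, H, sigma

rng = np.random.default_rng(42)
low_z = mock_cc(0.2, 0.8, 10, 0.01, rng)    # scenario 1: 10 points, 1% statistical error
high_z = mock_cc(1.5, 2.1, 5, 0.05, rng)    # scenario 2: 5 points, 5% statistical error
```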

The simulated H(z) data are generated with a given error (uncorrelated across data points) assuming the \(\Lambda \)CDM cosmological parameters from Planck Collaboration et al. (2020), and are shown, together with the current CC measurements, in the larger panel of Fig. 6. The associated covariance matrix is then calculated as presented in Sect. 3.1.4, including the contributions discussed above. To assess the capability of the CC method to constrain cosmological parameters, we explore the constraints that current and future data can provide on an open wCDM cosmology, in which both the spatial curvature density \(\varOmega _k\) and the dark energy EoS are free to vary. We consider flat priors on [\(H_0\), \(\varOmega _{\mathrm{m}}\), \(\varOmega _{\mathrm{de}}\), \(w_0\)] (the free parameters of our fit) and analyze the data in a Bayesian framework with a Markov Chain Monte Carlo (MCMC) approach, using the public Python code emcee (Foreman-Mackey et al. 2013). The results are shown in Fig. 6 and in Table 2.
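The structure of this fit can be sketched in Python. The log-posterior below implements flat priors on (\(H_0\), \(\varOmega _{\mathrm{m}}\), \(\varOmega _{\mathrm{de}}\), \(w_0\)) and a Gaussian likelihood with \(E^2(z)=\varOmega _{\mathrm{m}}(1+z)^3+\varOmega _k(1+z)^2+\varOmega _{\mathrm{de}}(1+z)^{3(1+w_0)}\) and \(\varOmega _k=1-\varOmega _{\mathrm{m}}-\varOmega _{\mathrm{de}}\). Two loud caveats: the prior ranges are illustrative assumptions, not those of the original analysis, and a plain random-walk Metropolis sampler is swapped in for emcee's ensemble sampler only to keep the sketch dependency-free; the same log-posterior could be passed to emcee instead.

```python
import numpy as np

# Illustrative flat prior ranges (assumptions, not those of the original analysis).
BOUNDS = np.array([[50.0, 100.0],   # H0 [km/s/Mpc]
                   [0.0, 1.0],      # Omega_m
                   [0.0, 2.0],      # Omega_de
                   [-3.0, 0.0]])    # w0

def log_posterior(theta, z, H_obs, cov_inv):
    """Log-posterior (up to a constant) for open wCDM, flat priors, Gaussian likelihood."""
    theta = np.asarray(theta, dtype=float)
    if np.any(theta < BOUNDS[:, 0]) or np.any(theta > BOUNDS[:, 1]):
        return -np.inf
    H0, Om, Ode, w0 = theta
    Ok = 1.0 - Om - Ode                                   # curvature density
    E2 = Om * (1 + z) ** 3 + Ok * (1 + z) ** 2 + Ode * (1 + z) ** (3 * (1 + w0))
    if np.any(E2 <= 0):                                   # unphysical expansion history
        return -np.inf
    r = H_obs - H0 * np.sqrt(E2)
    return -0.5 * float(r @ cov_inv @ r)

def metropolis(log_post, theta0, step, n_steps, rng, *args):
    """Random-walk Metropolis sampler; theta0 must have a finite log-posterior."""
    theta = np.asarray(theta0, dtype=float)
    lp = log_post(theta, *args)
    chain = np.empty((n_steps, theta.size))
    for i in range(n_steps):
        proposal = theta + step * rng.normal(size=theta.size)
        lp_prop = log_post(proposal, *args)
        if np.log(rng.uniform()) < lp_prop - lp:          # accept/reject
            theta, lp = proposal, lp_prop
        chain[i] = theta
    return chain

# Noiseless mock data from an assumed flat LCDM fiducial (Omega_de = 1 - Omega_m, w0 = -1).
rng = np.random.default_rng(1)
z = np.linspace(0.1, 2.0, 15)
theta_fid = np.array([67.36, 0.3153, 1.0 - 0.3153, -1.0])
H_obs = theta_fid[0] * np.sqrt(theta_fid[1] * (1 + z) ** 3 + theta_fid[2])
cov_inv = np.diag(1.0 / (0.02 * H_obs) ** 2)              # 2% diagonal errors (assumption)
chain = metropolis(log_posterior, theta_fid, np.array([0.5, 0.02, 0.05, 0.05]),
                   2000, rng, z, H_obs, cov_inv)
# With emcee, the same function could be used as
# emcee.EnsembleSampler(nwalkers, 4, log_posterior, args=(z, H_obs, cov_inv)).
```

In a real analysis the inverse covariance would be the full matrix of Sect. 3.1.4 rather than a diagonal one, and convergence would be checked (e.g. through autocorrelation times) before quoting constraints.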

As a first comment, we note that the reduced \(\chi ^2\) (\(\chi ^2_\mathrm{red}\)) of the analysis of the current dataset in the flat \(\Lambda \)CDM model is smaller than expected, with a value of \(\sim \)0.5. This is because, in some of the CC analyses (and hence for some of the H(z) points), some sources of error have been estimated rather conservatively. This is true in particular for the diagonal part of the covariance matrix, where the error on the metallicity (which in most cases drives the total error) has been overestimated in some works, either because a broad prior was assumed when an accurate measurement was not feasible (Moresco 2015), or because additional error contributions (e.g., those due to SPS modeling or to the SFH estimate, Moresco et al. 2016b; Borghi et al. 2022a) were propagated into it, so that they end up being counted twice once the full covariance matrix is considered. This is confirmed by the fact that the effect disappears for the analyses in which a full metallicity estimate was available and the error was not overestimated, which yield reasonable values of \(\chi ^2_{\mathrm{red}}\), e.g., \(\chi ^2_\mathrm{red}\sim 1.1\) for the Simon et al. (2005) dataset and \(\chi ^2_\mathrm{red}\sim 0.75\) for Moresco et al. (2012a). This effect, however, does not have a significant impact on the results, since the points with larger errors (which drive \(\chi ^2_{\mathrm{red}}\) to smaller values) are also the least relevant for the cosmological constraints, and the cosmological analyses of different CC subsamples provide compatible results.
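For reference, a minimal Python sketch of \(\chi ^2_\mathrm{red}\) with a full covariance, using the standard definition \(\chi ^2/(N-p)\) for N data points and p free parameters. Since \(\chi ^2\) scales as \(1/k^2\) when every error is inflated by a factor k, uniformly overestimating all errors by about \(\sqrt{2}\) would be enough to bring an expected \(\chi ^2_\mathrm{red}\approx 1\) down to \(\approx 0.5\). In the real compilation only some points are affected, so the mock numbers below are purely illustrative.

```python
import numpy as np

def reduced_chi2(H_obs, H_model, cov, n_params):
    """chi^2 / (N - n_params), with chi^2 = r^T C^{-1} r for residuals r."""
    r = np.asarray(H_obs, dtype=float) - np.asarray(H_model, dtype=float)
    chi2 = float(r @ np.linalg.solve(cov, r))
    return chi2 / (r.size - n_params)

# Mock residuals drawn with the "true" errors, then analysed with inflated errors.
rng = np.random.default_rng(0)
sigma_true = np.full(30, 5.0)                      # km/s/Mpc (illustrative)
H_model = np.linspace(70.0, 200.0, 30)
H_obs = H_model + rng.normal(0.0, sigma_true)
honest = reduced_chi2(H_obs, H_model, np.diag(sigma_true ** 2), n_params=2)
inflated = reduced_chi2(H_obs, H_model, np.diag((np.sqrt(2.0) * sigma_true) ** 2), n_params=2)
print(f"chi2_red with true errors: {honest:.2f}, inflated by sqrt(2): {inflated:.2f}")
```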

As discussed in Moresco et al. (2016a), H(z) measurements at low redshift are crucial to better constrain the intercept of the Hubble parameter at \(z\sim 0\), while measurements at higher redshift become increasingly important to determine the shape of the H(z) evolution, which depends critically on the dark energy and dark matter parameters. As expected, the simulated low-z CC data improve the current accuracy on the Hubble constant by a factor of \(\gtrsim 2\), by increasing the precision of the extrapolation of H(z) to \(z\sim 0\). On the other hand, the high-z simulated data become fundamental to determine the dark energy EoS, especially when combined with lower-redshift data, improving the accuracy on w from 38 to 29% and on \(\varOmega _{\mathrm{m}}\) from 59 to 31%. In the optimistic scenario, CC data would enable an accuracy on \(H_0\) at the 3% level, and on \(\varOmega _{\mathrm{m}}\) and w of \(\sim \)30%.

Clearly, as the dimensionality of the problem decreases, the accuracy on the derived parameters increases. As a comparison, Table 2 also shows, for the current dataset and the optimistic scenarios, the constraints on \(H_0\) and \(\varOmega _{\mathrm{m}}\) achievable in a flat \(\Lambda \)CDM model. In this regime, we observe a marked improvement in the accuracy on \(\varOmega _{\mathrm{m}}\), which reaches the 3% level.
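The effect of dimensionality can be made explicit with a Fisher-matrix approximation, swapped in here for the full MCMC analysis purely for illustration. For Gaussian errors, the marginalized uncertainty on parameter a is \(\sqrt{(F^{-1})_{aa}}\), and fixing some parameters (e.g. setting \(w=-1\) to go from flat wCDM to flat \(\Lambda \)CDM) corresponds to dropping their rows and columns from F before inverting, which can never yield a larger error. The sketch assumes a flat wCDM model, diagonal 1% and 5% errors on mock points placed like the two scenarios described above, and an assumed Planck-like fiducial; it ignores the systematic covariance and curvature, so its numbers are not those of Table 2.

```python
import numpy as np

def hz_wcdm(z, H0, Om, w):
    """H(z) [km/s/Mpc] for flat wCDM with constant w."""
    return H0 * np.sqrt(Om * (1 + z) ** 3 + (1 - Om) * (1 + z) ** (3 * (1 + w)))

def fisher_matrix(z, sigma, theta, rel_step=1e-4):
    """F_ab = sum_i (dH_i/dtheta_a)(dH_i/dtheta_b) / sigma_i^2, central finite differences."""
    theta = np.asarray(theta, dtype=float)
    derivs = []
    for a in range(theta.size):
        h = rel_step * max(abs(theta[a]), 1.0)
        up, down = theta.copy(), theta.copy()
        up[a] += h
        down[a] -= h
        derivs.append((hz_wcdm(z, *up) - hz_wcdm(z, *down)) / (2 * h))
    D = np.array(derivs) / np.asarray(sigma, dtype=float)   # shape (n_params, n_data)
    return D @ D.T

def bin_centres(z_min, z_max, n):
    edges = np.linspace(z_min, z_max, n + 1)
    return 0.5 * (edges[1:] + edges[:-1])

theta_fid = np.array([67.36, 0.3153, -1.0])                 # H0, Omega_m, w (assumed)
z = np.concatenate([bin_centres(0.2, 0.8, 10), bin_centres(1.5, 2.1, 5)])
frac = np.where(z < 1.0, 0.01, 0.05)                        # 1% low-z, 5% high-z
sigma = frac * hz_wcdm(z, *theta_fid)

F = fisher_matrix(z, sigma, theta_fid)
err_wcdm = np.sqrt(np.diag(np.linalg.inv(F)))               # w marginalized over
err_lcdm = np.sqrt(np.diag(np.linalg.inv(F[:2, :2])))       # w fixed to -1
for name, e3, e2 in zip(["H0", "Omega_m"], err_wcdm[:2], err_lcdm):
    print(f"{name}: sigma(wCDM) = {e3:.3f}, sigma(LCDM) = {e2:.3f}")
```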

Fig. 6 Forecast of CC measurements with future surveys. In the bottom left panel, current CC data are shown with white points, while blue and yellow points present forecasts of the expected accuracy of the CC approach at low redshift (with an accurate re-analysis of current surveys, e.g. SDSS) and from future surveys like the ESA Euclid mission (Laureijs et al. 2011) or the ATLAS probe mission (Wang et al. 2019), respectively. For the blue points, the error bars are smaller than the points. The outer plots show the constraints for an open wCDM cosmology that can be obtained with current data (gray contours) and with different combinations of the simulated datasets.

3.2 Quasars