Abstract

We study the computation of the zero set of the Bargmann transform of a signal contaminated with complex white noise, or, equivalently, the computation of the zeros of its short-time Fourier transform with Gaussian window. We introduce the adaptive minimal grid neighbors algorithm (AMN), a variant of a method that has recently appeared in the signal processing literature, and prove that with high probability it computes the desired zero set. More precisely, given samples of the Bargmann transform of a signal on a finite grid with spacing \(\delta \), AMN is shown to compute the desired zero set up to a factor of \(\delta \) in the Wasserstein error metric, with failure probability \(O(\delta ^4 \log ^2(1/\delta ))\). We also provide numerical tests and comparison with other algorithms.

Similar content being viewed by others

1 Introduction

1.1 The Bargmann Transform and its Zeros

The Bargmann transform of a real variable function \(f \in L^2(\mathbb {R})\) is the entire function

Originally introduced as a link between configuration and phase space in quantum mechanics [7], the Bargmann transform was later recognized as a powerful tool in signal analysis [11] because it encodes the correlations between the signal f and the time–frequency shifts of the Gaussian function \(g(t) = \big (\tfrac{2}{\pi }\big )^{\frac{1}{4}} \,e^{-t^2}\,\):

In the jargon of time–frequency analysis, the right-hand side of (1.2) is known as the short-time Fourier transform of f with Gaussian window, and measures the contribution to f(t) of the frequency y near \(t=x\).

In practice, the values of the short-time Fourier transform of a signal f are only available on (a finite subset of) a grid

and possibly only approximately so due to numerical errors. The goal of Gabor analysis is to extract useful information about f from such limited measurements. Equivalently, by (1.2), the task is to capture the analytic function F given a limited number of its samples on a grid. This second point of view led to the most conclusive results in Gabor theory, such as the complete description of all grids (1.3) for which the Gabor transform fully retains the original analog signal f [11, 21, 23, 24].

While Gabor signal analysis has traditionally focused on large values of the short-time Fourier transform (1.2), recent work has brought to the foreground the rich information stored in its zeros, especially when the signal is contaminated with noise. Heuristically, the zeros of the Bargmann transform of noise exhibit a rather rigid random pattern with predictable statistics, from which the presence of a deterministic signal can be recognized as a salient local perturbation [13, 14, 16]. Remarkably, the Bargmann transform of white noise has been identified as a certain Gaussian analytic random function [5, 6], and consequently, the well-researched statistics of their zero sets [19, 22] can be leveraged in practice [15, Chapters 13 and 15], [5]. The particular structure observed in the zeros of the Bargmann transform under even a moderate amount of white noise has also been invoked as explanation for the sparsity resulting from certain nonlinear procedures to sharpen spectrograms [16], as the zeros of the Bargmann transform are repellers of the reassignment vector field [15, Chapter 12]. The practical exploitation of such insights requires an effective computational link between finitely given data on the one hand and zeros of Bargmann transforms of analog signals on the other.

1.2 Computation of Zero Sets

Suppose that the values of the Bargmann transform F of a signal f are given on a grid

and we wish to compute an approximation of \(\{F=0\}\), the zero set of F, within the square

More realistically, we only have access to samples of F on those grid points near the computation domain, e.g., on

The inverse of the spacing of the grid, \(1/\delta \), will be called the resolution of the data.

1.2.1 Thresholding

The most naive approach to compute \(\{F=0\}\) is thresholding: one selects all grid points \(\lambda \) such that \(|F(\lambda )|\) is below a certain threshold \(\varepsilon >0\):

The normalizing weight \(e^{-\frac{1}{2}|\lambda |^2}\) is motivated by (1.2), as the short-time Fourier transform of a typical signal can be expected to be bounded. One disadvantage of this approach is that it requires an educated choice for the threshold \(\varepsilon \). Moreover, computations with various reasonable choices of thresholds, such as quantiles of \(e^{-\frac{1}{2} |\lambda |^2} |F(\lambda )|\) calculated over all grid points \(\lambda \), either fail to compute many of the zeros or capture too many points (see Fig. 1).

1.2.2 Extrapolation

One may consider using the samples of F on the finite grid (1.6) to reconstruct the signal f, resample F at arbitrarily high density, and thus calculate more easily the zero set \(\{F=0\}\). However, computation of zeros through extrapolation may be inaccurate: while the samples of F on the infinite grid (1.4) determine F as soon as \(\sqrt{\pi } \cdot \delta < 1\) [11, 21, 24], the truncation errors involved in the approximation of F near \(\Omega _L\) from finite data (1.6) can only be neglected at very high-resolution \(1/\delta \). Even if the values of F are successfully extrapolated to a higher resolution grid, the remaining computation is still not trivial, as, for example, simple thresholding may fail even at high resolution (see Fig. 4 and Sect. 5).

1.2.3 Minimal Grid Neighbors

A greatly effective numerical recipe for the computation of zeros of the Bargmann transform can be found in the code accompanying [13]—although not explicitly described in the text. A grid point \(\lambda \) is selected as a numerical approximation of a zero if \(e^{-\frac{1}{2} |\lambda |^2} |F(\lambda )|\) is minimal among grid neighbors, i.e.,

where \(|z|_\infty = \max \{|x|,|y|\}\). The subset of points that pass the test furnish the computation of \(\{F=0\}\). This method, which we call minimal grid neighbors (MGN), performs impressively as long as the grid resolution is moderately high. Indeed, we understand that the method is behind the simulations in [15, Chapter 15] which quite faithfully reproduce the statistics of the zeros of the Bargmann transform of complex white noise (that are known analytically [5, 19]). The MGN algorithm was also used to produce the plots in [5], as pointed out in [5, Sect. 5.1.1.]; see also [6, Sect. 5], [20, Sect. IV], and [1]. Heuristically, the test (1.8) succeeds in identifying zeros due to the analyticity of F, which implies that \(|F(z)| e^{-\frac{1}{2} |z|^2}\) does not have nonzero local minima [19, Sect. 8.2.2]. Remarkably, (1.8) is also effective even if the comparison involves only neighboring grid points.

The MGN algorithm performs equally well when calculating the zeros of the Bargmann transform of a signal

composed of a deterministic real-variable function \(f_1\) plus complex white noise with variance \(\sigma ^2>0\). The presence of a certain amount of randomness must be behind the success of the algorithm, as, for \(\sigma =0\), the method cannot be expected to succeed. Indeed, one can engineer a deterministic signal f where the detection of zeros fails, as the value of its Bargmann transform F can be freely prescribed on any given finite subset of the computation domain [24]. We are unaware of performance guarantees for MGN.

1.3 Contribution

In this article, we introduce a variant of MGN, called adaptive minimal grid neighbors (AMN). The algorithm is based on a comparison similar to (1.8) but incorporates an adaptive decision margin, that depends on the particular realization of F. While AMN has the same mild computational complexity and similar practical effectiveness as MGN, we are able to estimate the accuracy and confidence of the computation with AMN in terms of the grid resolution. In this way, we show that AMN is probably approximately correct for the signal model (1.9), in the sense that it computes the zero set with high probability up to the resolution of the data.

On the one hand, we present what to the best of our knowledge are the first formal guarantees for the approximate computation of zero sets of analytic functions from grid values. In fact, besides its main purpose of computation with specific data, the AMN algorithm offers a computationally attractive and provably correct method to simulate zero sets of the Gaussian entire function (2.10), by running the procedure with simulated inputs. On the other hand, our analysis is a first step toward understanding the performance of MGN.

2 Main Result

2.1 The Adaptive Minimal Neighbors Algorithm

We now introduce a new algorithm to compute zero sets of Bargmann transforms. Suppose again that samples of an analytic function \(F :\mathbb {C} \rightarrow \mathbb {C}\) are given on those points of the grid (1.4) that are near the computation domain (1.5), say, on

For each grid point \(\lambda \) strictly inside the computation domain, \(\lambda \in \Lambda _L= \Lambda \cap \Omega _L\), we use the neighboring sample at \(\lambda + \delta \) to compute the following comparison margin:

The margin \(\eta _\lambda \) therefore depends on the particular realization of F. To motivate the definition, note that, when \(\lambda =0\), the maximum in (2.2) is taken over |F(0)| and the absolute value of an incremental approximation of \(\partial F(0)\), where \(\partial F=\frac{1}{2}(\frac{d}{dx} F-i \frac{d}{dy} F)\). The comparison margin thus incorporates the size and oscillation of F at \(z=0\). In general, \(\eta _\lambda \) has a similar interpretation with respect to the covariant derivative

and, indeed, \(\eta _\lambda \) is defined so that

The differential operator \(\bar{\partial }^* F\) plays a distinguished role in the analysis of vanishing orders of Bargmann transforms [10, 12] because it commutes with the translational symmetries of the space that they generate (the Bargmann–Fock shifts defined in Sect. 3.2).

The first step of the algorithm selects all grid points \(\lambda \in \Lambda _L\) that pass the following comparison test:

In contrast to (1.8), the comparison in (2.5) does not involve the immediate grid neighbors of \(\lambda \) but rather those points lying on the square centered at \(\lambda \) with half-side-length \(2\delta \); see Fig. 2.

(In particular, the test only involves grid points \(\mu \in \Lambda _{L+2\delta }\).) Intuitively, the larger distance between \(\lambda \) and \(\mu \) permits neglecting the error in the differential approximation (2.4).

The use of non-immediate neighbors in (2.5) introduces a certain redundancy in the selection of numerical zeros, because the comparison boxes delimited by \(\{\mu : |\lambda -\mu |_\infty = 2\delta \}\) overlap and, as a consequence, one zero of F can trigger multiple positive tests; see Fig. 3. The second step of the algorithm sieves the selected points to enforce a minimal separation of \(5\delta \) between different points. The algorithm is formally specified below.

Remark 2.1

The constants 3/4 in (2.6), 2 in (2.7), and 5 in (2.8) are to some extent arbitrary, and other choices lead to similar results. These particular values are chosen to aid the exposition rather than to optimize practical performance. In fact, these choices are suboptimal at low resolutions (see Sect. 5).

2.2 Performance Guarantees for AMN

To study the performance of the AMN algorithm, we introduce the following input model, which, as we will explain, corresponds to the Bargmann transform of an arbitrary signal contaminated with complex white noise of an arbitrary intensity.

2.2.1 Input Model

We consider a random entire function on the complex plane

where \(F^1:\mathbb {C} \rightarrow \mathbb {C}\) is a deterministic entire function, \(F^0\) is a (zero mean) Gaussian analytic function with correlation kernel:

and \(\sigma >0\) is the noise level. We assume that the deterministic function \(F^1\) satisfies the quadratic exponential growth estimate

for some constant \({\mathrm {A}}\ge 0\).

As for \(F^0\), the assumption means that for each \(z_1, \ldots , z_n \in \mathbb {C}\), \((F^0(z_1), \ldots , F^0(z_n))\) is a normally distributed (circularly symmetric) complex random vector, with mean zero and covariance matrix \(\big [e^{z_k \overline{z_\ell }}\big ]_{k,\ell }\). Alternatively, \(F^0\) can be described as

where \((\xi _n)_{n \ge 0}\) are independent standard complex random variables [19].

2.2.2 Discussion of the Model

The random function \(F^0\) is the Bargmann transform of standard complex white noise \(\mathcal {N}\). As each realization of complex white noise is a tempered distribution, the computation of its Bargmann transform (1.1) is also to be understood in the sense of distributions, as in [8]. (See [6] and [17, Sect. 5.1] for a detailed discussion on this, and alternative approaches.)

We can similarly interpret \(F^1\) as the Bargmann transform of a distribution \(f^1\) on the real line [8]. The assumption (2.11) means precisely that \(f^1\) belongs to the modulation space \(M^\infty (\mathbb {R})\) consisting of distributions with Bargmann transforms bounded with respect to the standard Gaussian weight—or, equivalently, with bounded short-time Fourier transforms [9]. The modulation space \(M^\infty (\mathbb {R})\) includes all square-integrable functions \(f^1 \in L^2(\mathbb {R})\) and also many of the standard distributions used in signal processing.

In summary, the input model (2.9) corresponds exactly to the Bargmann transform of a random signal

where \(f^1 \in M^\infty (\mathbb {R})\) and \(\sigma \cdot \mathcal {N}\) is complex white noise with standard deviation \(\sigma \).

2.2.3 Performance Analysis

We now present the following performance guarantees, pertaining to the computation domain (1.5) and the acquisition grid (2.1). To avoid immaterial technicalities, we assume that the corners of the computation domain lie on the acquisition grid.

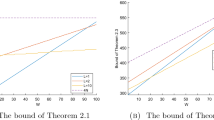

Theorem 2.2

Fix a domain width \(L \ge 1\), a noise level \(\sigma >0\), and a grid spacing \(\delta >0\) such that \(L/\delta \in \mathbb {N}\). Let a realization of a random function F as in (2.9) with (2.10) and (2.11) be observed on \(\Lambda _{L+2\delta }\), and let \(\mathrm {Z}\) be the output of the AMN algorithm.

There exists an absolute constant C such that, with probability at least

there is an injective map \(\Phi :\{F=0\} \cap \Omega _L\rightarrow \mathrm {Z}\) with the following properties:

\(\bullet \) (Each zero is mapped into a near-by numerical zero)

\(\bullet \) (Each numerical zero that is away from the boundary arises in this form)

For each \(\lambda \in \mathrm {Z}\cap \Omega _{L-2\delta }\), there exists \(\zeta \in \{F=0\} \cap \Omega _L\) such that \(\lambda =\Phi (\zeta )\).

A proof of Theorem 2.2 is presented in Sect. 4. We remark some aspects of the result.

-

The AMN algorithm does not require knowledge of the noise level \(\sigma \) and is homogeneous in the sense that F and cF, with \(c \in \mathbb {C} \setminus \{0\}\), produce the same output.

-

Within the estimated success probability, the computation is accurate up to a factor of the grid spacing.

-

The analysis concerns an arbitrary deterministic signal impacted by noise and is uniform over the class (2.11). As usual in such smoothed analysis, the success probability grows as the signal to noise ratio \({\mathrm {A}}/\sigma \) decreases, because randomness helps preclude the very untypical features that could cause the algorithm to fail [25]. In fact, in the noiseless limit \(\sigma =0\), the algorithm could completely fail, since \(F^1\) can be freely prescribed on any finite subset of the plane [24]. For example, irrespectively of its values on the acquisition grid, the deterministic function \(F^1\) could have a cluster of zeros of small diameter that would trigger a single positive minimality test. The proof of Theorem 2.2 shows that such examples are fragile, as the addition of even a moderate amount of noise regularizes the geometry of the zero set.

-

Up to a small boundary effect, the guarantees in Theorem 2.2 comprise an estimate on the Wasserstein distance between the atomic measures supported on \(\{F=0\} \cap \Omega _L\) and on the computed set \(\mathrm {Z}\). More precisely, for a tolerance \(\theta >0\) let us define the boundary-corrected Wasserstein pseudo-distance between two sets \(U,V \subseteq \mathbb {C}\) as

$$\begin{aligned} \mathrm {W}_{L,\theta }(U,V) = \inf _\Phi \max _{z \in U} | \Phi (z)-z|_\infty , \end{aligned}$$where the infimum is taken over all injective maps \(\Phi :U \rightarrow V\) such that \(V \cap \Omega _{L-\theta }\subseteq \Phi (U)\). (The definition is not symmetric in U and V, but this is not important for our purpose.) Then, Theorem 2.2 reads

$$\begin{aligned} \mathbb {P} \Big [ \mathrm {W}_{L,2\delta }\left( \{F=0\} \cap \Omega _L,\mathrm {Z}\right) > 2\delta \Big ] \le C L^2 \exp \bigg (\frac{{\mathrm {A}}^2}{8\sigma ^2}\bigg ) \max \left\{ 1,\log ^2(1/\delta )\right\} \delta ^{4}. \end{aligned}$$ -

The presented analysis concerns a signal contaminated with complex white noise. This is a mathematical simplification; we believe that with more technical arguments a similar result can be derived for real white noise. The case of colored noise seems more challenging and will be the object of future work.

2.3 Numerical Experiments

In Sect. 5, we report on numerical experiments that compare the AMN and MGN algorithms. We also include a modified version of thresholding (ST), that uses a threshold proportional to the grid spacing and incorporates a sieving step as in AMN (while standard thresholding without sieving performs extremely poorly, as seen in Fig. 1).

The performance of AMN, MGN, and ST is first tested indirectly, by using these algorithms to simulate the zero sets of the random functions in the input model (2.9). We then compare theoretically derived statistics of the zeros of (2.9) to empirical statistics obtained from the output of AMN and MGN under various simulated realizations of (2.9).

Second, we perform a consistency experiment that aims at estimating the probability of computing a low-distortion parametrization of the zero set of F, as in Theorem 2.2. Specifically, we simulate a realization of the random input F sampled at high-resolution and use the output of AMN or MGN as a proxy for the ground truth \(\{F=0\}\). We then test the extent to which this set is captured by the output of AMN, MGN, or ST from lower resolution subsets of the same simulated data.

The performance of AMN and MGN is almost identical, although the minimal resolution at which MGN starts to perform well is slightly lower than that for AMN. (This is to be expected, as the constants 2 and 5 used in (2.7) and (2.8) are not adequate for low resolutions, cf. Remark 2.1.) Both AMN and MGN significantly outperform ST. See also Fig. 4 for an illustration.

A realization of \(e^{-\frac{1}{2} |z|^2} |(F^0(z) + F^1(z))|\) with \(F^1\) the Bargmann transform of \(f^1\). The deterministic functions are scaled to obtain the prescribed \({\mathrm {A}}\). Zeros computed with AMN, MGN, and ST are calculated from grid samples with \(\delta =2^{-9}\). Zeros from AMN and MGN coincide (circle), while ST (cross) fails either by detecting false zeros (left) or by not capturing all of them (right)

The favorable performance of AMN is interesting also when the input is just noise, as it gives a fast and provably accurate method to simulate the zeros of the Gaussian entire function (2.10). (The simulations that we present in Sect. 5 use certain heuristic shortcuts to accelerate the simulation of the input (2.10)—see Sect. 5.1; although we do not formally analyze these, they are implicitly validated, as the simulated point process reproduces the expected theoretical statistics.)

All numerical experiments can be reproduced with openly accessible software and our code is available at https://github.com/laescudero/discretezeros. Our implementation of the Bargmann transform uses [4].

2.4 Organization

Section 3 introduces the notation and basic technical tools about analytic functions, Bargmann–Fock shifts, and their applications to random functions and their zeros. Theorem 2.2 is proved in Sect. 4, while numerical experiments are presented in detail in Sect. 5. Conclusions and outlook on future directions are discussed in Sect. 6.

3 Preliminaries

3.1 Notation

For a complex number \(z=x+iy\), we use the notation \(|z|_\infty = \max \{|x|,|y|\}\), while |z| denotes the usual absolute value. The zero set of F is denoted by \(\{F=0\}\). The differential of the (Lebesgue) area measure on the plane will be denoted for short \(dm\), while the measure of a set E is |E|. With a slight abuse of notation, we also denote the cardinality of a finite set Z by \(|Z|\). Squares on the complex plane are denoted by \(Q_r(z) = \{w \in \mathbb {C}: |z-w|_\infty \le r\}\). For two nonnegative functions f, g, we write \(f \lesssim g\) if there exists an absolute constant C such that \(f(x) \le C g(x)\), for all x. We write \(f \asymp g\) if \(f \lesssim g\) and \(g \lesssim f\).

The Wirtinger derivative of a function \(F:\mathbb {C} \rightarrow \mathbb {C}\) is \(\partial F=\frac{1}{2}(\frac{d}{dx} F-i \frac{d}{dy} F)\). When we need to stress on which variable the derivative is taken we write subindices, e.g., \(\partial _w F(z,w)\).

A Gaussian entire function (see [19, Ch. 2] and [22]) is a random function \(F:\mathbb {C} \rightarrow \mathbb {C}\) that is almost surely entire, and such that for every \(z_1, \ldots , z_n \in \mathbb {C}\), \(\big (F(z_1), \ldots , F(z_n)\big )\) is a circularly symmetric complex normal vector. We will be only concerned with the random function F given in (2.9). We also use the notation (1.4), (1.5), (1.6), possibly for distinct values of L.

3.2 Bargmann–Fock Shifts and Stationarity of Amplitudes

The analysis of the AMN algorithm is more transparent when formulated in terms of the Bargmann–Fock shifts. For a function \(F:\mathbb {C} \rightarrow \mathbb {C}\), we let

The amplitude of an entire function F is defined as the weighted magnitude

and satisfies

The comparison margin of the AMN algorithm (2.6) can be expressed in terms of Bargmann–Fock shifts as

and leads to the approximation (2.4) because

(Here and throughout we write \(F'_\lambda (0)\) for \(\partial [F_\lambda ](0)\).) Similarly, in terms of amplitudes, the test (2.7) reads

With respect to the input model (2.9) we note that, if \(F^0\) is the (zero mean) Gaussian entire function with correlation kernel (2.10), then the Bargmann–Fock shifts \(F^0 \mapsto F^0_w\) preserve the stochastics of \(F^0\), as they leave its covariance kernel invariant. As a consequence, for any \(w \in \mathbb {C}\), \(F^0_w(0), \big [F^{0}_{w}\big ]'(0)\) are independent standard complex normal random variables (with zero mean and variance 1). Indeed, by the mentioned invariance it suffices to consider \(w=0\), and, in this case, \(F^0_w(0), \big [F^{0}_{w}\big ]'(0)\) are the coefficients \(\xi _0\) and \(\xi _1\) in (2.12).

3.3 Minimum Principle for Amplitudes

The following weighted version of the minimum principle is at the core of the success of MGN and AMN.

Lemma 3.1

Let \(F:\mathbb {C} \rightarrow \mathbb {C}\) be entire, \(r>0\), and assume that

Then, there exists \(z \in \mathbb {C}\) with \(|z|_\infty \le r\) such that \(F(z)=0\).

Proof

Let \(D := \{ z \in \mathbb {C}: |z|_\infty < r\}\) and suppose that F does not vanish on \(\bar{D}\). Then, the function

is well defined on \(\bar{D}\) and satisfies

By the analyticity of F, \(\Delta \log |F| = 0\) and thus

Hence, the maximum principle for subharmonic functions together with (3.7) implies that \(\log H(z)\) and therefore \(|F(z)| e^{-\frac{1}{2} |z|^2}\) is constant on D. For \(z \in D\), we compute

As F is nonvanishing on D, it follows that \(\partial _z F-\bar{z}F = 0\) on D, and therefore

on D. This contradiction shows that F must vanish on \(\bar{D}\). \(\square \)

3.4 Linearization

In what follows, we derive basic facts about the input model (2.9), and always assume that (2.10) and (2.11) hold.

The following is a strengthened version of [19, Lemma 2.4.4].

Lemma 3.2

Let F be as in (2.9). Then, there exists an absolute constant \(C>0\) such that for all \(L \ge 1\) and \(t\ge {\mathrm {A}}\),

Proof

We consider the Taylor expansion of F:

where \(E_2\) can be bounded in terms of the amplitude (3.2) as

We also note that for \(|z| \le 10\),

Hence, for \(|z| \le 10\),

We apply the previous bounds to \(F_w\), note that, by (3.3), \(G_w(z) = G(z+w)\), and obtain that for \(|z| \le 10\),

Hence,

Let \(G^0\) and \(G^1\) be the amplitudes corresponding to \(F^0\) and \(F^1\), respectively. Then by (2.11),

Hence,

To conclude, we claim that the following excursion bound holds:

where \(C>0\) is an absolute constant. For \(L \le 1/4\), this follows, for example, from [19, Lemma 2.4.4]. In general, we cover the domain with \(\lesssim L^2\) squares of the form \(w+[-1/4,1/4]^2\), apply the previously mentioned bound to \(G^0_w(z)=G^0(z+w)\), and use a union bound. This completes the proof. \(\square \)

3.5 Almost Multiple Zeros

It is easy to see that, almost surely, the random function (2.9) has no multiple zeros. In the analysis of the AMN algorithm, we will also need to control the occurrence of zeros that are multiple up to a certain numerical precision, in the sense that F and its derivative are simultaneously small. The following lemma is a first step in that direction, as it controls the probability of finding a grid point that is an almost multiple zero.

Lemma 3.3

Let F be as in (2.9) and \(\alpha , \beta >0\). Then, the probability that for some grid point \(\lambda \in \Lambda _L\) the following occurs:

is at most \(C L^2 \alpha ^2 \beta ^2 \delta ^{-2} \sigma ^{-4}\), where C is an absolute constant.

Proof

For each grid point \(\lambda \in \Lambda _L\), \(F_\lambda (0)\) and \(F'_\lambda (0)\) are independent complex normal variables with possibly nonzero means \(\mu _1, \mu _2\) and variance \(\sigma ^2\). Therefore,

By Anderson’s lemma [2], the right-hand sides of (3.9) and (3.10) are maximal when \(\mu _1=0\) and \(\mu _2=0\), respectively. Direct computation in those cases yields \(P(|F_\lambda (0)|\le \alpha ) \lesssim \alpha ^2 \sigma ^{-2}\) and \(P(|F'_\lambda (0)|\le \beta ) \lesssim \beta ^2 \sigma ^{-2}\). By independence, the probability of (3.8) is \(\lesssim \alpha ^2 \beta ^2 \sigma ^{-4}\). On the other hand, there are \(\lesssim L^2 \delta ^{-2}\) grid points under consideration, so the conclusion follows from the union bound. \(\square \)

3.6 First Intensity of Zeros

The following proposition is not used in the proof of Theorem 2.2, but rather as a benchmark in the numerical experiments (Sect. 5).

Proposition 3.4

Let F be as in (2.9). Then for every Borel set \(B \subset \mathbb {C}\),

where

Proof

The set of zeros and thus \(\rho _1(\zeta )\) does not change if we scale F by a fixed constant. Hence, by considering the function \(\frac{1}{\sigma }F\) in place of F, we can assume that \(\sigma =1\). The expected number of points \(\{z\in B: F(z)=0\}\) of a Gaussian random field F is given by Kac–Rice’s formula:

where \(p_{F(\zeta )}(0)\) is the probability density of \(F(\zeta )\) at 0; see, e.g., [3, Th. 6.2].

We first compute the value

Second, since F is analytic, the determinant in (3.12) can easily be seen to simplify to \(\left| \det DF(\zeta ) \right| = \left| \partial _\zeta F(\zeta ) \right| ^2\). The joint vector \((F(z), \partial _z F(z))\) has mean \((F^1(z), \partial _z F^1(z))\) and covariance

Following a Gaussian regression approach, see, e.g., [3, Prop. 1.2], the conditional expectation of \(\left| \partial _\zeta F(\zeta ) \right| ^2\) given \(F(\zeta )=0\) is the same as the expectation of \(\left| W \right| ^2\), where \(W=\partial _\zeta F^1(\zeta )-\overline{\zeta }F^1(\zeta ) + W_0\) and \(W_0\) is a circularly symmetric complex Gaussian random variable with variance \(e^{\left| \zeta \right| ^2}\) (and zero mean). Thus,

Inserting (3.15) and (3.13) into (3.12) yields (3.11). \(\square \)

4 Proof of Theorem 2.2

We present the proof of Theorem 2.2 in several steps. The strategy is twofold: (i) to show that computed zeros are close to true ones, we relate the comparison test (2.7) to a similar property involving non-grid points and apply the minimum principle from Lemma 3.1; (ii) to show that true zeros do trigger a detection, we show that the test (2.7) is satisfied by linearly approximating the input function. The two objectives are in tension: while a large comparison margin \(\eta _\lambda \) would facilitate (i) by absorbing possible oscillations between a grid and a close-by non-grid point, a small margin makes the comparison test easier to satisfy and thus facilitates (ii). The core of the proof consists in showing that the adaptive margin (2.6) strikes the desired balance with high probability.

Initially, we bound the Hausdorff distance between the exact and computed zero sets (showing that each of the sets lies in a small neighborhood of the other). We then refine this conclusion to a bound on the Wasserstein distance by analyzing the sieving step.

4.1 Preparations

Let L, \(\sigma \), \(\delta \), and F satisfy the assumptions of the theorem, and denote by \(\mathrm {Z}_1\) the set produced by the AMN algorithm after the selection step. Recall that \(L \ge 1\). By choosing a sufficiently large constant in (2.14), we can assume that \(\delta \le 1/5\); otherwise, the success probability would be trivial. For the same reason, we can assume that

4.2 Excluding bad Events

We let \(\gamma = 8 \sigma \) and wish to apply Lemma 3.2 with \(t=\gamma \sqrt{\log (1/\delta )}\). By (4.1),

Hence, \(t \ge A\) and we can apply Lemma 3.2 to conclude that

for all \(w \in \Omega _{L+1}\) and \(|z| \le 10\), except for an event of probability at most \(C L^2 \exp \big [ -(t-{\mathrm {A}})^2/(8\sigma ^2)\big ]\), where C is an absolute constant. Since \((t-{\mathrm {A}})^2 \ge \frac{t^2}{2}-{\mathrm {A}}^2\), we further have

Second, we select a large absolute constant \(\kappa >1\) to be specified later, and use Lemma 3.3 with

to conclude that, for each grid point \(\lambda \in \Lambda _{L+2\delta }\),

except for an event with probability at most \(\lesssim L^2 \log ^2(1/\delta ) \delta ^4\).

Overall we have excluded events with total probability

In what follows, we show that under the complementary events the conclusions of Theorem 2.2 hold.

4.3 The True Zeros are Adequately Separated

We claim that, by taking \(\kappa \) sufficiently large, the set \(\{F=0\} \cap \Omega _{L+2\delta }\) satisfies:

Suppose that \(\zeta , \zeta ' \in \{F=0\} \cap \Omega _{L+2\delta }\) are such that \(0<|\zeta -\zeta '|_\infty \le 7 \delta \). Since \(L/\delta \in \mathbb {N}\), we can select a lattice point \(\lambda \in \Lambda _{L+2\delta }\) such that \(0 < |\lambda -\zeta | \le \delta \). We now use repeatedly (4.2) and (4.3).

First, we use (4.2) with \(w=\zeta \) and \(z=\zeta '-\zeta \), and note that \(F_\zeta (\zeta '-\zeta )=0\) and \(F_{\zeta }(0)=0\) while \(|\zeta -\zeta '| \le \sqrt{2} |\zeta -\zeta '|_{\infty } \le 7 \sqrt{2} \delta \le 10\) to obtain:

Since \(\zeta \not = \zeta '\), we conclude:

Second, we similarly apply (4.3) with \(w=\zeta \) and \(z=\lambda -\zeta \), to obtain

Combining the last equation with (4.6) yields

Third, we apply (4.2) with \(w=\lambda \) and \(z=\zeta -\lambda \) to obtain

Note that \(|F_\lambda (0)| = \left| F_\zeta (\lambda -\zeta ) \right| \cdot e^{-\frac{1}{2} |\lambda -\zeta |^2}\). Hence, combining (4.7) and (4.8) we obtain:

Since \(0<|\lambda -\zeta | \le \delta \) and \(|\zeta -\zeta '|_{\infty }\le 7\delta \), we conclude that

Assuming as we may that \(\kappa > 11\), this contradicts (4.4). Thus, (4.5) must indeed hold.

4.4 Linearization Holds with Estimated Slopes

For each \(\lambda \in \Lambda _L\), we use the notation

and observe that, by (4.2),

Combining this with (4.3), we conclude that for \(|z|_{\infty } \le 2\delta \) and \(\lambda \in \Lambda _L\),

4.5 After the Selection Step, Each True Zero is Close to a Computed Zero

We show that

(Recall that \(\mathrm {Z}_1\) is the set produced after the selection step, while the cube \(Q_\delta (0)\) is defined in Sect. 3.1.)

Let \(\zeta \in \Omega _L\) be a zero of F. Since \(L/\delta \in \mathbb {N}\), we can find \(\lambda \in \Lambda _L\) such that \(|\zeta -\lambda |_\infty \le \delta /2\). We show that \(\lambda \in \mathrm {Z}_1\).

Let us first prove that

Suppose to the contrary that \(|\tau _\lambda | < \kappa \gamma \sqrt{\log (1/\delta )} \cdot \delta \). We will show that this contradicts (4.4). Assuming as we may that \(\kappa \ge 1\), by (4.9),

while, by (4.2),

provided \(\kappa \ge \frac{1+\sqrt{2}}{2-\sqrt{2}}\). This indeed contradicts (4.4). We conclude that (4.13) holds.

Second, we show that \(\lambda \in \mathrm {Z}_1\) by showing that the test (2.7) is satisfied. By (4.10), and since \(|\zeta -\lambda |_\infty \le \delta /2\), we have

Choosing \(\kappa \ge \frac{8}{3-2\sqrt{2}}\), (4.14) and (4.13) further imply

Hence,

Let \(\mu \in \Lambda \) be an arbitrary lattice point with \(|\mu -\lambda |_{\infty }=2\delta \). By (4.11),

Together with (4.14), this implies

Finally, we use (4.13) to analyze the obtained comparison margin against (4.16):

where we fixed the value of \(\kappa \) so that \(\frac{3-2\sqrt{2}}{4} \kappa - 15 \ge 0\). Therefore, the point \(\lambda \) passes the selection test (2.7) (as formulated in (3.5)), i.e., \(\lambda \in \mathrm {Z}_1\), as claimed.

4.6 After the Selection Step, Each Computed Zero is Close to a True Zero

We show that

Let \(\lambda \in \mathrm {Z}_1\) be a computed zero, and let us find a zero z of F with \(|\lambda -z|_\infty \le 2 \delta \). In terms of the Fock shift \(F_\lambda \), the success of the test (2.7) reads,

see (3.5). For an arbitrary \(z \in \mathbb {C}\) with \(|z|_\infty = 2 \delta \), we can find a lattice point \(\mu \in \Lambda \) with \(|\mu |_\infty = 2\delta \) such that \(|z-\mu |=|z-\mu |_\infty \le \delta /2\). Hence, by (4.11),

By (4.4) and (4.9), either \(|\tau _\lambda |\delta \ge \kappa \gamma \sqrt{\log (1/\delta )} \cdot \delta ^2\) or \(|F_\lambda (0)| \ge \kappa \gamma \sqrt{\log (1/\delta )} \cdot \delta ^2 \ge |\tau _\lambda |\delta \). Choosing \(\kappa \ge 88\) ensures in the first case that

and in the second case

Combining this with (4.18), we conclude that

By Lemma 3.1, there exists \(w_\lambda \in \mathbb {C}\) with \(|w_\lambda | \le 2 \delta \) such that \(F_\lambda (w_\lambda )=0\). This means that \(z_\lambda :=w_\lambda +\lambda \) is a zero of F that satisfies \(|z_\lambda -\lambda |_\infty \le 2 \delta \), as desired.

4.7 Definition of the Map \(\Phi \)

We now look into the sieving step of the AMN algorithm and analyze the final output set \(\mathrm {Z}\).

Given \(\zeta \in \{F=0\} \cap \Omega _L\), we claim that there exists \(\lambda \in \mathrm {Z}\) such that \(|\zeta - \lambda |_\infty \le 2 \delta \). Suppose to the contrary that

By (4.12), there exists \(\mu \in \mathrm {Z}_1\) such that \(|\zeta -\mu |_\infty \le \delta /2\). By (4.20), \(\mathrm {Z}\subsetneq \mathrm {Z}\cup \{\mu \}\). We claim that \(\mathrm {Z}\cup \{\mu \}\) is \(5\delta \)-separated. For this, it suffices to check that

If \(\lambda \in \mathrm {Z}\), by (4.17), there exist \(\zeta ' \in \{F=0\}\) such that \(|\zeta '-\lambda |_\infty \le 2 \delta \). If \(\zeta '=\zeta \), then \(|\zeta -\lambda |_\infty \le 2 \delta \), contradicting (4.20). Thus, \(\zeta \not = \zeta '\), while, \(\zeta ' \in \mathrm {Z}+Q_{2\delta } \subset \Omega _{L+2\delta }\). Hence, we use (4.5) to conclude that

Thus, the set is \(5\delta \)-separated:

contradicting the maximality of \(\mathrm {Z}\). It follows that a point \(\lambda \in \mathrm {Z}\) such that \(|\zeta - \lambda |_\infty \le 2 \delta \) must exist. We choose any such point, and define \(\Phi (\zeta ) = \lambda \).

4.8 Verification of the Properties of \(\Phi \)

By construction, the map \(\Phi \) satisfies (2.15). We now show the remaining properties. To show that \(\Phi \) is injective, assume that \(\Phi (\zeta )=\Phi (\zeta ')\). Then, by (2.15),

Hence, by (4.5), we must have \(\zeta =\zeta '\).

Finally, assume that \(\lambda \in \mathrm {Z}\cap \Omega _{L-2\delta }\) and use (4.17) to select a zero \(\zeta \in \{F=0\}\) such that \(|\zeta -\lambda |_\infty \le 2 \delta \). Then \(\zeta \in \Omega _L\), and, by (2.15),

As \(\lambda , \Phi (\zeta ) \in \mathrm {Z}\) and \(\mathrm {Z}\) is \(5\delta \)-separated (see (2.8)), we conclude that \(\lambda =\Phi (\zeta )\), as claimed.

This concludes the proof of Theorem 2.2. \(\square \)

5 Numerical Experiments

In this section, we perform a series of tests of the AMN algorithm and compare its performance with MGN and thresholding supplemented with a sieving step (ST).

5.1 Simulation

We first discuss how to simulate samples from the input model (2.9). To make simulations tractable, we introduce a fast method to draw samples of the Gaussian entire function \(F^0\) given by (2.10) on the finite grid (1.6). The method is based on the relation between the Bargmann transform and the short-time Fourier transform (1.2) and amounts to discretizing the underlying signal f.

We fix \(L>0\), \(T>0\), and \(\delta > 0\). For convenience, we further let \(\sigma = 1\) and assume that \(T\delta ^{-1}\) is an integer. Recall that we also assumed that \(L\delta ^{-1}\) is an integer.

To model a discretization of \(\mathcal {N}\), we take i.i.d. noise samples in the interval \([-T-L, T+L] \subseteq \mathbb {R}\) spaced by a distance \(\delta \). More specifically, we consider a random vector \(w=(w_{-(T+L)\delta ^{-1}}, \ldots , w_{(T+L)\delta ^{-1}})\), where the elements \(w_{s} \sim \mathcal {N}_\mathbb {C}(0, \delta )\) are independent, i.e., \(\mathbb {E}[w_s \overline{w_{s}}]=\delta \), and \(\mathbb {E}[w_s \overline{w_{s'}}]=0\) for \(s \not = s'\). Here, \(w_{s}\) can be interpreted as an integration of \(\mathcal {N}\) over the interval \([\delta s, \delta (s+1)]\).

Let \(f^1:\mathbb {R} \rightarrow \mathbb {C}\), \(\varphi = g|_{[-T,T]}\) the restriction of \(g(t) = (\tfrac{2}{\pi })^{\frac{1}{4}} \,e^{-t^2}\) to the compact support \([-T, T]\) and define

for \(k,j \in \{-L\delta ^{-1}, \dots , L\delta ^{-1}\}\). The mean of \(\widehat{H}\) is given by

and approximates the integral

with \(x= \delta k\) and \(y= \delta j\). Furthermore, the covariance of \(\widehat{H}\) is

For small \(\delta \) and sufficiently large T, this is an approximation of the integral

with \(x= \delta k\), \(y= \delta j\), \(u= \delta k'\), and \(v= \delta j'\). Therefore, if we take T large enough so that we can ignore the numerical error introduced by the truncation of the normalized Gaussian window g, we obtain in (5.1) a random Gaussian vector whose covariance structure approximates the right-hand side of (5.2) on the grid \(\Lambda _L\), provided that \(\delta \) is small.

To obtain a vector whose covariance structure approximates (2.10), we proceed as follows. By conjugating z in (5.1) and multiplying by the deterministic factor \(e^{-i x y}\), we obtain an approximate sampling of (2.9) with weight \(e^{-\frac{1}{2} |z|^{2}}\):

for \(z=\delta k + i \delta j\). We carry out all computations with the weighted function (5.3), as the unweighted version can lead to floating point arithmetic problems. Note that, for a grid point \(\lambda \), the comparison margin (2.6) can be expressed in terms of \(e^{-\frac{1}{2}|\, \cdot \,|^{2}} F(\,\cdot \,)\) as

5.2 Specifications for the Experiments

5.2.1 Implementation of the Sieving Step in AMN

In order to fully specify the AMN algorithm, we need to fix an implementation of the sieving step, which provides a subset \(Z \subseteq Z_1\) satisfying (2.8), and such that no proper superset \(Z_1 \supseteq \tilde{Z} \supsetneq Z\) satisfies (2.8). We choose an implementation that uses knowledge of the input F to decide which points are to be discarded. We assume that \(Z_1\) is non-empty, otherwise Z is trivial.

The resulting set \(Z \subseteq Z_1\) always satisfies (2.8). Moreover, any superset \(\tilde{Z} \supsetneq Z\) included in \(Z_1\) must contain some of the discarded points \(\mu \in Z_1\), which by construction satisfy (5.5) for some \(\lambda \in Z\), and therefore \(\tilde{Z}\) is not \(5\delta \) separated. Thus, Z is indeed maximal with respect to (2.8).

The choice of \(\lambda \in Z_1^\text {aux}\) in Step 3 of S1 is not essential. Our particular choice is motivated by finding the zeros of F; however, we did not observe any significant performance difference when using other algorithms than S1 as the sieving step of AMN.

5.2.2 Specification of the Compared Algorithms

Given the values of a function \(F:\mathbb {C} \rightarrow \mathbb {C}\) on the grid \(\Lambda _L\), we consider the following three algorithms to compute an approximation of \(\{F=0\} \cap \Lambda _{L-1}\).

-

AMN: the AMN algorithm run with domain length \(L-1\) and with sieving step S1 implemented as described in Sect. 5.2.1,

-

MGN: outputs the set of all grid points \(\lambda \in \Lambda _{L-1}\) such that

$$\begin{aligned} e^{-\frac{1}{2}|\lambda |^{2}}|F(\lambda )| \le e^{-\frac{1}{2}|\mu |^{2}}|F(\mu )|, \quad |\lambda -\mu |_{\infty }=\delta . \end{aligned}$$(5.6) -

ST: outputs the set of grid points \(\lambda \in \Lambda _{L-1}\) obtained as the result of applying the sieving algorithm S1 to

$$\begin{aligned} \left\{ \lambda \in \Lambda _{L-1}: e^{-\frac{1}{2}|\lambda |^2} |F(\lambda )| \le 2 \delta \right\} . \end{aligned}$$

Note that each of the algorithms relies only on the samples of F on \(\Lambda _{L+2\delta -1}\). The use of a common input grid \(\Lambda _L\) simplifies the notation when considering various grid spacing parameters \(\delta \).

5.2.3 Varying the Grid Resolution

In the numerical experiments, we start with a small minimal spacing value \(\delta =\delta _{\text {Hi}}\), that provides a high-resolution approximation in (5.1), and simulate F as in Sect. 5.1. We then incrementally double \(\delta \) to produce coarser grid resolutions and subsample F accordingly. More precisely, each element of the grid \(\Lambda _L\) can be written as

for adequate M, \(N >0\). If F is given on \(\Lambda _L\), we subsample it by setting

for values (k, l) such that the indices \(2k +i 2l\) are valid.

5.3 Faithfulness of Simulation of Zero Sets

As a first test, we simulate random inputs from the model (2.9), as specified in Sect. 5.1, apply the above-described three different algorithms, and test whether this process faithfully simulates the zero sets of the random function (2.9). To this end, we estimate first or second order statistics on the computed zero sets by averaging over several realizations of (2.9), and compare them to the corresponding expected values concerning the zero sets of (2.9).

5.3.1 No Deterministic Signal

We first consider the case \(F^1\equiv 0\) and \(\sigma =1\) in (2.9). Let \(\widehat{F}_{1}^{\delta _{\text {Hi}}}, \ldots , \widehat{F}_{R}^{\delta _{\text {Hi}}}\) be R independent realizations of samples of (2.9) on a grid \(\Lambda _L\) with resolution \(\delta =\delta _{\text {Hi}}\), simulated as in Sect. 5.1. These are then subsampled with (5.8) yielding \(F_{r}^{\delta _{k}} = \mathcal {S}^{(k)}(F_{r}^{\delta _{\text {Hi}}})\) and used as input for AMN, MGN, and ST, as specified in Sect. 5.2.2. The corresponding output sets are denoted \(\widehat{Z}_{r}^{\delta }\) where we omit the dependence on the method to simplify the notation. These sets should approximately correspond to \(\{F_r=0\} \cap \Lambda _{L-1}\), for R independent realizations of (2.9). We now put that statement to test.

The expected number of zeros of the random function F on a Borel set \(\Theta \subseteq \mathbb {C}\) is

see, e.g., [19, Sect. 2.4]. We define the following empirical estimator for the first intensity \(\rho _1=1/\pi \):

If the computed set \(\widehat{Z}_{r}^{\delta }\) were replaced by \(\{F=0\}\) in (5.10), the estimator would be unbiased. The mean of the estimation error \(\widehat{\rho }(\Theta , r, \delta ) - 1/\pi \) thus measures the quality of the algorithm used to compute \(\widehat{Z}_{r}^{\delta }\), as it should be close to zero when the algorithm is faithful. In Table 1, we present the empirical means and the empirical standard deviations of the estimation error over \(R=1000\) independent realizations \(F_{r}^{\delta }\) for \(L=7\), \(\Theta = \Omega _{L-1}\), \(T=6\), and various grid sizes \(\delta \).

To derive a benchmark for the empirical standard deviation of \(\widehat{\rho }(\Theta , r, \delta ) - 1/\pi \), we express the variance of \(|\{F=0\} \cap \Theta |/ |\Theta |\) in terms of the second intensity function \(\rho _2(\zeta , \zeta ')\) of \(\{F=0\}\) as follows:

A formula for \(\rho _2(\zeta , \zeta ')\) is provided in [18] and numerical integration over \(\Theta = \Omega _{L-1}\) results in \(\sqrt{{\text {Var}}[|\{F=0\} \cap \Theta |/ |\Theta |]} \approx 0.01165\). We see in Table 1 that the methods AMN and MGN almost perfectly match the expected mean and standard deviation while ST does not.

5.3.2 Deterministic Signal Plus Noise

We now consider the input model (2.9) with \(F^1 \not =0\) and \(\sigma =1\). We choose \(F^1\) from Table 2 and rescale it so that \({\mathrm {A}}= \sup _{\zeta \in \mathbb {C}} e^{-{\frac{1}{2} |\zeta |^2}}|F^1(\zeta )| \) holds for the signal intensities \({\mathrm {A}}=1\) and 100.

We only test first-order statistics of the computed zero sets. The benchmark is provided by Proposition 3.4: the expected number of zeros of F in \(\Theta \) is

where \(\rho _1\) is given by (3.11) (with \(\sigma =1\)). For each of the tested algorithms, we define an estimator for the error resulting from replacing \(\{F=0\}\) in (5.12) by the computed set \(\widehat{Z}_{r}^{\delta }\) (for \(1\le r\le R\)):

As before, we simulate \(R=100\) realizations of \(F=F^0+F^1\) on a grid with a certain spacing \(\delta \). The empirical average of \(\widehat{\beta }(\Theta , r, \delta )\) over all realizations is denoted \(\widehat{\beta }_{R}(\Theta ,\delta )\). As \(\rho _1\) is not constant when \(F^1 \not = 0\), this time we calculate \(\widehat{\beta }_{R}(\Theta ,\delta )\) on \(\Theta =\Omega _{L_1}\) for several values of \(L_1\).

The results for \(\delta =2^{-9}\) are depicted in Fig. 5. We see that the performance of AMN and MGN is indistinguishable, while ST may perform poorly even at such high resolution. Lower grid resolutions yield similar results.

5.4 Failure Probabilities and Consistency as Resolution Decreases

Having tested the statistical properties of the computed zero sets under the input model (2.9), we now look into the accuracy of the computation for an individual realization F. We aim to test the existence of a map as in Theorem 2.2, that assigns true zeros to computed ones with small distortion and almost bijectively. As a proxy for the (unavailable) ground truth \(\{F=0\}\), we will use the output of AMN from data at very high resolution (computations with MGN yield indistinguishable results). We thus conduct a consistency experiment, where the zero set of the same realization of F is computed from samples on grids of different resolution, and the existence of a map as in Theorem 2.2 between both outputs is put to test.

Suppose that samples of a function F are simulated on a high-resolution grid \(\Lambda _L\) with spacing \(\delta =\delta _{\text {Hi}}\) and restricted to the low-resolution grid \(\Lambda _L\) with spacing \(\delta =\delta _{\text {Lo}}\) by subsampling. We compute \(\widetilde{Z}_{}^{\delta _{\text {Hi}}}\subseteq \Omega _{L-1}\) from the high-resolution data using AMN, and \(\widehat{Z}_{}^{\delta _{\text {Lo}}}\subseteq \Omega _{L-1}\) from the low-resolution data, using one of the algorithms described in Sect. 5.2.2.

Second we construct a set \(U \subseteq \widetilde{Z}_{}^{\delta _{\text {Hi}}}\) and a map \(\phi :U \rightarrow \widehat{Z}_{r}^{\delta _{\text {Lo}}}\) with the following greedy procedure:

The resulting function \(\phi \) is injective and satisfies

We say that the computation of \(\widehat{Z}_{r}^{\delta _{\text {Lo}}}\) was certified to be accurate if

In this case, the map \(\phi \) satisfies properties analogous to the ones in Theorem 2.2. Conceivably, other such maps may exist even if the one constructed in the greedy fashion fails to satisfy (5.14). We define the following computation certificate:

The experiment to estimate failure probabilities as a function of the grid resolutions is fully specified as follows. We consider the input model (2.9) with \(\sigma =1\). We choose \(F^1\) from Table 2 and rescale it so that \({\mathrm {A}}= \sup _{\zeta \in \mathbb {C}} e^{-\frac{1}{2} |\zeta |^2}|F^1(\zeta )| \) holds for the signal intensities \({\mathrm {A}}=1\) and 100. We fix \(L>0\) and \(\delta _{\text {Hi}}>0\) and let \(\widehat{F}_{1}^{\delta _{\text {Hi}}}, \ldots , \widehat{F}_{R}^{\delta _{\text {Hi}}}\) be R independent realizations of samples of (2.9) on a grid \(\Lambda _L\) with resolution \(\delta =\delta _{\text {Hi}}\), simulated as in Sect. 5.1. These are then subsampled j times with (5.8) yielding \(F_{r}^{\delta _{k}} = \mathcal {S}^{(k)}(F_{r}^{\delta _{\text {Hi}}})\), \(1 \le k \le j\).

We use AMN with input \(F_{r}^{\delta _{\text {Hi}}}\) to obtain a set \(\widetilde{Z}_{r}^{\delta _{\text {Hi}}}\). Further, for each \(1 \le k \le j\), we use each of the algorithms \(M = \text {AMN, MGN, or ST}\) with input \(F_{r}^{\delta _k}\) to obtain sets \(\widehat{Z}_{r,M}^{\delta ^k}\). Finally, we compute all the certificates \(\mathcal {M}(\widetilde{Z}_{r}^{\delta _{\text {Hi}}}, \widehat{Z}_{r,M}^{\delta _k})\) and average them over all realizations to obtain the following estimated upper bound for the failure probability of the method M with grid spacing \(\delta =\delta _k\):

We present in Table 3 values obtained for \(p(\delta _k,M)\) for a resolution starting as high as \(\delta _{\text {Hi}}=2^{-9}\), with a truncation of the window g at \(T=6\), in the target domain \(\Omega _{L-1}\) for \(L=7\), and \(R=1000\) realizations of a zero-mean \(F\). We also present the results for \(F^1\) as in Table 2, rescaled to achieve a signal intensity \({\mathrm {A}}=1\) or \({\mathrm {A}}=100\). We see that both AMN and MGN deliver very low failure probabilities (with MGN slightly outperforming AMN at lower resolutions). In contrast, ST delivers large failure probabilities even at high resolution.

6 Conclusions and Outlook

We analyzed the AMN algorithm under a stochastic input model aimed to describe the performance of the method in practice [25]. One limitation of our analysis is the assumption that grid samples of the Bargmann transform are exactly given, while, more realistically, acquired data corresponds to averages of the signal values resulting from analog to digital conversion and numerical integration. Second, we considered complex-valued white noise, while in practice noise may also be colored or real-valued. We understand that the techniques used to prove Theorem 2.2 are general enough to allow for a refinement of the result in these directions. Similarly, we expect to be able to adapt our analysis of AMN to other ensembles of analytic functions, which are relevant in connection to other signal transforms. A more challenging open direction is the investigation of rigorous performance guarantees for MGN, which remains the algorithm of choice in practice.

References

L. D. Abreu, A. Haimi, G. Koliander, and J. L. Romero. Filtering with wavelet zeros and gaussian analytic functions. Technical report, arXiv:1807.03183v3.

T. W. Anderson. The integral of a symmetric unimodal function over a symmetric convex set and some probability inequalities. Proc. Amer. Math. Soc., 6:170–176, 1955.

J.-M. Azaïs and M. Wschebor. Level sets and extrema of random processes and fields. John Wiley & Sons, Inc., Hoboken, NJ, 2009.

D. H. Bailey and P. N. Swarztrauber. The fractional Fourier transform and applications. SIAM Rev., 33(3):389–404, 1991.

R. Bardenet, J. Flamant, and P. Chainais. On the zeros of the spectrogram of white noise. Appl. Comput. Harmon. Anal., 48(2):682–705, 2020.

R. Bardenet and A. Hardy. Time-frequency transforms of white noises and Gaussian analytic functions. Appl. Comput. Harmon. Anal., 50:73–104, 2021.

V. Bargmann. On a Hilbert space of analytic functions and an associated integral transform. Comm. Pure Appl. Math., 14:187–214, 1961.

V. Bargmann. On a Hilbert space of analytic functions and an associated integral transform. Part II. A family of related function spaces. Application to distribution theory. Comm. Pure Appl. Math., 20:1–101, 1967.

Á. Bényi and K. A. Okoudjou. Modulation Spaces: With Applications to Pseudodifferential Operators and Nonlinear Schrödinger Equations. Applied and Numerical Harmonic Analysis. Birkhäuser Basel, 2020.

S. Brekke and K. Seip. Density theorems for sampling and interpolation in the Bargmann-Fock space. III. Math. Scand., 73(1):112–126, 1993.

I. Daubechies and A. Grossmann. Frames in the Bargmann space of entire functions. Comm. Pure Appl. Math., 41(2):151–164, 1988.

L. A. Escudero, A. Haimi, and J. L. Romero. Multiple sampling and interpolation in weighted Fock spaces of entire functions. Complex Anal. Oper. Theory, 15(2):Paper No. 35, 32, 2021.

P. Flandrin. Time–frequency filtering based on spectrogram zeros. IEEE Signal Processing Letters, 22(11):2137–2141, 2015.

P. Flandrin. The sound of silence: Recovering signals from time-frequency zeros. In 2016 50th Asilomar Conference on Signals, Systems and Computers, pages 544–548, 2016.

P. Flandrin. Explorations in time-frequency analysis. Cambridge University Press, 2018.

T. J. Gardner and M. O. Magnasco. Sparse time-frequency representations. Proc. Nat. Acad. Sc., 103(16):6094–6099, 2006.

A. Haimi, G. Koliander, and J. L. Romero. Zeros of Gaussian Weyl-Heisenberg functions and hyperuniformity of charge. J. Stat. Phys., 187(3):Paper No. 22, 41, 2022.

J. H. Hannay. Chaotic analytic zero points: exact statistics for those of a random spin state. J. Phys. A, 29(5):L101–L105, 1996.

J. B. Hough, M. Krishnapur, Y. Peres, and B. Virág. Zeros of Gaussian analytic functions and determinantal point processes, volume 51 of University Lecture Series. American Mathematical Society, Providence, RI, 2009.

G. Koliander, L. D. Abreu, A. Haimi, and J. L. Romero. Filtering the continuous wavelet transform using hyperbolic triangulations. In 2019 13th International conference on Sampling Theory and Applications (SampTA), pages 1–4. IEEE, 2019.

Y. I. Lyubarskiĭ. Frames in the Bargmann space of entire functions. In Entire and subharmonic functions, volume 11 of Adv. Soviet Math., pages 167–180. Amer. Math. Soc., Providence, RI, 1992.

F. Nazarov and M. Sodin. What is\(\ldots \)a Gaussian entire function? Notices Amer. Math. Soc., 57(3):375–377, 2010.

K. Seip. Density theorems for sampling and interpolation in the Bargmann-Fock space. I. J. Reine Angew. Math., 429:91–106, 1992.

K. Seip and R. Wallstén. Density theorems for sampling and interpolation in the Bargmann-Fock space. II. J. Reine Angew. Math., 429:107–113, 1992.

D. A. Spielman and S.-H. Teng. Smoothed analysis: an attempt to explain the behavior of algorithms in practice. Communications of the ACM, 52(10):76–84, 2009.

Funding

Open access funding provided by Austrian Science Fund (FWF).

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Thomas Strohmer.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

L. A. E., G. K., and J. L. R. gratefully acknowledge support from the Austrian Science Fund (FWF): Y 1199 and P 29462. N. F. gratefully acknowledges support from the Israel Science Foundation (ISF) grant no. 1327/19.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Escudero, L.A., Feldheim, N., Koliander, G. et al. Efficient Computation of the Zeros of the Bargmann Transform Under Additive White Noise. Found Comput Math 24, 279–312 (2024). https://doi.org/10.1007/s10208-022-09593-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10208-022-09593-5

Keywords

- Bargmann transform

- Random analytic function

- Short-time Fourier transform

- Zero set

- Computation

- Wasserstein metric